Using HAProxy as a reverse proxy for AWS microservices

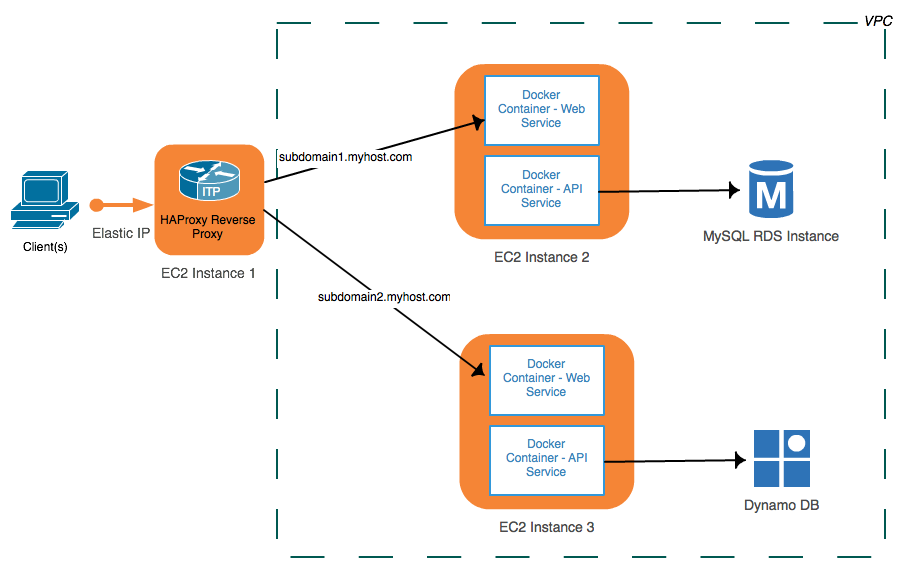

Amazon’s EC2 micro instances offer a very affordable option for hosting a Docker-based micro-services architecture. Each micro instance can host several Docker containers. For example, you may have a simple Node.js-based web application that you would like exposed as subdomain1.myhost.com and another Java/Tomcat webapp surfaced at subdomain2.myhost.com. Each could be hosted through a separate (and perhaps clustered) Docker container, with additional containers used for exposing API-services. The architecture might resemble:

Notice the use of HAProxy, which serves here as both a load balancer and a reverse proxy. It is housed on its own EC2 micro instance and directs inbound traffic to its destination based upon subdomain routing rules (HAProxy supports a wide variety of routing rules, not unlike Apache mod_proxy). The DNS entries for both subdomain1 and subdomain2 point to the same public IP address, where HAProxy receives the traffic. Its routing rules then forward each inbound request to the Docker container hosting the appropriate web site.

Configuring HAProxy is very straightforward, which may explain its popularity within the open source community (learn more about HAProxy at http://www.haproxy.org/). Let’s look at an example HAProxy configuration file that would support the above architecture (haproxy.cfg is typically found at /etc/haproxy). We’ll start with the global and defaults sections, which you can likely leave “as-is” for most scenarios:

    global
        maxconn 4096
        user haproxy
        group haproxy
        daemon
        log 127.0.0.1 local0 debug

    defaults
        log global
        mode http
        option httplog
        option dontlognull
        retries 3
        option redispatch
        option http-server-close
        option forwardfor
        maxconn 2000
        timeout connect 5s
        timeout client 15m
        timeout server 15m

Next, we’ll move on to the “frontend” section, which describes a set of listening sockets accepting client connections and holds the rules that route traffic to an appropriate host for processing.

    frontend public
        bind *:80

        # Define hosts
        acl host_subdomain1 hdr(host) -i subdomain1.myhost.com
        acl host_subdomain2 hdr(host) -i subdomain2.myhost.com

        ## figure out which one to use
        use_backend subdomain1 if host_subdomain1
        use_backend subdomain2 if host_subdomain2

The “bind” directive indicates which address and port HAProxy will listen on (in this case, port 80 on all addresses). The “acl” directives define the criteria for routing the inbound traffic. The first parameter that follows the directive is simply an internal name for later reference, and the remaining parameters define how to match some element of the inbound request that serves as the basis for routing (the HAProxy docs provide more details). In this case, the first “acl” directive states that if the inbound request has a Host header of “subdomain1.myhost.com” (compared case-insensitively because of -i), the acl named “host_subdomain1” matches. At this point we are only defining the matching criteria, not acting on them.
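As a rough sketch of what other matching methods can look like, the fragment below places two extra ACLs next to the exact-match one from the example. The ACL names host_any_myhost and host_api, and the "api." prefix, are assumptions made up for illustration; hdr_end and hdr_beg are the suffix and prefix variants of hdr.

```haproxy
frontend public
    bind *:80

    # Exact, case-insensitive match on the Host header (as in the example above)
    acl host_subdomain1 hdr(host) -i subdomain1.myhost.com

    # Assumption: match any host name that ends in .myhost.com
    acl host_any_myhost hdr_end(host) -i .myhost.com

    # Assumption: match any host name that starts with "api."
    acl host_api hdr_beg(host) -i api.
```

An ACL on its own does nothing; it only takes effect once a later directive refers to it by name.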

The next part of this section is the “use_backend” directive, which maps a matching “acl” rule to a specific backend for processing. The first one says that if the inbound request matched the “host_subdomain1” acl, then use the backend named “subdomain1”. Those backends are defined in the sections that follow:

    backend subdomain1
        option httpclose
        option forwardfor
        cookie JSESSIONID prefix
        server subdomain-1 someawshost.amazonaws.com:8080 check

    backend subdomain2
        option httpclose
        option forwardfor
        cookie JSESSIONID prefix
        server subdomain-2 localhost:8080 check

In the first “backend” section, we define the backend that the frontend referred to by the name “subdomain1” (these are arbitrary names; just be sure they match what you have in the frontend’s use_backend directives). The “server” directive (whose first parameter is also an arbitrary name) then identifies the host/port to forward the request to, which in this case is someawshost.amazonaws.com:8080. The check parameter enables periodic health checks of that server, which matter most when load balancing across several servers. You can define multiple server directives if you are using load balancing/clustering.
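To illustrate the load-balanced case, here is a minimal sketch of a backend with two servers. The host names host-a.example.internal and host-b.example.internal, the server names, and the cookie values s1 and s2 are all hypothetical, and round-robin is just one of the balancing algorithms HAProxy offers.

```haproxy
backend subdomain1
    option forwardfor

    # Assumption: rotate requests evenly across the servers listed below
    balance roundrobin

    # Prefix the application's JSESSIONID cookie with the server's cookie
    # value so that a returning client is sent back to the same server
    cookie JSESSIONID prefix

    # Hypothetical hosts; with "check", a server that fails its health
    # check is taken out of rotation until it passes again
    server subdomain-1a host-a.example.internal:8080 cookie s1 check
    server subdomain-1b host-b.example.internal:8080 cookie s2 check
```

The per-server cookie values are what make the “cookie JSESSIONID prefix” line effective; without them HAProxy has nothing to prefix and no way to tie a session to a server.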

There are a multitude of configuration options available for HAProxy, but this simple example illustrates how easily you can use it as a reverse proxy, redirecting inbound traffic to its final destination.

APIs & Microservices

One of the challenges in devising an API solution that runs on microservices is how to “carve up” the various service calls so that they can be hosted in separate Docker containers. The subdomain-based approach (i.e., subdomain.myhost.com) I described earlier for standard web applications isn’t really practical when you want to present a uniform API to your development community. However, the same result can be achieved in much the same fashion using URL path-based reverse proxy rules. For example, consider these two fictitious API calls:

    GET api.mycompany.com/contracts/{contractId}
    GET api.mycompany.com/customers/{customerId}

The first example is a RESTful GET call that retrieves a contract JSON object for the specified contractId. The second follows the same pattern to fetch a customer record. As you can see, the first path element is really the “domain” associated with the web service call. It can serve as the basis for separating each domain’s calls into their own microservice Docker container(s). One nice benefit of doing so is that one of those domains may typically experience more traffic than the other. By splitting them into separate services, you can perform targeted scaling.
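A path-based version of the earlier frontend might look like the sketch below. The ACL names, the backend names contracts_api and customers_api, and the container ports 8081 and 8082 are assumptions for illustration only; path_beg matches on the beginning of the request path.

```haproxy
frontend public
    bind *:80

    # Only apply the path rules to the API host name
    acl host_api hdr(host) -i api.mycompany.com

    # Match on the first path element of the request
    acl path_contracts path_beg /contracts
    acl path_customers path_beg /customers

    # Conditions listed side by side are ANDed together
    use_backend contracts_api if host_api path_contracts
    use_backend customers_api if host_api path_customers

backend contracts_api
    option forwardfor
    # Hypothetical container host/port for the contracts service
    server contracts-1 localhost:8081 check

backend customers_api
    option forwardfor
    # Hypothetical container host/port for the customers service
    server customers-1 localhost:8082 check
```

Because each domain has its own backend, more server lines can be added to whichever one receives the most traffic without touching the other.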


| Reference: | Using HAProxy as a reverse proxy for AWS microservices from our JCG partner Jeff Davis at the Jeff’s SOA Ruminations blog. |