Mastering Program Flow: Understanding Thread Execution

Programs may seem magical, churning out results with a click. But behind the scenes, a fascinating dance unfolds. Imagine a bustling city – cars navigate streets, pedestrians cross paths, all in a coordinated flow. This intricate dance is the thread of execution, the very lifeblood of how programs operate.

In this guide, we’ll peel back the layers and unveil the secrets of thread execution. We’ll delve into the world of threads, processors, and how instructions are meticulously carried out, one step at a time. Buckle up, as we unlock the mysteries behind how your programs truly come alive!

1. The Stage is Set – Understanding the Building Blocks

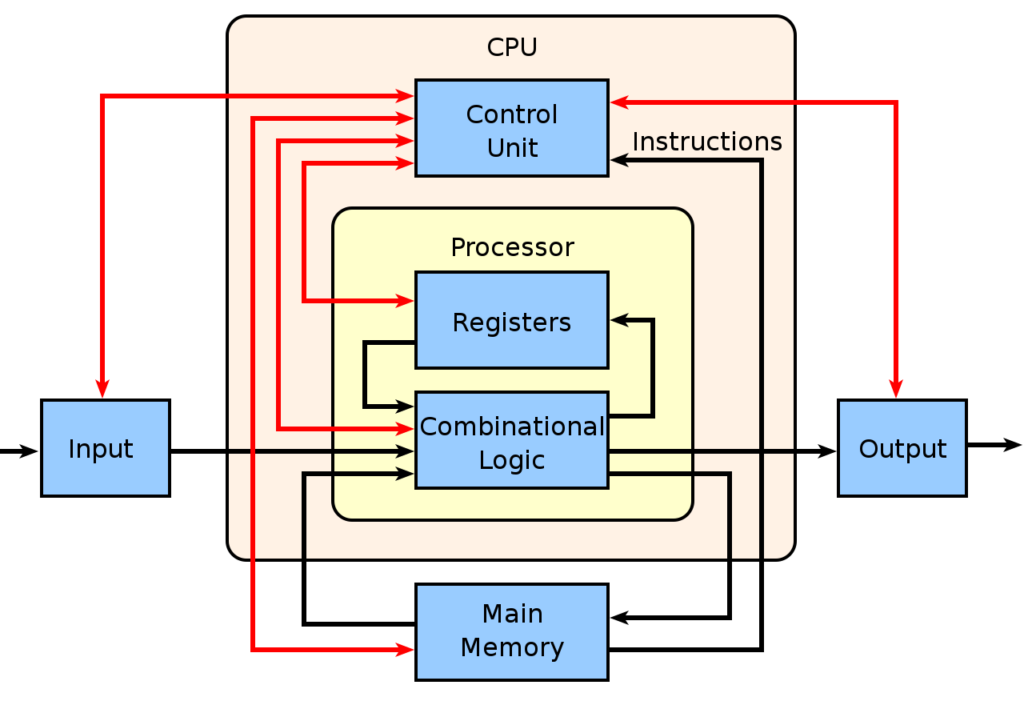

Imagine a computer as a bustling city. To make things happen, you need a central hub coordinating everything – that’s the role of the CPU (Central Processing Unit). Just like the brain in our bodies, the CPU acts as the computer’s control center, carrying out instructions and managing all the computations that make your programs run.

Instructions: The Blueprint for Action

Think of programs like recipes. They consist of a series of steps, or instructions, that the CPU needs to follow in a specific order. By the time they reach the CPU, these instructions are in machine code, the only language the CPU understands directly (code written in a language such as Java is translated into it first), and they tell the CPU exactly what to do: add numbers, compare values, or store data.

Memory: The City’s Storage Units

Now, a city needs warehouses to store things, and a computer has memory for that purpose. Memory is like a giant notepad where the CPU stores both the program’s instructions (the recipe) and the data it needs to work with (the ingredients). There are two main types of memory:

- RAM (Random Access Memory): This is the city’s temporary storage. It’s fast and easily accessible by the CPU, but it loses its contents when the computer is powered off or restarted. This is where the CPU keeps the instructions and data it’s currently working on.

- Storage (Hard Drive, SSD): This is the city’s long-term storage. It’s slower than RAM but holds information even when the computer is turned off. This is where programs and data are stored permanently.

The CPU constantly interacts with memory, fetching instructions one by one, decoding them (understanding what they mean), and then executing them (carrying out the operation). This cycle continues until the entire program is finished.

2. The Actors Take Center Stage – Threads and Processes

In our journey to understand program flow, we’ve explored the CPU, instructions, and memory – the essential building blocks. But what if a program needs to do multiple things at once? Enter the world of threads and processes!

Threads: The Mini-Me of Programs

Imagine a program as a restaurant. A single-threaded program would be like one chef handling all the orders: taking requests, cooking, and plating. It works, but it can be slow. This is where multiple threads come in. Threads are like mini-chefs within the program, each handling its own task while the others keep working.

Here’s a simple code snippet (Java) to illustrate the concept:

    import java.util.Scanner;

    public class RestaurantThreads {

        static class CookFoodThread extends Thread {
            private String order;

            public CookFoodThread(String order) {
                this.order = order;
            }

            @Override
            public void run() {
                try {
                    // Simulate cooking time
                    Thread.sleep(2000); // 2 seconds
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
                System.out.println("Cooked " + order);
            }
        }

        public static void main(String[] args) {
            Scanner scanner = new Scanner(System.in);
            while (true) {
                System.out.print("Enter order (or 'q' to quit): ");
                String order = scanner.nextLine();
                if (order.equalsIgnoreCase("q")) {
                    break;
                }
                // Create a new thread to cook the order
                Thread cookThread = new CookFoodThread(order);
                cookThread.start();
            }
        }
    }

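In this example, two kinds of threads are at work:

- The main thread: This thread runs the main method and keeps taking new orders from the user.

- A CookFoodThread per order: Each time an order is entered, a new thread is started to cook it. It prints its message after the simulated cooking time and then finishes.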

By using multiple threads, the program can take orders and cook food simultaneously, improving efficiency.

Processes: Heavyweight Champions

Now, a single restaurant can have multiple chefs (threads), but it’s still one establishment (process). A process is a self-contained program execution instance. It has its own memory space, resources, and can run independently. Imagine a food court with multiple restaurants (processes), each with its own kitchen (memory space) and staff (threads).

The Relationship Between Threads and Processes

One process can have multiple threads, but one thread can only belong to one process. Threads share the memory space of their parent process, making communication and data sharing easier. Processes, on the other hand, are isolated from each other, which improves stability (a crashing process usually won’t take others down with it).
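To make the shared memory idea concrete, here is a minimal sketch in which several threads of one process write into the same list. The class name SharedKitchenDemo and the method cookAll are made up for this illustration, and a thread-safe list (CopyOnWriteArrayList) is assumed so that the concurrent writes don’t conflict.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical demo class: several threads share one list inside one process.
class SharedKitchenDemo {

    // Starts one thread per order; every thread appends to the same list.
    static List<String> cookAll(List<String> orders) {
        List<String> cooked = new CopyOnWriteArrayList<>(); // safe for concurrent writes
        Thread[] chefs = new Thread[orders.size()];
        for (int i = 0; i < chefs.length; i++) {
            String order = orders.get(i);
            chefs[i] = new Thread(() -> cooked.add("Cooked " + order));
            chefs[i].start();
        }
        for (Thread chef : chefs) {
            try {
                chef.join(); // wait until this chef has finished
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Interrupted while waiting", e);
            }
        }
        return cooked;
    }

    public static void main(String[] args) {
        List<String> cooked = cookAll(List.of("pasta", "soup", "salad"));
        // The order of the entries may differ from run to run.
        System.out.println(cooked.size() + " dishes cooked: " + cooked);
    }
}
```

No data had to be copied between the threads: they all see the same list because they live in the same process. Separate processes would need an explicit channel, such as a file or a socket, to exchange the same information.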

Multithreading: The Power of Many

Multithreading is the technique of using multiple threads within a single process. It allows a program to handle multiple tasks concurrently, leading to several benefits:

- Improved Performance: By utilizing multiple threads, a program can potentially do more work in the same amount of time.

- Increased Responsiveness: If a program is waiting for something (like user input or data from a network), other threads can continue working, keeping the program responsive.

However, multithreading can introduce complexity and requires careful management to avoid conflicts between threads accessing shared data.

So, threads are like mini-chefs within a program, processes are independent restaurants, and multithreading lets you leverage the power of both for a more efficient and responsive program flow.

3. The Choreography Unfolds – Following the Thread of Execution

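The CPU keeps its place in a program with the program counter (PC), a small register that holds the memory address of the next instruction. The program counter plays a crucial role in the CPU’s core operation cycle, often referred to as the fetch-decode-execute cycle. This cycle is the heart of program execution, where instructions are retrieved, understood, and carried out: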

1. Fetch:

- The CPU uses the program counter (PC) to identify the address of the next instruction in memory.

- It sends a request to memory to retrieve that instruction.

- The retrieved instruction is loaded into a dedicated register within the CPU.

2. Decode:

- The CPU analyzes the retrieved instruction to understand its purpose. This includes decoding the operation it needs to perform (addition, comparison, etc.) and identifying any operands involved (data to be used).

3. Execute:

- Based on the decoded instruction, the CPU performs the specified operation. This could involve calculations, data manipulation, or control flow changes (like jumping to another instruction).

- After execution, the program counter (PC) is typically incremented to point to the next instruction in sequence, unless the instruction itself specifies a jump or branch.

This fetch-decode-execute cycle repeats continuously until the entire program is finished. The PC acts as a guide, ensuring the CPU processes instructions in the correct order.
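The cycle above can be sketched as a toy simulator. Everything in it is an assumption made for illustration: the class TinyCpu, the four opcodes, and the single accumulator register do not correspond to any real processor, and a real CPU does all of this in hardware.

```java
// Hypothetical toy CPU: each instruction is a pair {opcode, operand}.
class TinyCpu {

    static final int LOAD = 0;             // acc = operand
    static final int ADD = 1;              // acc = acc + operand
    static final int JUMP_IF_NOT_ZERO = 2; // if acc != 0, continue at index operand
    static final int HALT = 3;             // stop and report acc

    static int run(int[][] program) {
        int pc = 0;  // program counter: index of the next instruction
        int acc = 0; // accumulator register
        while (true) {
            int[] instruction = program[pc]; // fetch
            int opcode = instruction[0];     // decode: which operation?
            int operand = instruction[1];    // decode: which data?
            int nextPc = pc + 1;             // default: next instruction in sequence
            switch (opcode) {                // execute
                case LOAD:
                    acc = operand;
                    break;
                case ADD:
                    acc += operand;
                    break;
                case JUMP_IF_NOT_ZERO:
                    if (acc != 0) {
                        nextPc = operand;    // a jump overrides the default
                    }
                    break;
                case HALT:
                    return acc;
                default:
                    throw new IllegalArgumentException("Unknown opcode " + opcode);
            }
            pc = nextPc;                     // update the PC after execution
        }
    }

    public static void main(String[] args) {
        int[][] sum = { {LOAD, 5}, {ADD, 7}, {HALT, 0} };
        System.out.println(run(sum)); // prints 12

        // Counts down from 3 to 0 by jumping back to instruction 1.
        int[][] countdown = { {LOAD, 3}, {ADD, -1}, {JUMP_IF_NOT_ZERO, 1}, {HALT, 0} };
        System.out.println(run(countdown)); // prints 0
    }
}
```

The countdown program shows why the PC matters: the jump instruction simply writes a new value into it, and the next fetch picks up from there.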

Juggling Acts: The Role of Context Switching in Multithreading

When a program utilizes multiple threads, things get more interesting. The operating system’s scheduler has to share the CPU among these threads efficiently. This is where context switching comes into play.

- Context switching involves saving the state of the currently running thread (including the program counter value and other register contents) and loading the state of another thread.

- The CPU then switches to executing the other thread, continuing from where it left off.

- This process allows a single CPU core to appear as if it’s executing multiple threads simultaneously, even though it only runs one thread at any given instant.

By rapidly switching context between threads, a single core provides the illusion of parallel execution, leading to improved program responsiveness. On a multi-core CPU, several threads can also run truly in parallel, one per core.
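As a rough illustration of the save-and-resume idea, the sketch below runs two pretend threads in turns on one simulated core. The names ContextSwitchDemo, Task, and schedule are invented here, and the round-robin policy with a fixed time slice is just one simple assumption; real schedulers are far more sophisticated.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical round-robin scheduler for one simulated core.
class ContextSwitchDemo {

    // The saved state of one pretend thread.
    static class Task {
        final String name;
        final int totalSteps;
        int pc = 0; // saved program counter: the next step to run

        Task(String name, int totalSteps) {
            this.name = name;
            this.totalSteps = totalSteps;
        }

        boolean finished() {
            return pc >= totalSteps;
        }
    }

    // Gives each task up to timeSlice steps per turn and returns the order of execution.
    static List<String> schedule(List<Task> tasks, int timeSlice) {
        if (timeSlice < 1) {
            throw new IllegalArgumentException("timeSlice must be at least 1");
        }
        List<String> trace = new ArrayList<>();
        boolean workLeft = true;
        while (workLeft) {
            workLeft = false;
            for (Task task : tasks) {
                // Resume the task from its saved program counter.
                for (int i = 0; i < timeSlice && !task.finished(); i++) {
                    trace.add(task.name + ":" + task.pc);
                    task.pc++; // the updated value stays saved in the Task object
                }
                if (!task.finished()) {
                    workLeft = true; // it will get another turn later
                }
            }
        }
        return trace;
    }

    public static void main(String[] args) {
        List<Task> tasks = List.of(new Task("A", 3), new Task("B", 2));
        System.out.println(schedule(tasks, 2)); // prints [A:0, A:1, B:0, B:1, A:2]
    }
}
```

Task A is paused after two steps and later continues at step 2 rather than starting over, which is exactly what the saved program counter makes possible.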

Interruptions: External Influences on Program Flow

While the fetch-decode-execute cycle forms the core of program execution, there can be external influences that alter the flow. Interrupts are signals sent to the CPU from external devices (like timers) or hardware events (like keyboard input).

- When an interrupt occurs, the CPU temporarily halts the current program’s execution and saves its state.

- It then handles the interrupt by executing a specific set of instructions associated with that interrupt.

- Once the interrupt is serviced, the CPU restores the saved state of the program it was running and resumes execution from where it left off.

Interrupts play a vital role in ensuring the smooth operation of a computer system, allowing it to respond to external events and maintain responsiveness.
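The three steps above (save, handle, restore) can be mimicked in a few lines. This is only a toy model with invented names (InterruptDemo, run, interruptBeforeStep); real interrupts are raised by hardware and handled by the CPU and operating system, not by a loop checking a condition.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model: a program of numbered steps, interrupted once along the way.
class InterruptDemo {

    // Runs programSteps steps; an interrupt arrives just before step interruptBeforeStep.
    static List<String> run(int programSteps, int interruptBeforeStep) {
        List<String> log = new ArrayList<>();
        int pc = 0; // program counter of the running program
        while (pc < programSteps) {
            boolean interruptPending = (pc == interruptBeforeStep);
            if (interruptPending) {
                int savedPc = pc;             // 1. save the program's state
                log.add("interrupt handler"); // 2. run the handler's instructions
                pc = savedPc;                 // 3. restore the state and resume
            }
            log.add("program step " + pc);
            pc++;
        }
        return log;
    }

    public static void main(String[] args) {
        // prints [program step 0, interrupt handler, program step 1, program step 2]
        System.out.println(run(3, 1));
    }
}
```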

4. Mastering the Flow – Optimizing Your Code

Understanding the intricate dance of threads within your program isn’t just about satisfying your inner computer geek; it’s a superpower for code optimization! Here’s how:

1. Identifying Bottlenecks:

By knowing how threads interact with the CPU and memory, you can pinpoint sections of your code where thread scheduling or data access might be creating bottlenecks. Keeping the fetch-decode-execute cycle in mind helps you spot places where instructions stall while waiting for data from memory or for a result from another thread, potentially slowing things down.

2. Leveraging Parallel Processing Power:

Multithreading allows your program to tackle multiple tasks concurrently, improving performance. But it’s a double-edged sword. If threads compete for the same resources or data, chaos can ensue. Understanding thread execution helps you design code that efficiently utilizes the processing power of multiple cores, ensuring threads don’t get stuck waiting for each other.
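One way to keep threads from waiting on each other is to give each of them an independent slice of the work. The sketch below sums the numbers 1 to n that way, using a fixed thread pool from java.util.concurrent. The class ParallelSumDemo and the method parallelSum are illustrative names, and sizing the pool to the number of available cores is a common starting point rather than a rule.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical demo: each worker sums its own chunk, so no data is shared while working.
class ParallelSumDemo {

    static long parallelSum(int n, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<Future<Long>> parts = new ArrayList<>();
            int chunk = (n + workers - 1) / workers; // chunk size, rounded up
            for (int w = 0; w < workers; w++) {
                final int from = w * chunk + 1;
                final int to = Math.min(n, (w + 1) * chunk);
                parts.add(pool.submit(() -> {
                    long sum = 0;
                    for (int i = from; i <= to; i++) {
                        sum += i;
                    }
                    return sum;
                }));
            }
            long total = 0;
            for (Future<Long> part : parts) {
                total += part.get(); // wait for this chunk's result
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while summing", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("A worker failed", e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println(parallelSum(1_000_000, cores)); // prints 500000500000
    }
}
```

The only point where the threads meet is when the partial results are combined at the end, so there is nothing for them to fight over in the meantime.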

3. Synchronization Strategies:

In multithreaded environments, ensuring data consistency is crucial. Imagine two threads trying to update the same bank account balance at the same time – disaster! Understanding thread execution empowers you to implement effective synchronization mechanisms (like mutexes or semaphores) to control access to shared data and prevent race conditions, where the result depends on the unpredictable timing of threads touching the same data. A mutex lets only one thread at a time into a protected section of code, while a semaphore admits up to a fixed number of threads at once.
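Here is a minimal sketch of the bank account scenario, using Java’s synchronized keyword as the mutual-exclusion mechanism. The class and method names (BankAccountDemo, Account, depositConcurrently) are made up for the example.

```java
// Hypothetical demo: many threads deposit into one account without losing updates.
class BankAccountDemo {

    static class Account {
        private long balance;

        // synchronized: only one thread at a time may run this on a given Account.
        synchronized void deposit(long amount) {
            balance += amount;
        }

        synchronized long getBalance() {
            return balance;
        }
    }

    // Each thread deposits 1 unit depositsPerThread times; returns the final balance.
    static long depositConcurrently(int threads, int depositsPerThread) {
        Account account = new Account();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < depositsPerThread; i++) {
                    account.deposit(1);
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Interrupted while waiting", e);
            }
        }
        return account.getBalance();
    }

    public static void main(String[] args) {
        System.out.println(depositConcurrently(4, 10_000)); // prints 40000
    }
}
```

Without synchronized on deposit, two threads could read the same old balance and both write back old balance plus one, so some deposits would silently vanish and the final total could come out lower than 40000.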

4. Avoiding Deadlocks:

Deadlocks occur when two or more threads are waiting for each other to release resources, creating a stalemate. Understanding how context switching works and the potential pitfalls of thread interaction helps you design code that avoids deadlock scenarios.
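One widely used way to avoid this stalemate is to make every thread acquire locks in the same order. The sketch below assumes each account has a unique id and always locks the lower id first. The names LockOrderingDemo, transfer, and runOpposingTransfers are invented for the illustration, and lock ordering is only one of several possible strategies.

```java
// Hypothetical demo: two threads transfer money in opposite directions without deadlocking.
class LockOrderingDemo {

    static class Account {
        final int id; // assumed unique; used only to decide the locking order
        long balance;

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    static void transfer(Account from, Account to, long amount) {
        // Always lock the account with the smaller id first, whatever the direction.
        Account first = from.id < to.id ? from : to;
        Account second = from.id < to.id ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    // Thread 1 moves 2 units a->b per round, thread 2 moves 1 unit b->a per round.
    static long[] runOpposingTransfers(int rounds) {
        Account a = new Account(1, 1000);
        Account b = new Account(2, 1000);
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) transfer(a, b, 2);
        });
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) transfer(b, a, 1);
        });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting", e);
        }
        return new long[] { a.balance, b.balance };
    }

    public static void main(String[] args) {
        long[] balances = runOpposingTransfers(100);
        System.out.println(balances[0] + " " + balances[1]); // prints 900 1100
    }
}
```

If transfer instead locked from first and to second, thread 1 could hold account a while waiting for b at the same moment thread 2 holds b while waiting for a, and neither would ever proceed.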

5. Choosing the Right Approach:

Not every program needs multithreading. Sometimes, a well-optimized single-threaded approach might be more efficient than the complexity of threads. Knowing how thread execution works allows you to make informed decisions about when multithreading is beneficial and when it’s best to stick with a simpler approach.

5. Craving More Knowledge? Dive Deeper!

The world of thread execution is vast and fascinating. Here are some resources to fuel your learning journey:

- Books:

- “Concurrent Programming” by Hennessy and Patterson (classic text)

- “Java Concurrency in Practice” by Brian Goetz et al. (Java-specific)

- Online Courses:

- Coursera: “Concurrent Programming” by University of Illinois at Urbana-Champaign

- edX: “Introduction to Concurrency” by MIT

- Websites:

- The Java™ Tutorials: Concurrency (https://docs.oracle.com/javase/tutorial/essential/concurrency/)

- Concurrent Programming, International Computer Science Series (Amazon listing: https://www.amazon.com/Concurrent-Programming-International-Computer-Science/dp/0201544172)

6. Conclusion

In conclusion, understanding the thread of execution equips you to be a code optimization ninja. By knowing how the CPU executes instructions and manages threads, you can identify bottlenecks, leverage parallel processing power, and write thread-safe code. This knowledge empowers you to make informed decisions about when multithreading benefits your program and when a simpler approach reigns supreme. So, keep learning and unlock the full potential of your code!