Dynamics of building a self-organising network logic

To build a “knowledge & intelligent system”, I have come to believe that we need to build an organic-based system that develops intelligence naturally, rather than a silicon-based system that needs rigid pre-coded algorithms that do not scale and have very little room to manoeuvre. There are many advantages to building it as an “organic-based” system that works with data-realised algorithms that drive logic-based intelligence. These advantages become evident as we examine the dynamics of building a “self-organising network logic”.

In the previous blog, I wrote that we need to trigger the creation of a self-organising network from the behaviour of the filtered data. This network needs to be built using heterogeneous, adaptable building blocks that can adapt themselves to perform different functions and achieve a certain logic when they come together into a network. The obvious questions that arise are: What is the bare minimum step that the building block needs to achieve? What does adaptable mean? What should be the characteristics of such a building block? Is it even possible for us to build such a building block?

We can learn from the mitochondrial network to understand how a network is created using mitochondria. If we can understand what makes the mitochondria an “adaptable building block”, we can build a working model that works on the same principles. In short, the cristae in mitochondria are remodelled due to various factors. This remodelling affects the mitochondrial matrix volume present within the cristae, which controls the other sub-organelles present in the matrix and so affects the behaviour of the mitochondria. But, looking beyond the specifics at the principle behind it, we find that this operation is similar to the creation of a molecular structure: the mitochondria is also able to alter its form and structure, and hence its own working, based on a set of input parameters represented by proteins and enzymes. This makes it adaptable, and the functions it takes on through this variation are the basic functions the building block achieves.

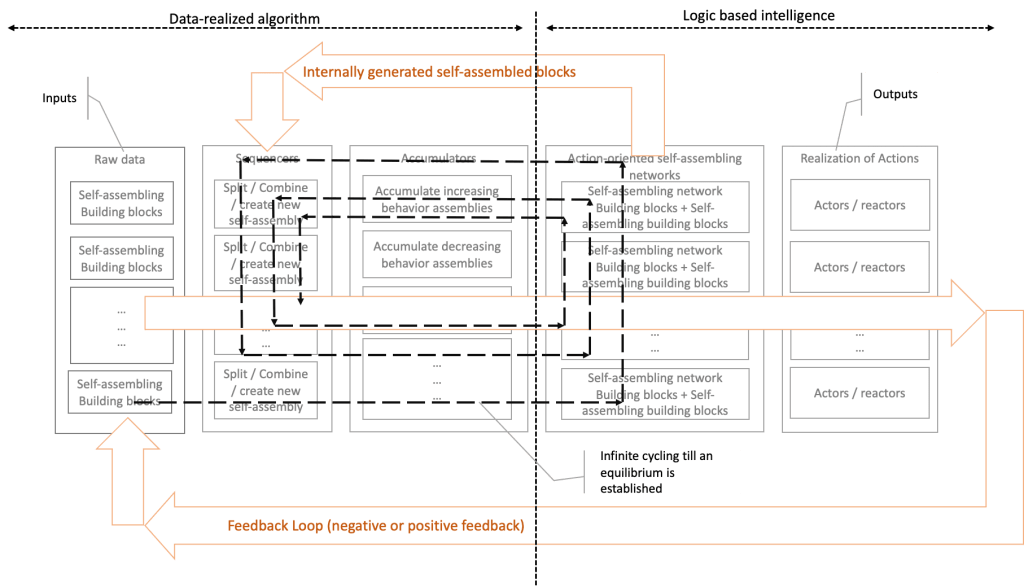

But we can see this in a different manner. We can abstract it further and look at the “various parameters” that affect the remodelling of the cristae as “data”, the “cristae remodelling” itself as similar to the molecular structure formed, and the network itself as the sequencing and behaviour of that structure. We find that this process is similar to the original data-realised algorithm, namely a data-effected structure leading to a sequencing and triggering a certain behaviour, which leads to the formation of a network, which in turn creates another data-effected structure, and so on. This establishes a recursive loop implemented using form-variations, shown below:

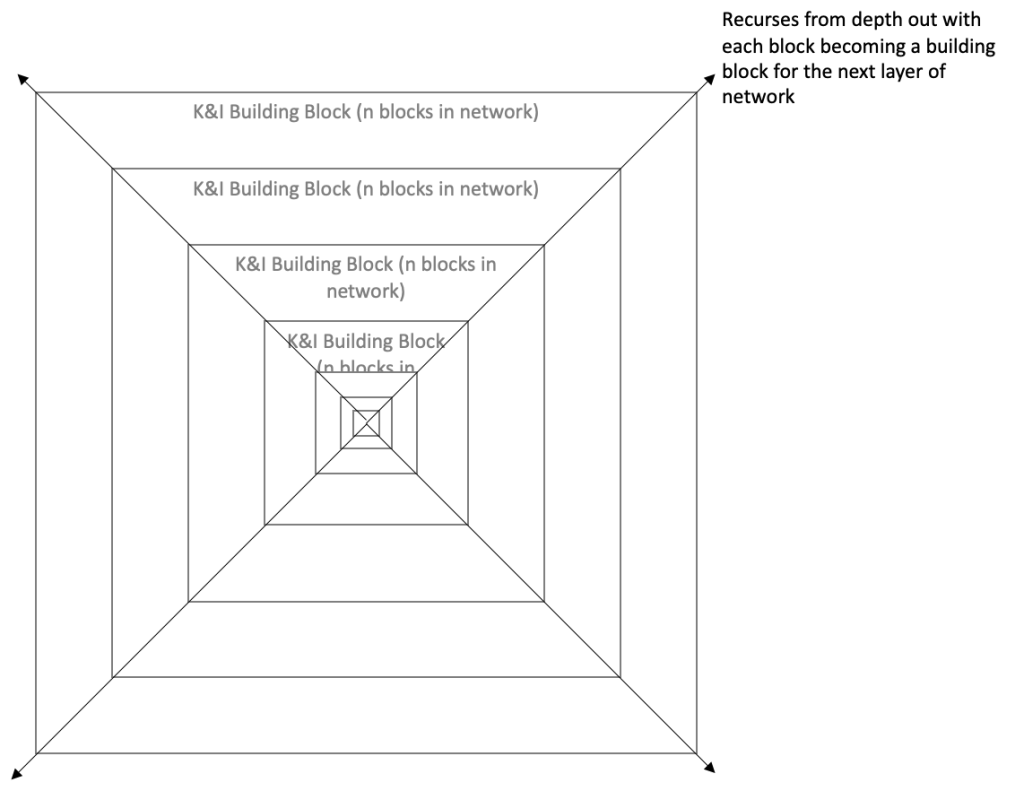
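The loop in the figure can be sketched in code as a fixed-point iteration: data realises a form, the form drives a behaviour that produces new data, and the cycle repeats until the form stops changing. This is only an illustrative sketch; the functions `realise_form` and `network_behaviour` and their toy rules (sorting distinct values, halving them) are assumptions made up for the example and say nothing about how a real organic system computes.

```python
def realise_form(data):
    """Hypothetical 'data effects structure' step: the form is the sorted set of distinct inputs."""
    return tuple(sorted(set(data)))


def network_behaviour(form):
    """Hypothetical 'network produces new data' step: every element of the form is halved."""
    return [x // 2 for x in form]


def run_until_equilibrium(data, max_rounds=100):
    """Repeat form -> behaviour -> new form until the form no longer changes."""
    form = realise_form(data)
    for rounds in range(1, max_rounds + 1):
        new_form = realise_form(network_behaviour(form))
        if new_form == form:
            # The new data invokes no change: the loop has reached equilibrium.
            return form, rounds
        form = new_form
    raise RuntimeError("no equilibrium reached within max_rounds")


if __name__ == "__main__":
    final_form, rounds = run_until_equilibrium([8, 5, 3])
    print(final_form, rounds)
```

The stopping test is the point of the sketch: the loop does not end after a fixed number of steps, but when one more pass leaves the structure unchanged.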

This recursion ends when the proteins created do not invoke any change in the behaviour of any self-organising network, thus forming an equilibrium in the system. From this we find that, while we call the “self-organising network” logic-based intelligence, it is driven by the same concepts as the data-realised algorithm. By effectively applying the data-realised algorithm, the data-realised algorithm has been converted into a logic-based intelligence for the given context.

What makes such a system really effective is its recursive nature. This system can recurse in all directions. In the above, I have only described how a single flow from raw data to self-organising network recurses within itself and establishes equilibrium to create a single knowledge & intelligence. But we can in turn look at this whole “recursive piece” as a single building block (I will call it a K&I building block). Using many such K&I building blocks, another, higher network can be formed. If coherence and equilibrium can be established in this network, then the network becomes a higher level of intelligence over the lower levels. This can recurse depth-wise.

This effectively mimics the infinite-to-finite character that is present in nature as I have indicated in my blog on the missing depth dimension.

In short, we find that “organic-based processes” have evolved to effectively convert data present as energy-variations to form-variations and vice versa. This has induced a natural occurrence of knowledge and intelligence, whose output is obtained by converting the knowledge & intelligence to action. Different types of networks form different types of intelligence and knowledge, resulting in different outputs. Even more interesting here is the way fuzziness is achieved in such a system. Given that “data” drives the “form”, fuzziness can be represented by the variation in the strength of the form based on the volume of data present. If the form is weak, i.e., when not enough data is present to create a firm form, then subsequent data can very easily modify the weak form according to its needs. Whereas if enough data is present to create a firm form, the form is hardened and continuously maintains the network and the subsequent logic. The weak form gives us the fuzziness in the logic formed. To further appreciate the beauty of such a system, we need to understand more of its dynamics compared to computers.
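Before that comparison, the weak-form versus firm-form idea can be made concrete with a small sketch. Here a hypothetical `Form` class keeps a running mean of the data it has absorbed, and its `strength` is simply the count of data points seen so far. Both the class and the running-mean rule are assumptions chosen for illustration, not a model of any biological mechanism.

```python
from dataclasses import dataclass


@dataclass
class Form:
    """Hypothetical form whose firmness grows with the volume of data absorbed."""
    value: float = 0.0
    strength: int = 0  # number of data points absorbed so far

    def absorb(self, datum: float) -> None:
        # Running mean: a new datum moves the form by 1/strength of the gap,
        # so a weak form shifts a lot and a firm form barely moves.
        self.strength += 1
        self.value += (datum - self.value) / self.strength


if __name__ == "__main__":
    weak, firm = Form(), Form()
    for _ in range(2):
        weak.absorb(1.0)   # little data: weak form
    for _ in range(99):
        firm.absorb(1.0)   # lots of data: firm form

    # The same new datum reshapes the weak form far more than the firm one.
    weak.absorb(4.0)
    firm.absorb(4.0)
    print(weak.value, firm.value)
```

In this toy version the fuzziness is just the size of the shift a single new datum can cause, which shrinks as more data hardens the form.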

In computers, “an instruction” is the bare minimum step. Multiple instructions are combined in various forms to achieve a logic. It should be recognised that a processor executes its instructions sequentially; hence we have the concept of threads, which are time-sliced to achieve the illusion of parallel processing. When there is only one processor present, it is still only sequential processing. The illusion of multi-threading is achieved because the processor interleaves the instructions of multiple sequences. The instructions themselves are rigid and pre-coded into the hardware of the processor to trigger a certain sequence of operations to achieve a result. Thus, when interleaving occurs, the isolation between the various algorithms is maintained. For example, an “AND” instruction is pre-wired as a set of logic gates working in unison to give the correct result. No change to this pre-wired result is possible with that given circuit. A “NOR” instruction is another such pre-wired set of logic gates. Interleaving “AND” and “NOR” instructions means that either the “AND” pre-wired logic executes or the “NOR” pre-wired logic executes; there is no intermediate state of execution. Inherently, these instructions trigger nothing else. They are static in nature. In such a pre-wired, circuit-driven approach, there needs to be an external source to power the execution of the instructions and control the speed of execution of the logic. What is coded is what is got, nothing more.
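The interleaving described above can be illustrated with a minimal sketch in which two instruction sequences share one simulated processor. The names `instruction_stream` and `run_on_single_processor` are hypothetical, and the round-robin policy is an assumption chosen for simplicity; real schedulers are considerably more involved.

```python
from collections import deque


def instruction_stream(name, count):
    """Yield the labelled instructions of one sequence, one at a time."""
    for i in range(count):
        yield f"{name}{i}"


def run_on_single_processor(streams):
    """Round-robin time slicing: exactly one instruction executes per step."""
    trace = []
    queue = deque(streams)
    while queue:
        stream = queue.popleft()
        try:
            trace.append(next(stream))  # execute one whole instruction
        except StopIteration:
            continue                    # this sequence has finished
        queue.append(stream)            # give the next sequence its turn
    return trace


if __name__ == "__main__":
    trace = run_on_single_processor(
        [instruction_stream("A", 3), instruction_stream("B", 2)]
    )
    print(trace)
```

The resulting trace is a single sequential list: instructions from the two sequences alternate, but each one runs whole and in isolation, never as a blend of the two.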

This is distinctly different from what happens in logic created using a self-organising network. Here, an adaptable network represents logic, as opposed to a sequential string of pre-wired instructions. The speed of execution in this kind of system is controlled by the presence and volume of data rather than by some externally maintained clock. If the specific data that triggers a behaviour is available, then the part of the network related to that data stream is activated; otherwise it is not. Here, parallel takes on a totally different meaning. If multiple streams of data that trigger multiple behaviours affecting the same network are present, they activate multiple parts of the same network. But, given that it is the same network, the logic of the network reacts as a whole to all the streams of data, which implies that an automatically combined logic is executed rather than an interleaving of un-mixable rigid instructions. If, on the other hand, the data streams trigger behaviours that affect different networks, then the networks execute their logic in parallel without affecting each other. This kind of behaviour is what we need to implement adaptable intelligence that can adapt itself to relevant situations.
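As a rough sketch of this data-driven activation, the snippet below models each network as a mapping from kinds of data to the parts they trigger. The `Network` class, the `step` function, and the stream and part names are all hypothetical, introduced only for this example; the sketch shows which parts wake up for a given set of available data, not how the combined logic itself would be computed.

```python
class Network:
    """Hypothetical network: each kind of data triggers one named part."""

    def __init__(self, name, triggers):
        self.name = name
        self.triggers = triggers  # maps data kind -> part of the network

    def react(self, available):
        """Return the parts activated by the data currently available."""
        return {
            part
            for kind, part in self.triggers.items()
            if available.get(kind, 0) > 0
        }


def step(networks, available):
    """No clock: a network takes part only if some of its data is present."""
    activity = {}
    for network in networks:
        parts = network.react(available)
        if parts:
            activity[network.name] = parts
    return activity


if __name__ == "__main__":
    net_a = Network("net_a", {"stream_1": "part_x", "stream_2": "part_y"})
    net_b = Network("net_b", {"stream_3": "part_z"})

    # Two streams hit the same network: both parts are active together.
    print(step([net_a, net_b], {"stream_1": 3, "stream_2": 1}))
    # Streams hit different networks: each reacts independently.
    print(step([net_a, net_b], {"stream_1": 1, "stream_3": 2}))
```

Nothing in `step` advances on a timer. A network with no relevant data simply does not appear in the result, which is the sense in which the presence of data, rather than a clock, drives execution.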

Another aspect to be noted here is related to the power driving such a system. As I have indicated before, computers with pre-coded logic need external power sources to drive the execution. Here, however, we find that the inherent energy of the building blocks is used, driven by the amount of data present. That is, in molecular structure formation, the variations in the nuclear energy or the energy of the data drive the structure formation; in the mitochondrial network, the energy generated by proteins or the energy from ATP drives the network formation; and so on. The advantage of such energy utilisation is that just enough energy for the required action is generated and used.

Published on Java Code Geeks with permission by Raji Sankar, partner at our JCG program. See the original article here: Dynamics of building a self-organising network logic. Opinions expressed by Java Code Geeks contributors are their own.