Data-realised algorithms vs logic-based algorithms

In my previous blog, I mentioned that it is better to leave data as it is, in the various formats in which it exists, instead of periodically collecting it and converting it into a rigid format, which loses information present in the data. The obvious question that immediately arises is: “We all know the data is already there! But how can we use the information and knowledge present in it to create intelligence?” As I mentioned, this is where we need to start thinking about “data-realised algorithms” rather than always relying on logic-based algorithms.

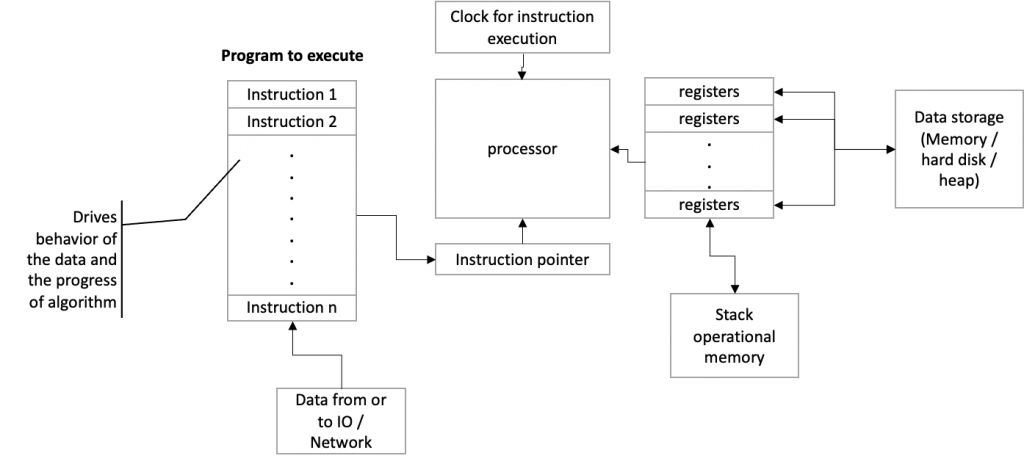

To understand what a data-realised algorithm is, we need to first understand what a logic-based algorithm is first. If we look at how computers work, we find that the processor takes a precedence. A very high level architecture is shown as below:

Here, the set of instructions is created first. The processor executes these instructions one after another. Data is given to these instructions as input through registers, which can be loaded from an operational stack or a data storage location; the instructions, in turn, produce output data in registers, which is stored back into data storage locations or operational stacks. In an application written for such an architecture, the logic present in the sequence of instructions drives how the state of the data flows. So logic comes first and data is subsequent: sometimes stored, sometimes thrown away. Such applications work well when the data needs to follow a well-defined state flow and is created and modified by this application, or by a closely related application from which the data is imported.
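To make the “logic first, data subsequent” flow concrete, here is a minimal Python sketch of a toy machine. The instruction names (`LOAD`, `ADD`, `MUL`, `PUSH`, `STORE`), the two registers and the dictionary standing in for data storage are hypothetical simplifications for illustration, not a model of any real processor. The point is that the program, and therefore the logic, is fixed before any data arrives; the data only moves through registers and the stack as the instructions dictate.

```python
# A toy "logic first" machine: the instruction sequence is fixed in advance,
# and data only flows through registers and a stack as the program dictates.
# The instruction names are hypothetical and do not model a real instruction set.

def run(program, memory):
    """Execute a fixed instruction sequence against a dict of memory cells."""
    memory = dict(memory)              # work on a copy of the data storage
    registers = {"r0": 0, "r1": 0}
    stack = []                         # stands in for the operational stack
    for op, *args in program:          # instructions run one after another
        if op == "LOAD":               # data storage -> register
            reg, addr = args
            registers[reg] = memory[addr]
        elif op == "ADD":              # r_dst = r_dst + r_src
            dst, src = args
            registers[dst] += registers[src]
        elif op == "MUL":              # r_dst = r_dst * r_src
            dst, src = args
            registers[dst] *= registers[src]
        elif op == "PUSH":             # register -> operational stack
            stack.append(registers[args[0]])
        elif op == "STORE":            # register -> data storage
            reg, addr = args
            memory[addr] = registers[reg]
        else:
            raise ValueError(f"unknown instruction: {op}")
    return memory, stack

# The logic (a + b) * b is decided before any data is seen.
PROGRAM = [
    ("LOAD", "r0", "a"),
    ("LOAD", "r1", "b"),
    ("ADD", "r0", "r1"),
    ("MUL", "r0", "r1"),
    ("PUSH", "r0"),
    ("STORE", "r0", "result"),
]

if __name__ == "__main__":
    mem, stk = run(PROGRAM, {"a": 2, "b": 3})
    print(mem["result"], stk)  # (2 + 3) * 3
```

Running it with different inputs changes the result but never the logic: `(a + b) * b` is computed whatever the data looks like.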

When we look at a “knowledge and intelligence system”, which is what an AI is, the data contains the knowledge that has to drive the intelligence or logic to be created. For such a system, the only instructions that can be written are those of an observer that can retrieve this “knowledge in the data”. The subsequent logic that adapts the knowledge as intelligence to a situation needs to be driven directly by the data of that situation and the derived knowledge. For this type of “application” (I will still call it an application), data has to take precedence and logic has to be created from data. We try to do this by creating what we call a “learning logic”. But a learning logic is also a “logic first, data subsequent” application. How do we know what to learn from the data if we do not know the type of knowledge present in it? Hence we end up forcing our own inherent logic, or an expert’s opinion, onto the data and missing out on knowledge by excluding data as outliers. We have not learnt all the knowledge present in the data; we have learnt the logic we expected to learn from it, which is no longer knowledge or intelligence.
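The outlier problem can be illustrated with a small sketch. It assumes, purely for illustration, that the “learning logic” is an ordinary least-squares line fit and that any point whose residual exceeds `k` standard deviations is rejected; both the model form and the hypothetical `k=2.0` cutoff are decided before the data is looked at. Whatever the rejected point might have been telling us is simply discarded.

```python
# A "learning logic" that is still logic first: the model form (a straight
# line) and the outlier rule (residual beyond k standard deviations) are both
# fixed before the data is examined. Both choices are illustrative assumptions.
from statistics import mean, pstdev

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct x values")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def learn_with_outlier_rejection(xs, ys, k=2.0):
    """Fit a line, drop points whose residual exceeds k * stdev, then refit."""
    slope, intercept = fit_line(xs, ys)
    residuals = [y - (slope * x + intercept) for x, y in zip(xs, ys)]
    sigma = pstdev(residuals)
    kept, discarded = [], []
    for x, y, r in zip(xs, ys, residuals):
        (discarded if abs(r) > k * sigma else kept).append((x, y))
    if len(kept) < 2:
        raise ValueError("too few points left after rejection")
    kx, ky = zip(*kept)
    return fit_line(kx, ky), discarded

if __name__ == "__main__":
    xs = list(range(10))
    ys = [2 * x for x in range(9)] + [60]   # the last reading breaks the pattern
    (slope, intercept), dropped = learn_with_outlier_rejection(xs, ys)
    print(slope, intercept, dropped)        # the reading at x = 9 is dropped
```

Here the last reading is dropped and the refitted line is exactly `y = 2x`; whether that reading was noise or the start of a new behaviour is a question the fixed logic never asks.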

When data takes precedence and drives logic, the algorithm becomes a data-realised algorithm. As I explained in my blog “What is logic in relation to AI?”, logic has to change with data. In a logic-based algorithm, logic remains constant and data adapts itself around the logic, while in a data-realised algorithm, data remains what it is and logic adapts itself around the data. Only then will we be able to gather the knowledge present in the data and start building on that knowledge. But how can logic become an algorithm? How do we create such a system? What is logic in such a system?

As I indicated before, we need to start rethinking our definitions of algorithm and logic. For us, logic is defined as a sequence of instructions that our processor can understand. Hence we keep inventing ever more complex sequences of instructions, calling them algorithms and justifying them in the name of optimisation, performance and so on. We build over them, distribute them across processors, computers and regions, make them accessible across the world, and combine them in many other ways.

But if we really examine the whole thing, even the simplest “sequence of changes in data” becomes logic. If I observe the wind blowing, accumulate the changes I observe in its parameters, such as “direction of blowing”, “speed at which it is blowing” and “rate of change of speed”, and continuously observe a certain repetition in those parameters over time, that repetition becomes logic. Seen from that perspective, the potential of logic is present all over the world; it just needs the correct observer to turn that potential into actual logic. We created sensors as just that, “sense-able devices of single parameters”, rather than as observers. Had we created them as “observers” that “observe the continuous multi-dimensional changes occurring” and produce an output, similar to the way a protein is created, “as a 3D rendering encoding of the knowledge”, possibly as a molecular structure whose bonds, bond strengths and combined atoms indicate the changes detected, we would have realised knowledge from data. What is needed is a molecular structure that is formed repeatably whenever the same logic of changes is detected. For example, a hydrogen bond of the same strength could be established whenever the heat parameters are the same. We would then just have to tap into that “knowledge” as and when required to convert it into intelligence.
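As a rough software analogy for such an observer (not the molecular encoding described above), the sketch below records how several wind parameters change together rather than reporting single readings, then counts recurring windows of change. The `WindReading` type, the tolerances, the window size and the rise/fall/steady discretisation are all hypothetical choices. The same pattern of changes always maps to the same key, loosely mirroring the idea that the same changes should form the same structure, and patterns that repeat are the candidate “logic”.

```python
# A minimal "observer" sketch: instead of reporting single readings, it records
# how several wind parameters change together and counts recurring patterns of
# change. The parameters, tolerances and window size are hypothetical.
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class WindReading:
    direction_deg: float   # wind direction in degrees
    speed_ms: float        # wind speed in metres per second

def trend(delta, tolerance):
    """Classify a change as rising (+1), falling (-1) or steady (0)."""
    if delta > tolerance:
        return 1
    if delta < -tolerance:
        return -1
    return 0

def observe(readings, window=3, dir_tol=5.0, speed_tol=0.5):
    """Turn readings into multi-dimensional change states, then count
    every run of `window` consecutive states."""
    states = []
    prev_d_speed = 0.0  # the first step treats the previous change as zero
    for prev, cur in zip(readings, readings[1:]):
        # wrap the direction difference into [-180, 180)
        d_dir = (cur.direction_deg - prev.direction_deg + 180) % 360 - 180
        d_speed = cur.speed_ms - prev.speed_ms
        accel = d_speed - prev_d_speed        # rate of change of speed
        prev_d_speed = d_speed
        states.append((trend(d_dir, dir_tol),
                       trend(d_speed, speed_tol),
                       trend(accel, speed_tol)))
    return Counter(
        tuple(states[i:i + window]) for i in range(len(states) - window + 1)
    )

def repeated_patterns(patterns, min_count=2):
    """Patterns seen at least `min_count` times: the candidate 'logic'."""
    return {p: n for p, n in patterns.items() if n >= min_count}

if __name__ == "__main__":
    # Regular gusts: speed alternates 5 -> 7 -> 5 m/s with a steady direction.
    gusts = [WindReading(90.0, s) for s in (5.0, 7.0, 5.0, 7.0, 5.0, 7.0, 5.0)]
    for pattern, count in repeated_patterns(observe(gusts)).items():
        print(count, pattern)
```

For a wind that gusts up and down regularly, the two alternating three-step patterns each appear twice and are reported as repeated.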

This allows us to create a system that does not depend on mathematical equations we create or model before knowing the data, yet can still encode knowledge from the data present, which can then be utilised to create intelligence. Varying the data now automatically creates the appropriate knowledge, rather than requiring us to relearn a whole set of mathematical equations with their weights and biases. More importantly, while mathematical equations as we have expressed them in a language are not used in the system, the mathematical concepts are still utilised, only in their original form, i.e., as they are present in nature. Hence the limitations of our understanding of mathematical equations do not become limitations of the AI’s knowledge.

In my view, the foremost need of any AI system, to be thought through and implemented first, is an adaptable representation of knowledge, together with a system that can implement that representation. Only after this should we think about how that knowledge representation can be utilised to create intelligence for a given situation.

Published on Java Code Geeks with permission by Raji Sankar, partner at our JCG program. See the original article here: Data-realised algorithms vs logic-based algorithms. Opinions expressed by Java Code Geeks contributors are their own.