How-To: Spring Boot 2 Web Application with Multiple Mongo Repositories and Kotlin

First of all, a disclaimer: if you’re writing a microservice (which everyone does now, right?) and want it to be idiomatic, you don’t normally use several different data sources in it.

Why? Well, by definition, microservices should be loosely coupled so that they can be independent. Having several microservices write to the same database breaks this principle. Your data can then be changed by several independent actors, possibly in different ways, which makes it really difficult to reason about data consistency. You can also hardly call the services independent, since they all depend on at least one common thing: the shared (and possibly screwed-up) data. There’s a design pattern called Database per Service that addresses this problem by enforcing one service per database. Every microservice then acts as an intermediary between the clients and its data source, and the data can only be changed through the interface that this service provides.

However, is one service per database the same as one database per service? Nope. If you think about it, these are two different constraints.

This means that if we have several databases that are accessed by only one microservice, and any external access to them goes through that service’s interface, the service can still be considered idiomatic. It is still one service per database, though not one database per service.

Also, perhaps you don’t care about your microservices being idiomatic at all. That’s an option too. (That will be on your conscience though.)

So, when would we want to access several databases from the same service? I can think of several reasons:

- The data is too big to be in one database;

- You are using databases as namespaces to just separate different pieces of data that belong to different domains or functional areas;

- You need different access to the databases — perhaps one is mission-critical so you put it behind all kinds of security layers and the other isn’t that important and doesn’t need that kind of protection;

- The databases are in different regions because they are written to by people in different places but need to be read from a central location (or vice versa);

- And anything else, really, that brought this situation about and that you just need to live with.

If your application is a Spring Boot application and you use Mongo as a database, the easiest way to go is to use Spring Data repositories. You just add a dependency on the Spring Data MongoDB starter (we’ll use a Gradle project here as an example).

```kotlin
dependencies {
    implementation("org.springframework.boot:spring-boot-starter-data-mongodb")
    implementation("org.springframework.boot:spring-boot-starter-web")
    implementation("com.fasterxml.jackson.module:jackson-module-kotlin")
    implementation("org.jetbrains.kotlin:kotlin-reflect")
    implementation("org.jetbrains.kotlin:kotlin-stdlib-jdk8")
    annotationProcessor("org.springframework.boot:spring-boot-configuration-processor")
    testImplementation("org.springframework.boot:spring-boot-starter-test")
}
```

Actually, we generated this example project with Spring Initializr, because it’s the easiest way to start a new Spring-based example. We just selected Kotlin and Gradle in the generator settings and added Spring Web Starter and Spring Data MongoDB as dependencies. Let’s call the project multimongo.

Once we’ve created the project and downloaded the sources, we can see that Spring Initializr created an application.properties file by default. I prefer YAML, so we’ll just rename it to application.yml and be done with it.

So. How do we set up access to our default mongo database using Spring Data? Nothing easier. This is what goes into the application.yml.

# possible MongoProperties

# spring.data.mongodb.authentication-database= # Authentication database name.

# spring.data.mongodb.database= # Database name.

# spring.data.mongodb.field-naming-strategy= # Fully qualified name of the FieldNamingStrategy to use.

# spring.data.mongodb.grid-fs-database= # GridFS database name.

# spring.data.mongodb.host= # Mongo server host. Cannot be set with URI.

# spring.data.mongodb.password= # Login password of the mongo server. Cannot be set with URI.

# spring.data.mongodb.port= # Mongo server port. Cannot be set with URI.

# spring.data.mongodb.repositories.type=auto # Type of Mongo repositories to enable.

# spring.data.mongodb.uri=mongodb://localhost/test # Mongo database URI. Cannot be set with host, port and credentials.

# spring.data.mongodb.username= # Login user of the mongo server. Cannot be set with URI.

spring:

data:

mongodb:

uri: mongodb://localhost:27017

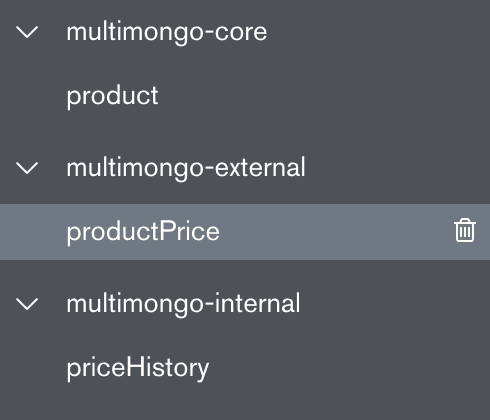

database: multimongo-coreNow, let’s imagine a very simple and stupid case for our data split. Say we have a core database that’s storing the products for our web store. Then we have data about the price of the products; this data doesn’t need any access restriction as any user on the web can see the price, so we’ll call it external. However, we also have a price history, which we use for analytical purposes. This is limited access information, so we say, OK, it goes into a separate database which we’ll protect and call internal.

Obviously, in my case all of these are still on localhost and not protected, but bear with me, it is just an example.

# Predefined spring data properties don't help us anymore.

# Therefore, we're creating our own configuration for the additional mongo instances.

additional-db:

internal:

uri: mongodb://localhost:27017

database: multimongo-internal

external:

uri: mongodb://localhost:27017

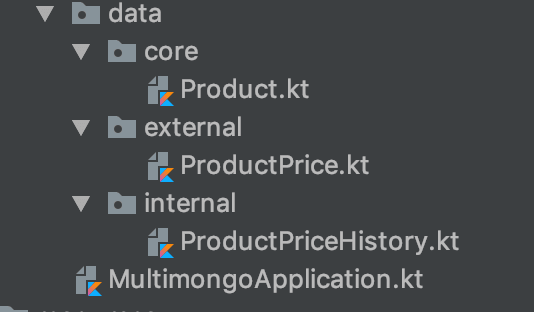

database: multimongo-externalWe will also create three different directories to keep our data access related code in: data.core, data.external, and data.internal.

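Product.kt holds the entity and repository for the product, while ProductPrice.kt and ProductPriceHistory.kt hold the current and the historical prices, respectively. Following our data split, Product.kt goes into data.core, ProductPrice.kt into data.external, and ProductPriceHistory.kt into data.internal. The entities and repos are pretty basic.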

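```kotlin
// Product.kt
package com.example.multimongo.data.core

import org.springframework.data.annotation.Id
import org.springframework.data.mongodb.core.mapping.Document
import org.springframework.data.mongodb.repository.MongoRepository

@Document
data class Product(
    @Id
    val id: String? = null,
    val sku: String,
    val name: String
)

interface ProductRepository : MongoRepository<Product, String>
```

```kotlin
// ProductPrice.kt
package com.example.multimongo.data.external

import org.springframework.data.annotation.Id
import org.springframework.data.mongodb.core.mapping.Document
import org.springframework.data.mongodb.repository.MongoRepository

@Document(collection = "productPrice")
data class ProductPrice(
    @Id
    val id: String? = null,
    val sku: String,
    val price: Double
)

interface ProductPriceRepository : MongoRepository<ProductPrice, String>
```

```kotlin
// ProductPriceHistory.kt
package com.example.multimongo.data.internal

import java.util.Date
import org.springframework.data.annotation.Id
import org.springframework.data.mongodb.core.mapping.Document
import org.springframework.data.mongodb.repository.MongoRepository

@Document(collection = "priceHistory")
data class PriceHistory(
    @Id
    val id: String? = null,
    val sku: String,
    val prices: MutableList<PriceEntry> = mutableListOf()
)

// A single price; `expired` stays null for the current price.
data class PriceEntry(
    val price: Double,
    val expired: Date? = null
)

interface PriceHistoryRepository : MongoRepository<PriceHistory, String>
```

Now, let’s create a configuration for our default Mongo database.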

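```kotlin
import org.springframework.boot.autoconfigure.mongo.MongoAutoConfiguration
import org.springframework.context.annotation.Bean
import org.springframework.context.annotation.Configuration
import org.springframework.context.annotation.Import
import org.springframework.data.mongodb.MongoDbFactory
import org.springframework.data.mongodb.core.MongoTemplate
import org.springframework.data.mongodb.repository.config.EnableMongoRepositories

@Configuration
@EnableMongoRepositories(basePackages = ["com.example.multimongo.data.core"])
@Import(value = [MongoAutoConfiguration::class])
class CoreMongoConfiguration {

    // The bean name "mongoTemplate" matches the default mongoTemplateRef of
    // @EnableMongoRepositories, so the core repositories use this template.
    @Bean
    fun mongoTemplate(mongoDbFactory: MongoDbFactory): MongoTemplate {
        return MongoTemplate(mongoDbFactory)
    }
}
```

We are using the MongoAutoConfiguration class here to create the default Mongo client instance from the spring.data.mongodb properties. However, we still need a MongoTemplate bean, which we define explicitly: Spring Boot’s auto-configured MongoTemplate backs off as soon as another MongoTemplate bean exists, and the two extra templates we’re about to define are exactly such beans.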

As you can see, the core configuration only scans the core package. This is actually the key to everything: we need to put our repositories in different packages, and the repositories in each package will be wired to a different Mongo template. So, let’s create those additional Mongo templates. We’re going to use a base class that keeps some shared functionality we’ll reuse to create the Mongo clients.

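```kotlin
import com.mongodb.MongoClient
import com.mongodb.MongoClientURI
import org.springframework.data.mongodb.core.MongoTemplate
import org.springframework.data.mongodb.core.SimpleMongoDbFactory

// Shared base for the extra Mongo configurations. It is abstract, so it needs
// no @Configuration of its own; the subclasses supply the settings via @Value.
abstract class ExtraMongoConfiguration {

    abstract val uri: String?
    abstract val host: String?
    abstract val port: Int?
    abstract val database: String?

    /**
     * Creates the MongoClient: a non-empty URI takes precedence over host and port.
     */
    private val mongoClient: MongoClient
        get() {
            val connectionUri = uri
            if (connectionUri != null && connectionUri.isNotEmpty()) {
                return MongoClient(MongoClientURI(connectionUri))
            }
            return MongoClient(host!!, port!!)
        }

    /**
     * Factory method to create the MongoTemplate for the configured database.
     */
    protected fun mongoTemplate(): MongoTemplate {
        val factory = SimpleMongoDbFactory(mongoClient, database!!)
        return MongoTemplate(factory)
    }
}
```

And then, finally, we create the two configurations that hold the Mongo template instances for our external and internal databases.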

```kotlin
import org.springframework.beans.factory.annotation.Value
import org.springframework.context.annotation.Bean
import org.springframework.context.annotation.Configuration
import org.springframework.data.mongodb.core.MongoTemplate
import org.springframework.data.mongodb.repository.config.EnableMongoRepositories

// Repositories in data.external are bound to the "externalMongoTemplate" bean.
@EnableMongoRepositories(
    basePackages = ["com.example.multimongo.data.external"],
    mongoTemplateRef = "externalMongoTemplate")
@Configuration
class ExternalDatabaseConfiguration : ExtraMongoConfiguration() {

    @Value("\${additional-db.external.uri:}")
    override val uri: String? = null

    @Value("\${additional-db.external.host:}")
    override val host: String? = null

    @Value("\${additional-db.external.port:0}")
    override val port: Int? = 0

    @Value("\${additional-db.external.database:}")
    override val database: String? = null

    @Bean("externalMongoTemplate")
    fun externalMongoTemplate(): MongoTemplate = mongoTemplate()
}

// Repositories in data.internal are bound to the "internalMongoTemplate" bean.
@EnableMongoRepositories(
    basePackages = ["com.example.multimongo.data.internal"],
    mongoTemplateRef = "internalMongoTemplate")
@Configuration
class InternalDatabaseConfiguration : ExtraMongoConfiguration() {

    @Value("\${additional-db.internal.uri:}")
    override val uri: String? = null

    @Value("\${additional-db.internal.host:}")
    override val host: String? = null

    @Value("\${additional-db.internal.port:0}")
    override val port: Int? = 0

    @Value("\${additional-db.internal.database:}")
    override val database: String? = null

    @Bean("internalMongoTemplate")
    fun internalMongoTemplate(): MongoTemplate = mongoTemplate()
}
```

So, we now have three Mongo template beans, created by mongoTemplate(), externalMongoTemplate(), and internalMongoTemplate() in three different configurations. Each configuration scans its own package and binds the repositories it finds there to a template via the mongoTemplateRef attribute of @EnableMongoRepositories. The core configuration doesn’t set mongoTemplateRef at all, so it falls back to the default value, mongoTemplate, which is the name of the bean it creates itself. In other words, every configuration uses the bean it creates. Spring doesn’t have a problem with that; the dependencies are resolved in the correct order.
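One detail in ExtraMongoConfiguration is easy to miss: because the @Value placeholders above have defaults (an empty string for uri and host, 0 for port), these properties are never null, so the `host!!` and `port!!` checks never fire even when nothing is configured. The plain-Kotlin sketch below keeps the same precedence rule (a non-empty URI wins) but fails fast instead. The function name `resolveConnectionString` and the fallback to Mongo’s default port 27017 are my own assumptions, not part of the original project.

```kotlin
// Hypothetical helper, not part of the original project: same precedence as
// ExtraMongoConfiguration (a non-empty URI wins over host/port), but it fails
// fast instead of silently using an empty host or port 0.
fun resolveConnectionString(uri: String?, host: String?, port: Int?): String {
    // "${...uri:}" injects "" when the property is missing, so blank counts as unset.
    if (uri != null && uri.isNotBlank()) return uri
    require(host != null && host.isNotBlank()) {
        "Neither a URI nor a host is configured for this Mongo database"
    }
    // "${...port:0}" injects 0 when the property is missing; assume Mongo's default port then.
    val effectivePort = if (port == null || port <= 0) 27017 else port
    return "mongodb://$host:$effectivePort"
}
```

Inside ExtraMongoConfiguration, the result could then be passed to `MongoClient(MongoClientURI(...))`, so that a single code path handles both styles of configuration.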

So, how are we to check that everything is working? There’s one more step to be done: we need to initialize some data and then get it from the database.

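Since it’s just an example, we’ll create some very basic data right when the application starts up, just to see that it’s there. We’ll use an ApplicationListener for that. One caveat: Spring publishes ContextStartedEvent only when start() is called explicitly on the application context, and SpringApplication.run() doesn’t do that on its own. So, for the listener below to fire, the main function has to call it, e.g. `runApplication<MultimongoApplication>(*args).start()` (assuming the MultimongoApplication class that Initializr generates for this project); alternatively, the listener could react to Spring Boot’s ApplicationReadyEvent instead.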

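```kotlin
import com.example.multimongo.data.core.Product
import com.example.multimongo.data.core.ProductRepository
import com.example.multimongo.data.external.ProductPrice
import com.example.multimongo.data.external.ProductPriceRepository
import com.example.multimongo.data.internal.PriceEntry
import com.example.multimongo.data.internal.PriceHistory
import com.example.multimongo.data.internal.PriceHistoryRepository
import org.springframework.context.ApplicationListener
import org.springframework.context.event.ContextStartedEvent
import org.springframework.stereotype.Component
import java.time.ZonedDateTime
import java.util.Date

@Component
class DataInitializer(
    val productRepo: ProductRepository,
    val priceRepo: ProductPriceRepository,
    val priceHistoryRepo: PriceHistoryRepository
) : ApplicationListener<ContextStartedEvent> {

    override fun onApplicationEvent(event: ContextStartedEvent) {
        // clean up all three databases
        productRepo.deleteAll()
        priceRepo.deleteAll()
        priceHistoryRepo.deleteAll()

        val p1 = productRepo.save(Product(sku = "123", name = "Toy Horse"))
        val p2 = productRepo.save(Product(sku = "456", name = "Real Horse"))

        val h1 = PriceHistory(sku = p1.sku)
        val h2 = PriceHistory(sku = p2.sku)

        for (i in 5 downTo 1) {
            if (i == 5) {
                // current price
                priceRepo.save(ProductPrice(sku = p1.sku, price = i.toDouble()))
                priceRepo.save(ProductPrice(sku = p2.sku, price = (i * 2).toDouble()))
                // current price history
                h1.prices.add(PriceEntry(price = i.toDouble()))
                h2.prices.add(PriceEntry(price = (i * 2).toDouble()))
            } else {
                // previous price, expired i months ago
                val expiredDate = Date(ZonedDateTime.now()
                    .minusMonths(i.toLong())
                    .toInstant()
                    .toEpochMilli())
                h1.prices.add(PriceEntry(price = i.toDouble(), expired = expiredDate))
                h2.prices.add(PriceEntry(price = (i * 2).toDouble(), expired = expiredDate))
            }
        }

        priceHistoryRepo.saveAll(listOf(h1, h2))
    }
}
```

How do we check, then, that the data has been saved to the database? Since it’s a web application, we’ll expose the data through a REST controller.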

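```kotlin
import com.example.multimongo.data.core.Product
import com.example.multimongo.data.core.ProductRepository
import com.example.multimongo.data.external.ProductPrice
import com.example.multimongo.data.external.ProductPriceRepository
import com.example.multimongo.data.internal.PriceHistory
import com.example.multimongo.data.internal.PriceHistoryRepository
import org.springframework.web.bind.annotation.GetMapping
import org.springframework.web.bind.annotation.RequestMapping
import org.springframework.web.bind.annotation.RestController

@RestController
@RequestMapping("/api")
class ProductResource(
    val productRepo: ProductRepository,
    val priceRepo: ProductPriceRepository,
    val priceHistoryRepo: PriceHistoryRepository
) {

    @GetMapping("/product")
    fun getProducts(): List<Product> = productRepo.findAll()

    @GetMapping("/price")
    fun getPrices(): List<ProductPrice> = priceRepo.findAll()

    @GetMapping("/priceHistory")
    fun getPricesHistory(): List<PriceHistory> = priceHistoryRepo.findAll()
}
```

The REST controller just uses our repos to call the findAll() method. We aren’t doing any data transformation, paging, or sorting; we just want to see that something is there. Finally, we can start the application and open http://localhost:8080/api/product to see what happens: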

```json
[
  {
    "id": "5d5e64d80a986d381a8af4ce",
    "name": "Toy Horse",
    "sku": "123"
  },
  {
    "id": "5d5e64d80a986d381a8af4cf",
    "name": "Real Horse",
    "sku": "456"
  }
]
```

Yay, there are the two products we created! We can see that autogenerated IDs were assigned to them on save; we only defined the names and dummy SKU codes.
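The autogenerated IDs are MongoDB ObjectIds: 12 bytes written as 24 hex characters, where the first 4 bytes hold the creation time in seconds since the Unix epoch and the last 3 bytes are an incrementing counter. As a small sketch (the helper names `objectIdTimestamp` and `objectIdCounter` are made up for this illustration), the two IDs above can be decoded with plain Kotlin:

```kotlin
import java.time.Instant

// Sketch: decode parts of a MongoDB ObjectId given as a 24-character hex string.
// Bytes 0-3 (the first 8 hex characters) hold the creation time in seconds
// since the Unix epoch, big-endian.
fun objectIdTimestamp(hex: String): Instant {
    require(hex.length == 24) { "An ObjectId has 24 hex characters" }
    return Instant.ofEpochSecond(hex.substring(0, 8).toLong(16))
}

// Bytes 9-11 (the last 6 hex characters) hold an incrementing counter.
fun objectIdCounter(hex: String): Int {
    require(hex.length == 24) { "An ObjectId has 24 hex characters" }
    return hex.substring(18).toInt(16)
}
```

For the two IDs above, both timestamps decode to 2019-08-22T09:48:08Z and the counters differ by exactly one, which fits two documents saved one right after the other.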

We can also check the data at http://localhost:8080/api/price and http://localhost:8080/api/priceHistory and make sure that those entities have indeed been created too. I won’t paste that JSON here, as it’s not really relevant.
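If you’re curious what /api/priceHistory should contain, the loop in DataInitializer can be replayed in plain Kotlin. This is only a sketch: `SeedEntry` stands in for PriceEntry and `seedHistory` is a hypothetical helper, so the snippet compiles without Spring or Mongo.

```kotlin
import java.time.ZonedDateTime
import java.util.Date

// Stand-in for PriceEntry so that the sketch is self-contained.
data class SeedEntry(val price: Double, val expired: Date? = null)

// Replays DataInitializer's loop for one product: i runs from 5 down to 1,
// i == 5 is the current price (no expiry), every other price expired i months ago.
// `factor` is 1 for the toy horse and 2 for the real horse.
fun seedHistory(factor: Int, now: ZonedDateTime = ZonedDateTime.now()): List<SeedEntry> =
    (5 downTo 1).map { i ->
        val price = (i * factor).toDouble()
        if (i == 5) SeedEntry(price)
        else SeedEntry(price, Date.from(now.minusMonths(i.toLong()).toInstant()))
    }
```

`seedHistory(1)` yields the prices 5.0, 4.0, 3.0, 2.0, 1.0 and `seedHistory(2)` yields 10.0, 8.0, 6.0, 4.0, 2.0; only the first entry has no expiry date. Because each expiry is i months ago, 1.0 is the price that expired most recently and 4.0 the one that expired longest ago.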

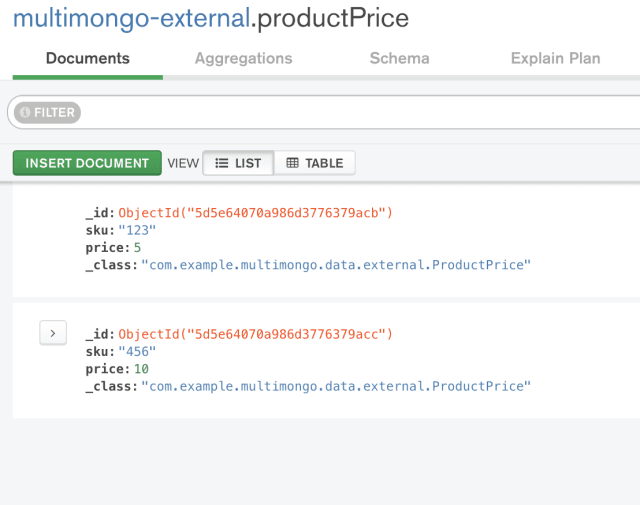

However, how do we make sure that the data has really been saved to (and read from) different databases? For that, we can use any Mongo client application that can connect to the local Mongo instance (I am using MongoDB Compass, the official GUI tool from MongoDB).

Let’s check the content in the database that’s holding our current prices.

If we want to do everything right, we can also check the data with an integration test instead of doing it manually. (Well, not quite everything: we’d need to use an embedded Mongo database for the tests, but we’ll skip that part here to keep the tutorial simple.) We’ll use MockMvc from the spring-test library for this purpose. Note that, as written, the test talks to the same local Mongo instance as the application and runs the initializer, which deletes and re-creates the data.

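```kotlin
import com.example.multimongo.data.core.ProductRepository
import com.example.multimongo.data.external.ProductPriceRepository
import com.example.multimongo.data.internal.PriceHistoryRepository
import org.hamcrest.Matchers.hasItems
import org.junit.Before
import org.junit.Test
import org.junit.runner.RunWith
import org.springframework.beans.factory.annotation.Autowired
import org.springframework.boot.test.context.SpringBootTest
import org.springframework.context.ApplicationContext
import org.springframework.context.event.ContextStartedEvent
import org.springframework.test.context.junit4.SpringRunner
import org.springframework.test.web.servlet.MockMvc
import org.springframework.test.web.servlet.request.MockMvcRequestBuilders.get
import org.springframework.test.web.servlet.result.MockMvcResultMatchers.jsonPath
import org.springframework.test.web.servlet.result.MockMvcResultMatchers.status
import org.springframework.test.web.servlet.setup.MockMvcBuilders

// DataInitializer and ProductResource are assumed to live in this test's package.
@RunWith(SpringRunner::class)
@SpringBootTest
class MultimongoApplicationTests {

    @Autowired
    private lateinit var productRepo: ProductRepository

    @Autowired
    private lateinit var priceRepo: ProductPriceRepository

    @Autowired
    private lateinit var priceHistoryRepo: PriceHistoryRepository

    @Autowired
    private lateinit var initializer: DataInitializer

    @Autowired
    private lateinit var context: ApplicationContext

    private lateinit var mvc: MockMvc

    @Before
    fun setUp() {
        val resource = ProductResource(productRepo, priceRepo, priceHistoryRepo)
        mvc = MockMvcBuilders.standaloneSetup(resource).build()
        // ContextStartedEvent isn't published automatically, so run the initializer by hand.
        initializer.onApplicationEvent(ContextStartedEvent(context))
    }

    @Test
    fun productsCreated() {
        mvc.perform(get("/api/product"))
            .andExpect(status().isOk)
            .andDo { println(it.response.contentAsString) }
            .andExpect(jsonPath("$.[*].sku").isArray)
            .andExpect(jsonPath("$.[*].sku").value(hasItems("123", "456")))
    }

    @Test
    fun pricesCreated() {
        mvc.perform(get("/api/price"))
            .andExpect(status().isOk)
            .andDo { println(it.response.contentAsString) }
            .andExpect(jsonPath("$.[*].sku").isArray)
            .andExpect(jsonPath("$.[*].sku").value(hasItems("123", "456")))
            .andExpect(jsonPath("$.[0].price").value(5.0))
            .andExpect(jsonPath("$.[1].price").value(10.0))
    }

    @Test
    fun pricesHistoryCreated() {
        mvc.perform(get("/api/priceHistory"))
            .andExpect(status().isOk)
            .andDo { println(it.response.contentAsString) }
            .andExpect(jsonPath("$.[*].sku").isArray)
            .andExpect(jsonPath("$.[*].sku").value(hasItems("123", "456")))
            .andExpect(jsonPath("$.[0].prices.[*].price")
                .value(hasItems(5.0, 4.0, 3.0, 2.0, 1.0)))
            .andExpect(jsonPath("$.[1].prices.[*].price")
                .value(hasItems(10.0, 8.0, 6.0, 4.0, 2.0)))
    }
}
```

You can find the full working example in my GitHub repo. I hope this helped you solve the issue of using several Mongo instances in one Spring Boot web application! It’s not such a difficult problem, but also not quite trivial.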

When I was looking at other examples on the web, I also read this article (Spring Data Configuration: Multiple Mongo Databases by Azadi Bogolubov), and it was pretty good and comprehensive. However, it didn’t quite fit my case, because it overrode the automatic Mongo configuration completely, whereas I wanted to keep it for my default database but not for the others. Still, the approach in that article is based on the same principle of binding different repositories to different Mongo templates.

The advantage of keeping the default configuration is that you can easily get rid of the extra classes once something changes, for example, when all your data goes back into the same database. You could simply delete the non-default configurations, keep the default one, and only change the packages it scans; the application would continue to work without a hitch. That said, both approaches work and are completely valid.

This article is also published on Medium here.

Published on Java Code Geeks with permission by Maryna Cherniavska, partner at our JCG program. See the original article here: How-To: Spring Boot 2 Web Application with Multiple Mongo Repositories and Kotlin. Opinions expressed by Java Code Geeks contributors are their own.