IDE approach to log analysis pt. 1

Intro

I think most software engineers understand the importance of logs. They have become part of software development. If something doesn’t work we try to find the cause in the logs. This could be enough for simple cases when a bug prevents an application from opening a window. You find the issue in the logs, look it up on Google and apply the solution. But if you are fixing bugs in a large product with many components analyzing logs becomes the main problem. Usually sustain engineers (who are fixing bugs not developing new features) need to work with many hundreds of megabytes of logs. The logs are usually split into separate files 50-100 MB each and zipped.

There are several approaches to make this work easier. I’ll describe some existing solutions and then explain a theoretical approach to this problem. This blog post will not discuss any concrete implementations.

Existing Solutions

Text Editor

This solution is not actually a solution it is what most people would do when they need to read a text file. Some text editors could have useful features like color selection, bookmarks which can make the work easier. But still the text editor falls short of a decent solution.

Logsaw

This tool can use the log4j pattern to extract the fields from your logs. Sounds good but these fields are already obvious from the text. Clearly the improvement is insignificant over a simple text editor.

LogStash

This project looks pretty alive. But this approach is quite specific. Even though I’ve never worked with this tool from the description I understood that they use ElasticSearch and simple text search to analyze logs. The logs must be uploaded somewhere and indexed. After that the tool may show the most common words, the user may use text search etc. Sounds good, seems to be some improvement. Unfortunately not so much. Here are the cons:

- Some time is required to begin working with the logs. One has to upload them, index them. After the work is done these logs must be removed from the system. Looks like a little overkill if the logs are meant to be analyzed and discarded.

- A lot of components involved with a lot of configuration necessary.

- Full text search is not very useful with logs. Usually the engineer is looking for something like “connection 2345 created with parameter 678678678”. Looking for “created with parameter” will return all connections. Looking for “connection 2345” will return all such statements but usually there is only one – when this connection was created.

Other Cloud-based Solutions

There are a lot of cloud-based solutions available. Most of them have commercial plans and some have free plans. They offer notifications, visualizations and other features but the main principles are the same as for LogStash.

Log Analysis Explained

To understand why these solutions do not perform well for analyzing complex issues we need to try to understand the workflow. Here is a sample workflow with the text editor:

- An engineer received 1 GB of logs with the information that the bug happened at 23:00 with request ID 12345.

- First he or she tries to find any errors or exceptions around that time.

- If that fails the engineer has to reconstruct the flow of events for this request. He or she begins looking for statements like “connection created”, “connection deleted”, “request moved to this stage” trying to narrow down the time frame for the issue.

- That is usually successful (even though could take a lot of time) now it is clear that the issue happened after connection 111 was moved to state Q.

- After digging a little more the engineer finds out that this coincides with connection 222 moving to state W.

- Finally the engineer is delighted to see that the thread that moved connection 222 to the new state also modified another variable that affected connection 111. Finally the root cause.

In this workflow we see that the engineer most of the time is looking for standard strings with some parameters. If only it could be simplified…

IDE approach

There are several parts to the IDE approach.

- Regular expressions. With regular expressions one can specify the template and search for it in the logs. Looking for standard strings is much simpler with regular expressions.

- Regular Expressions Configuration. The idea here is that standard strings like “connection created \d{5}\w{2}”, “connection \d{5}\w{2} moved to stage \w{7}”, “connection\d{5}\w{2} deleted” do not change often. Writing the regular expression to find it every time is unwieldy because such regexes could be really long and complicated. It is easier if they can be configured and used by clicking on a button.

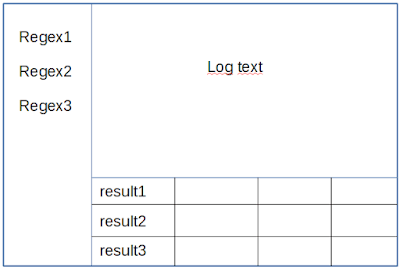

- IDE. We need some kind of an IDE to unite this together. To read the configuration, show the logs files and stored regexes, display the text and search results. Preferably like this:

- Color features. From experience I know that log analysis is much easier when you can mark some strings with color to easily see it in the logs. Most commercial log analyzer tools use color selection. The IDE should help with that.

Pros and Cons

Pros of the IDE approach:

- No cloud service necessary. No loading gigabytes of logs somewhere, no cloud configuration. One only has to open the IDE for logs, open the log folder and start analyzing.

- If the IDE is free the whole process is completely free. Anyway should be cheaper than a log service.

Cons of the IDE approach:

- Most cloud services offer real-time notifications and log analysis “on the fly”. It means as soon as the specified exception happens the user is notified. The IDE approach cannot do that.

- The requirements for the user’s PC are somewhat higher because working with big strings in Java consumes a lot of memory. 8 GB is the minimum requirement from my experience.

The bottom line is the IDE approach is suitable to analyze complicated issues in the logs. It cannot offer real-time features of cloud services but should be much cheaper and easier for analyzing and fixing bugs.

Final Thoughts

It would be great if someone could implement this great approach! I mean create this IDE with all those features and make log analysis easier for everyone! I know from experience that this could be a tedious work that feels harder than it actually is. In the next post (part 2) I’ll explain the difficulties/challenges with this approach and offer a working implementation based on the Eclipse framework.

| Published on Java Code Geeks with permission by Vadim Korkin, partner at our JCG program. See the original article here: IDE approach to log analysis pt. 1 Opinions expressed by Java Code Geeks contributors are their own. |

I am now making AI to analyze its own logfiles :)

Interesting approach and would work well for a single logfile. However, I feel the approach seems naive and possible alot of effort would be required in a microservices world, where you have multiple log files to analyse and interact with. I think this is where software like Kibana and Splunk really add value.

I would argue against the “naive” definition. This approach worked well for me with many log files (gigabytes of them each one zipped). I used this approach for a commercial project (not exactly old and not exactly new) where we didn’t have cloud services (it was 4 years ago).

With one log file Notepad++ would have been enough.

Regarding Kibana I understand there is a lot of hype around these services. They offer wonderful visualizations and look great. They seem to make this work easier. But do they really help to analyze complex issues?