Comparing SQL Functions and Performance with Apache Spark and Apache Drill

SQL engines for Hadoop differ in their approach and functionality. My focus for this blog post is to compare and contrast the functions and performance of Apache Spark and Apache Drill and discuss their expected use cases.

Running queries and analysis on structured databases is a standard operation and has been in place for decades. This typically involves developing a structure and schema which gets implemented in a database.

The volume of data handled by companies grows every year, but structured data and everything else are growing at completely different rates. According to a recent study, there are presently 3.5 ZB (zettabytes) of structured data and about 1.4 times as much semi-structured and unstructured data, roughly 5 ZB. By 2020, however, semi-structured and unstructured data is predicted to be roughly 6 times the volume of structured data: 30 ZB versus 5 ZB.[1]

Figuring out how to analyze this growing amount of data is a key objective for business and data analysts. It is difficult because unstructured data comes in many different types, and because its actual meaning (the words in a word-processing document, the frames of a video, and so on) is likely to depend on the semantics, or context, of what is being analyzed. Beyond the semantics of the data itself, the path at which the data is stored may also need to be understood as part of those semantics. (For example, the data paths “C:\Users\kiuchi\My Pets\whale\johnny.jpg” and “http://kiuchi.local/My Pets/cat/michelle.jpg” do more than point to the images they reference; they also suggest that I have a whale and a cat among my pets. In other words, the components of a data path can be interpreted as nested columns.)
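As a minimal sketch of reading a data path as nested columns, the following plain Python splits the two example paths into their components. The function names and the column names (`collection`, `species`, `file`) are my own illustrative assumptions, and the sketch assumes the folder layout of those two examples; it is not how Drill or Spark expose paths.

```python
from pathlib import PurePosixPath, PureWindowsPath
from urllib.parse import unquote, urlparse


def path_components(path: str) -> list[str]:
    """Split a Windows path or an http(s) URL into its named parts."""
    if "://" in path:
        pure = PurePosixPath(unquote(urlparse(path).path))
    else:
        pure = PureWindowsPath(path)
    # Drop the root or drive anchor ("/" or "C:\\") so only named parts remain.
    return [part for part in pure.parts if part != pure.anchor]


def pet_record(path: str, collection: str = "My Pets") -> dict[str, str]:
    """Treat the parts after `collection` as nested columns (hypothetical names)."""
    parts = path_components(path)
    start = parts.index(collection)  # raises ValueError if the folder is absent
    species, filename = parts[start + 1], parts[start + 2]
    return {"collection": collection, "species": species, "file": filename}


paths = [
    r"C:\Users\kiuchi\My Pets\whale\johnny.jpg",
    "http://kiuchi.local/My Pets/cat/michelle.jpg",
]
records = [pet_record(p) for p in paths]
for record in records:
    print(record)
```

Both paths end up with the same three columns even though one is a local file and the other a URL, which is the sense in which the path itself carries part of the data's meaning.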

The situation is further complicated by the growing amount of information held as “semi-structured data” in lightweight data exchange formats such as XML and JSON, which spread with the growth of information sharing over the internet. Because of its flexible schema, semi-structured data does not fit into data stores that require a strictly predefined schema, such as relational databases. Compared to emails or office documents, however, it clearly is structured. How to store and analyze such semi-structured data is currently one of the major issues facing analytics professionals.
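To make the schema problem concrete, here is a small sketch in plain Python. The records are made up for illustration: the second one carries a nested `owner` field that the first lacks, so a fixed set of columns can only be derived after looking at the data.

```python
import json


def flatten(value, prefix=""):
    """Flatten nested dicts into one dict with dotted column names."""
    if not isinstance(value, dict):
        return {prefix: value}
    flat = {}
    for key, child in value.items():
        name = f"{prefix}.{key}" if prefix else key
        flat.update(flatten(child, name))
    return flat


# Hypothetical JSON records; the fields differ from one record to the next.
raw = """
{"id": 1, "name": "johnny", "species": "whale"}
{"id": 2, "name": "michelle", "species": "cat", "owner": {"name": "kiuchi", "city": "Tokyo"}}
"""

rows = [flatten(json.loads(line)) for line in raw.strip().splitlines()]

# The column set is discovered from the data instead of being declared up front.
columns = sorted({column for row in rows for column in row})
table = [[row.get(column) for column in columns] for row in rows]

print(columns)
for line in table:
    print(line)
```

A relational table would need `owner.name` and `owner.city` declared in advance; here they simply appear as empty values for records that do not have them.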

I suspect that not many of my readers know the expression “Polyglot Persistence.” The concept was originally developed by Scott Leberknight and came into the limelight in 2011 when it was taken up by Martin Fowler, an advocate of XP (eXtreme Programming). It advocates cross-cutting access to data stored in a wide variety of formats across various data sources.[2] If this is achieved, data analysts will be able to grasp the location and format of the data that they are trying to analyze, leading to a dramatic reduction in data conversion costs.

In other words, for big data analytics, which has recently gained a lot of exposure as a buzzword, handling a wide variety of data remains an ongoing problem. Apache Drill and Apache Spark are both execution engines for Hadoop that were born under these circumstances. By comparing their functions and performance, this article explores their characteristics and expected use cases.

Functional Comparison

As data sources, both Apache Drill and Apache Spark support semi-structured data such as CSV and JSON, as well as relational databases through JDBC. Apache Drill also supports NoSQL databases such as MongoDB and HBase.

| Engine | Supported Data Sources |
| --- | --- |
| Apache Drill | CSV, JSON, Parquet, Hive, HBase, MongoDB, MapR-DB, Amazon S3 |
| Apache Spark | CSV, JSON, Parquet, HiveQL (compatible), JDBC |

A defining characteristic of both engines is that they can analyze a mix of structured and semi-structured data sources, which sets them apart from traditional SQL engines. They also have the means to access local files, remote data sources that support standard database connection protocols, and data sources on the internet that require connection methods other than JDBC. Finally, both have “plugin” features, so additional data sources can be added.[3]

Clearly, these designs presuppose a wide variety of data sources and cross-cutting data analysis. Both engines are good representatives of next-generation SQL engines that take “Polyglot Persistence” into account and operate in a big data environment with mixed data sources.
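The idea of analyzing structured and semi-structured sources together can be sketched without either engine. The example below uses only the Python standard library: an in-memory SQLite table stands in for the structured source and a few JSON lines for the semi-structured one. The table, field names, and rows are all made up for illustration, and this is not the Drill or Spark API; both engines let you express this kind of cross-source join in SQL instead.

```python
import json
import sqlite3

# Structured source: a relational table with a fixed schema (made-up rows).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT)")
db.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "alice"), (2, "bob")])

# Semi-structured source: JSON lines whose fields vary from record to record.
orders_json = """
{"customer_id": 1, "amount": 40}
{"customer_id": 1, "amount": 50, "coupon": "SPRING"}
{"customer_id": 2, "amount": 30}
"""


def total_by_customer(connection, json_lines):
    """Join the JSON orders against the relational customers table."""
    totals = {}
    for line in json_lines.strip().splitlines():
        order = json.loads(line)
        key = order["customer_id"]
        totals[key] = totals.get(key, 0) + order["amount"]
    query = "SELECT customer_id, name FROM customers ORDER BY customer_id"
    return {name: totals.get(cid, 0) for cid, name in connection.execute(query)}


totals = total_by_customer(db, orders_json)
print(totals)
```

The hand-written loop is exactly the conversion work that a cross-source SQL engine is meant to take off the analyst's hands.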

Performance Comparison and Discussion

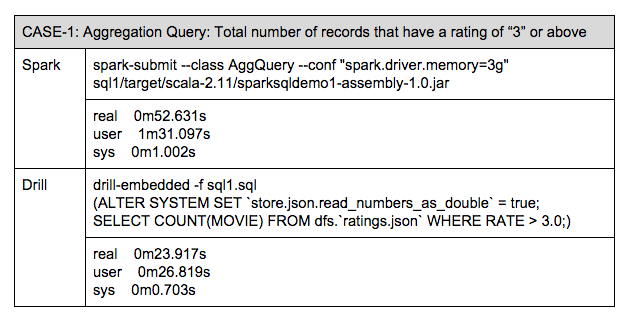

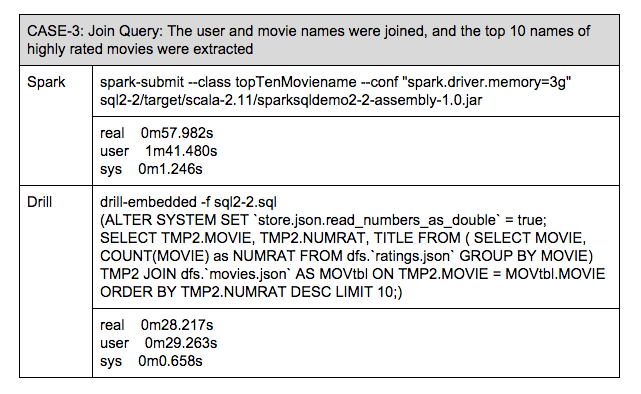

Let me now compare the query performance of the two technologies. Here, we will use the “MovieLens” data set, which consists of a ratings database from movie viewers collected by a research project at the University of Minnesota. Performance will be measured by running three types of SQL queries, including JOIN access.

- The program used here is available on GitHub (m-kiuchi/MovieLensSQL)
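To give a feel for the kind of measurement involved, here is a minimal timing sketch. It is not the benchmark program linked above: it runs on SQLite from the Python standard library, the schema is only loosely modeled on MovieLens, the rows are invented, and the three queries are my own assumptions about what "three types of SQL queries, including JOIN access" might look like.

```python
import sqlite3
import time

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE movies  (movie_id INTEGER PRIMARY KEY, title TEXT);
    CREATE TABLE ratings (user_id INTEGER, movie_id INTEGER, rating INTEGER);
    """
)
# Invented sample rows, not taken from the real data set.
conn.executemany(
    "INSERT INTO movies VALUES (?, ?)",
    [(1, "Movie A"), (2, "Movie B"), (3, "Movie C")],
)
conn.executemany(
    "INSERT INTO ratings VALUES (?, ?, ?)",
    [(10, 1, 5), (11, 1, 4), (10, 2, 2), (12, 2, 3), (12, 3, 5)],
)

# Hypothetical queries: a plain count, an aggregate, and a JOIN.
QUERIES = {
    "count": "SELECT COUNT(*) FROM ratings",
    "aggregate": (
        "SELECT movie_id, AVG(rating) FROM ratings "
        "GROUP BY movie_id ORDER BY movie_id"
    ),
    "join": """
        SELECT m.title, AVG(r.rating) AS avg_rating
        FROM ratings AS r JOIN movies AS m ON m.movie_id = r.movie_id
        GROUP BY m.movie_id, m.title
        ORDER BY avg_rating DESC, m.title
    """,
}


def run_timed(connection, sql):
    """Run one query and return its rows plus the elapsed wall-clock seconds."""
    start = time.perf_counter()
    rows = connection.execute(sql).fetchall()
    return rows, time.perf_counter() - start


results = {}
for name, sql in QUERIES.items():
    rows, seconds = run_timed(conn, sql)
    results[name] = rows
    print(f"{name}: {len(rows)} row(s) in {seconds:.6f}s")
```

With a handful of rows the timings are meaningless; the point is only the shape of the measurement, which stays the same when the same SQL is submitted to a different engine.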

The results shown here were obtained on my PC (2 cores, 6 GB memory).

Where did the differences in results come from? I believe they stem from the different ideas behind Apache Spark and Apache Drill. Apache Drill was developed to scan and filter data sources, so it likely performs no extra processing. As each piece of data is scanned, it is sent on to the next step of a processing pipeline, with the focus on obtaining the final results in the shortest time possible. Because Drill assumes that query processing completes in a short time, the query is simply re-run if a failure occurs during execution.

In Spark, on the other hand, scanning the data source is only a preliminary step for parallel processing. Because the aim is reusability, partitioning and staging take place at each step, much as in MapReduce. This allows more flexible control of the data flows in a program. If a failure occurs during a long batch job, processing can be restarted from the intermediate data, which increases fault tolerance.

This is the likely cause of the differences in performance. Of course, if sufficient machine resources are available, or if we consider the processing time of the entire workflow, the observed differences in processing time might end up falling within the margin of error.
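The contrast between the two execution styles can be sketched as a toy model in plain Python. This is only an illustration of the idea as I have described it, not how either engine is implemented; all function names are hypothetical. The pipelined version streams each row straight through and must rescan after a failure, while the staged version keeps each step's output and resumes from it.

```python
def scan(rows, stats):
    """Yield rows one at a time, counting how many were read from the source."""
    for row in rows:
        stats["scanned"] += 1
        yield row


def run_pipelined(rows, stats, keep, transform):
    """Stream each row through filter and transform; nothing is kept in between."""
    return [transform(row) for row in scan(rows, stats) if keep(row)]


def run_staged(rows, stats, keep, transform, checkpoints):
    """Materialize each stage's output so a rerun can resume from the last one."""
    if "scan" not in checkpoints:
        checkpoints["scan"] = list(scan(rows, stats))
    if "filter" not in checkpoints:
        checkpoints["filter"] = [row for row in checkpoints["scan"] if keep(row)]
    return [transform(row) for row in checkpoints["filter"]]


def is_even(row):
    return row % 2 == 0


def failing(row):
    raise RuntimeError("simulated failure in the last step")


def times_ten(row):
    return row * 10


source = [1, 2, 3, 4]

# Pipelined: the failed attempt leaves nothing behind, so the retry rescans.
pipelined_stats = {"scanned": 0}
try:
    run_pipelined(source, pipelined_stats, is_even, failing)
except RuntimeError:
    pass
pipelined_result = run_pipelined(source, pipelined_stats, is_even, times_ten)

# Staged: the retry reuses the checkpointed scan and filter output.
staged_stats = {"scanned": 0}
checkpoints = {}
try:
    run_staged(source, staged_stats, is_even, failing, checkpoints)
except RuntimeError:
    pass
staged_result = run_staged(source, staged_stats, is_even, times_ten, checkpoints)

print(pipelined_result, pipelined_stats)
print(staged_result, staged_stats)
```

Both runs return the same rows, but the scan counters differ: the staged run pays up front to keep intermediate results and never rereads the source, whereas the pipelined run does no extra work on the happy path and simply starts over when something goes wrong.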

The intent here is not to determine, based on the above results, whether Apache Drill or Apache Spark is better. What matters is that both engines can transparently capture data from, and execute queries on, a wide variety of data sources, with Spark also able to perform more varied processing than SQL queries alone. What these two execution engines can achieve clearly goes beyond the scope of traditional relational databases.

- [1] http://wikibon.org/wiki/v/The_Growth_and_Management_of_Unstructured_Data

- [2] There is a similar concept called “Data Virtualization.”

- [3] https://drill.apache.org/docs/storage-plugin-registration/, http://spark-packages.org/

| Reference: | Comparing SQL Functions and Performance with Apache Spark and Apache Drill from our JCG partner Mitsutoshi Kiuchi at the Mapr blog. |