AWS Lambda: An Introduction

Many parts of a modern infrastructure are inherently event-driven or can be represented with an event-driven model. For example, we want to send welcome emails for new signups, scale our systems up or down whenever certain load metrics are hit, or send out notifications to the engineering team when new admin accounts are created for our system. All these tasks come with operational overhead.

Managing and reacting to all those events would require a complex infrastructure, so often we simply put them together in one controller action or use observers that run in the same processes as our applications. This makes the codebase more complex as parts start getting interwoven.

Most teams start pushing those tasks into background workers, but the infrastructure necessary for managing tasks this way is overhead too. Therefore, background workers are typically limited to the most important tasks. This is especially true when the workers aren’t triggered automatically by events but have to be triggered from the codebase. That adds another level of complexity, because it becomes harder to understand which part of the code triggers which event.

As the largest cloud infrastructure provider, AWS must have heard calls to fix this issue repeatedly. So at AWS re:Invent they launched AWS Lambda.

AWS Lambda combines a robust event infrastructure with a simple deployment model. It lets you write small Node.js functions that are called with the event metadata from events triggered by various services or by your own code. Support for more languages will be coming in the future. They also laid out their view on event-driven infrastructure in one of the talks at AWS re:Invent.

AWS Lambda, the basics

Lambda is currently in beta preview, but it is available for everyone to try without any further signup. As mentioned before, it supports Node.js natively, but you can also execute any binary through Node.js, as explained in this blog post. It’s priced per 100 milliseconds of execution, with different tiers depending on the amount of RAM you allocate to the function.
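The arithmetic behind this pricing model can be sketched in a few lines. The price constant below is a placeholder chosen for illustration, not a quoted AWS price, and the sketch assumes cost grows linearly with allocated memory; check the current pricing page before relying on any number.

```javascript
// Sketch of 100 ms billing. PRICE_PER_GB_SECOND is a placeholder value
// chosen for illustration, not a quoted AWS price.
const PRICE_PER_GB_SECOND = 0.00002;

// Execution time is rounded up to the next 100 ms increment.
function billedMilliseconds(durationMs) {
  return Math.ceil(durationMs / 100) * 100;
}

// Assumption: cost scales linearly with billed time and allocated memory.
function estimateCost(durationMs, memoryMb, invocations) {
  const gbSeconds = (billedMilliseconds(durationMs) / 1000) * (memoryMb / 1024);
  return gbSeconds * PRICE_PER_GB_SECOND * invocations;
}

console.log(billedMilliseconds(130)); // 200
console.log(estimateCost(130, 512, 1000000).toFixed(2)); // 2.00
```

The rounding matters for short functions: a call that runs for 130 ms is billed like one that runs for 200 ms.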

Deploying to Lambda is as simple as zipping up all your code and pushing the zip file to Lambda through an API call or a CLI command. Because the whole archive is uploaded, arbitrary files can be available while your Lambda function runs. Lambda then starts the Node.js VM and calls your function. Every subsequent request uses the same instance unless no function has been executed for a while. You can read more about the details in a recent blog post by Tim Wagner, the Lambda GM.
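One practical consequence of instance reuse is that module-level state survives between invocations on the same instance. The sketch below simulates this locally: two calls in one process stand in for two requests served by the same instance. The field names in the return value are our own, for illustration only.

```javascript
// Module-level state is created once per instance (a "cold start") and is
// still there on later calls that land on the same instance.
let invocationCount = 0;
const startedAt = Date.now();

async function handler(event) {
  invocationCount += 1;
  return {
    invocation: invocationCount,
    coldStart: invocationCount === 1,
    instanceAgeMs: Date.now() - startedAt,
  };
}

// Local simulation: two calls in the same process stand in for two requests
// served by the same instance.
async function main() {
  const first = await handler({});
  const second = await handler({});
  console.log(first.coldStart, second.coldStart); // true false
}

main();
```

This is why expensive setup is usually done at module level, and also why a function should never depend on that state being present: a fresh instance starts from scratch.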

Event sources

Currently Lambda supports S3 events, Kinesis Streams and the recently released DynamoDB Streams as event sources, and of course you can trigger your own events by hand. This already allows a wide range of applications to take advantage of Lambda. As mentioned in their presentation and the Q&A that followed, SQS and SNS are natural extensions of this event model.
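Records from these three sources carry an `eventSource` field, so one function can tell them apart. The following is a rough sketch of that routing. The sample events are heavily trimmed and hand-written, and the bucket, key and table values are invented.

```javascript
// Sketch: route each record by its eventSource field. The three values below
// are the ones AWS uses for S3, Kinesis and DynamoDB Streams records.
function describeRecord(record) {
  switch (record.eventSource) {
    case 'aws:s3':
      return 's3 object ' + record.s3.bucket.name + '/' + record.s3.object.key;
    case 'aws:kinesis':
      // Kinesis payloads arrive base64-encoded.
      return 'kinesis data ' + Buffer.from(record.kinesis.data, 'base64').toString('utf8');
    case 'aws:dynamodb':
      return 'dynamodb ' + record.eventName + ' ' + JSON.stringify(record.dynamodb.Keys);
    default:
      return 'unknown source';
  }
}

async function handler(event) {
  // A hand-triggered event may have no Records array at all.
  const records = (event && event.Records) || [];
  return records.map(describeRecord);
}

// Trimmed, hand-written sample event; all values are invented.
const sampleEvent = {
  Records: [
    { eventSource: 'aws:s3', s3: { bucket: { name: 'uploads' }, object: { key: 'a.png' } } },
    { eventSource: 'aws:kinesis', kinesis: { data: 'aGVsbG8=' } },
    { eventSource: 'aws:dynamodb', eventName: 'INSERT', dynamodb: { Keys: { id: { S: '42' } } } },
  ],
};

handler(sampleEvent).then(function (lines) {
  console.log(lines.join('\n'));
});
```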

An example

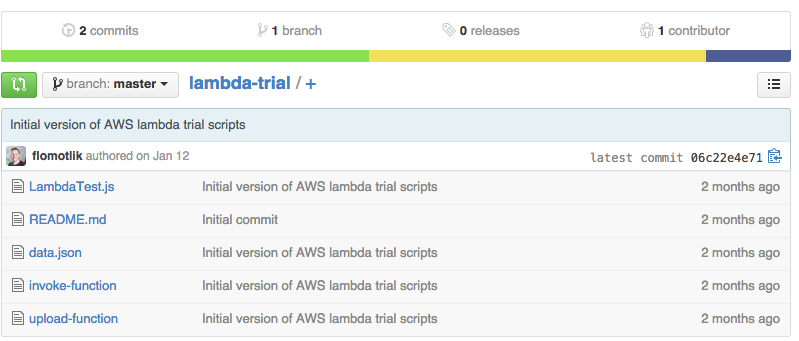

I’ve implemented a very simple Hello World example to get you started. As prerequisites, you need to have the awscli installed locally and be authenticated with full admin access. Follow the Lambda setup in the Lambda console to create an IAM execution role as well.

The repository contains a script to upload and invoke the Lambda function which will print a simple Hello World.

Anders Janmyr recently released a blog post with more examples.

Events change how we build infrastructure

With infrastructure that makes it easy to react to events, we can now solve complex problems by combining simple components. Imagine a scaling problem:

Request time for a particular piece of infrastructure gets too high and an event is triggered. A Lambda function reacting to that event starts a new server from the right AMI. Starting the server triggers a server-created event. Another Lambda function, called whenever a new server starts, checks that the new server is in a VPC and that its ports are all properly closed. Once the server has started and the health checks have passed, another event fires and we put the new server into an ELB.

Each function in the chain solves one particular problem, but combined they handle a larger workflow. They can also be recombined in other ways in the future.
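The shape of such a chain can be simulated locally. In the sketch below an in-process `EventEmitter` stands in for the event infrastructure and plain listener functions stand in for Lambda functions; no AWS calls are made, and the event names, payload fields and AMI id are all hypothetical.

```javascript
const { EventEmitter } = require('events');

// Local simulation only: an in-process EventEmitter stands in for the event
// infrastructure, and plain functions stand in for Lambda functions.
// Event names and payload fields are hypothetical.
function buildScalingWorkflow() {
  const bus = new EventEmitter();
  const log = [];

  // Step 1: react to high request times by starting a server.
  bus.on('latency-too-high', function (e) {
    log.push('launch server from ' + e.ami);
    bus.emit('server-created', { serverId: 'server-1', inVpc: true, openPorts: [] });
  });

  // Step 2: check the new server before it receives traffic.
  bus.on('server-created', function (server) {
    const secure = server.inVpc && server.openPorts.length === 0;
    log.push('security check ' + (secure ? 'passed' : 'failed'));
    if (secure) bus.emit('server-healthy', server);
  });

  // Step 3: put the checked server behind the load balancer.
  bus.on('server-healthy', function (server) {
    log.push('register ' + server.serverId + ' with load balancer');
  });

  return { bus: bus, log: log };
}

const workflow = buildScalingWorkflow();
workflow.bus.emit('latency-too-high', { ami: 'ami-example' });
console.log(workflow.log.join('\n'));
```

None of the three listeners knows about the others; each only knows which event it reacts to and which event it emits. That is what makes it possible to rearrange them later.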

Other examples are sending out welcome emails whenever a new customer joins and is created in the database or automatically checking any new IAM User/Group/Role for excessive permissions. By reacting to DynamoDB table changes or potentially other events from database systems, we can use Lambda as one of our main tools for building out our application. This moves many of the scaling challenges to Lambda and makes sure we can focus on building our product.
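As a rough illustration of the IAM example, the check itself can be a small pure function that a Lambda function would run against each new policy document. This sketch only flags the most blatant case, a statement that allows every action on every resource; a real check would need to cover far more (`NotAction`, conditions, service-level wildcards such as `iam:*`). How the function gets triggered for new IAM entities is left out here.

```javascript
// IAM policy fields may hold a single value or an array; normalize to arrays.
function toArray(value) {
  if (value === undefined) return [];
  return Array.isArray(value) ? value : [value];
}

// Sketch: return the statements that allow every action on every resource.
function findExcessiveStatements(policyDocument) {
  return toArray(policyDocument.Statement).filter(function (statement) {
    return (
      statement.Effect === 'Allow' &&
      toArray(statement.Action).includes('*') &&
      toArray(statement.Resource).includes('*')
    );
  });
}

// Hand-written sample policy for illustration.
const samplePolicy = {
  Version: '2012-10-17',
  Statement: [
    { Effect: 'Allow', Action: 's3:GetObject', Resource: 'arn:aws:s3:::uploads/*' },
    { Effect: 'Allow', Action: '*', Resource: '*' },
  ],
};

console.log(findExcessiveStatements(samplePolicy).length); // 1
```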

How we could use it in the future

Codeship is an inherently event-driven system. We can see many different ways to include Lambda in our infrastructure that will be of interest to others too. From simple background tasks to scaling and health checks it allows for a wide variety of use cases.

One of the most interesting points made by the Lambda team is the potential of sharing functions. Due to its standard event model it would be possible in the future to easily share code for common operations. Sharing would further reduce code we have to write and make infrastructure even simpler.

Staying on top of the functions

Managing dozens of small services and functions becomes complex very quickly. You want to keep an overview of what’s happening in your infrastructure and which event triggers which function. Because Lambda is a single, managed point of entry, AWS can build a nice UI on top of it, as can external teams reading from the AWS API.

We’ve moved complexity from building and managing the lower level infrastructure to staying on top of it. This is an inherently better problem to have. It’s now not just our problem but a shared one: ours, AWS’s and that of everyone else using Lambda. That creates enough incentive to solve this problem well collectively.

Imagine a system that tracks every interaction a Lambda function performs and is therefore able to trace any function chain throughout your infrastructure and show you the dependencies between functions. While this is still fiction, AWS has every incentive to make sure we have great insights into what’s happening in our infrastructure with Lambda.

Conclusion

AWS Lambda is one more abstraction that reduces what we have to build and maintain to the absolute minimum. Because it gives us a scalable, event-based infrastructure, we can split up tasks that we might not have wanted to split previously because of operational complexity.

Many tasks that are handled inside one monolithic application today can be split into distinct Lambda functions. Doing so will help build a service-oriented architecture without the additional operational overhead. Lambda also takes care of the messaging layer between services, which reduces the complexity of another piece in the service-oriented architecture puzzle.

We’re currently working with the AWS Lambda Team to build an integration with Codeship to make getting started with Lambda even easier. Sign up for the Preview and give Lambda a try. It’s going to be a big part of building on AWS in the future.

| Reference: | AWS Lambda: An Introduction from our JCG partner Florian Motlik at the Codeship Blog. |

Another great feature AWS Lambda provides is the ability to build serverless REST services. This sample service uses Lambda, API Gateway and DynamoDB to build a REST service in Java.