GPGPU Java Programming

Before we dive into coding, some background. There are two competing GPGPU SDKs: OpenCL and CUDA. OpenCL is an open standard supported by all GPU vendors (namely AMD, NVIDIA and Intel), while CUDA is NVIDIA specific and will work only on NVIDIA cards. Both SDKs support C/C++ code, which of course leaves us Java developers out in the cold. So far there is no pure Java OpenCL or CUDA support. This is not much help for the Java programmer who needs to take advantage of a GPU’s massive parallelism potential, unless she fiddles with the Java Native Interface. Fortunately, there are some Java tools out there that ease the pain of GPGPU Java programming.

The two most popular (IMHO) are jocl and jcuda. With these tools you still have to write C/C++ code, but only for the part that will be executed on the GPU, which reduces the effort considerably.

This time I will take a look at jcuda and see how we can write a simple GPGPU program.

Let’s start by setting up a CUDA GPGPU Linux development environment (although Windows and Mac environments shouldn’t be hard to set up either):

Step 1: Install an NVIDIA CUDA enabled GPU in your computer. The NVIDIA Developers’ site has a list of CUDA enabled GPUs. New NVIDIA GPUs are almost certainly CUDA enabled, but just in case check the card’s specification to make sure.

Step 2: Install the NVIDIA Driver and CUDA SDK. Download them and find installation instructions from here.

Step 3: Go to directory ~/NVIDIA_GPU_Computing_SDK/C/src/deviceQuery and run make.

Step 4: If the compilation was successful go to directory ~/NVIDIA_GPU_Computing_SDK/C/bin/linux/release and run the file deviceQuery. You will get lots of technical information about your card.

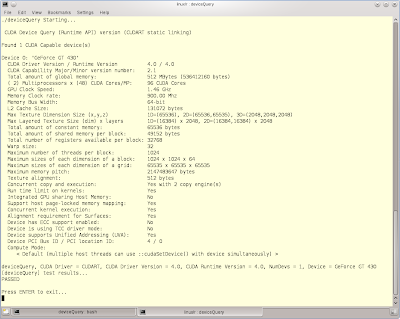

Here is what I got for my GeForce GT 430 card:

Notice the 2 Multiprocessors with 48 CUDA Cores each totaling 96 cores, not bad for a low end video card worth around 40 euros!!!!

Step 5: Now that you have a CUDA environment let’s write and compile a CUDA program in C. Write the following code and save it as multiply.cu

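    #include <cstdio>

    // Kernel: runs on the GPU and stores a * b in device memory at c.
    __global__ void multiply(float a, float b, float *c)
    {
        *c = a * b;
    }

    int main()
    {
        float a, b, c;
        float *c_pointer;   // device pointer that will hold the result

        a = 1.35f;
        b = 2.5f;

        // Allocate GPU memory for the result
        cudaMalloc((void**)&c_pointer, sizeof(float));

        // Launch the kernel with 1 block of 1 thread
        multiply<<<1,1>>>(a, b, c_pointer);

        // Copy the result from GPU memory back to host memory
        cudaMemcpy(&c, c_pointer, sizeof(float), cudaMemcpyDeviceToHost);

        /*** This is C!!! You manage your garbage on your own! ***/
        cudaFree(c_pointer);

        printf("Result = %f\n", c);
        return 0;
    }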

Compile it using the cuda compiler and run it:

    $ nvcc multiply.cu -o multiply
    $ ./multiply
    Result = 3.375000
    $

So what does the above code do? The multiply function with the __global__ qualifier is called the kernel and is the actual code that will be executed in the GPU. The code in the main function is executed in the CPU as normal C code although there are some semantic differences:

- The multiply function is called with the <<<1,1>>> brackets. The two numbers inside the brackets tell CUDA how many times the code should be executed: the first is the number of thread blocks in the grid and the second is the number of threads in each block. CUDA enables us to arrange blocks and threads in one, two, or even three dimensions. The numbers in this example indicate a single one-dimensional block containing a single thread, thus our code will be executed 1×1=1 time.

- The cudaMalloc, cudaMemcpy, and cudaFree functions are used to handle the GPU memory in a similar fashion as we handle the computer’s normal memory in C. The cudaMemcpy function is important since the GPU has its own RAM, and before we can process any data in the kernel we need to load it into GPU memory. Of course we also need to copy the results back to normal memory when done.
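To make the launch configuration a bit more concrete, here is a small plain-Java sketch (no GPU or jcuda involved) that mimics how a one-dimensional <<<blocks, threadsPerBlock>>> launch enumerates its threads. The class and method names are made up for this illustration, and on a real device the threads run in parallel rather than in a loop.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of CUDA or jcuda: a CPU-side model of a 1D launch.
final class LaunchModel {

    // Number of kernel invocations produced by <<<blocks, threadsPerBlock>>>.
    static int totalThreads(int blocks, int threadsPerBlock) {
        return blocks * threadsPerBlock;
    }

    // Global index each thread would compute as blockIdx.x * blockDim.x + threadIdx.x.
    static List<Integer> globalIndices(int blocks, int threadsPerBlock) {
        List<Integer> indices = new ArrayList<>();
        for (int blockIdx = 0; blockIdx < blocks; blockIdx++) {
            for (int threadIdx = 0; threadIdx < threadsPerBlock; threadIdx++) {
                indices.add(blockIdx * threadsPerBlock + threadIdx);
            }
        }
        return indices;
    }

    public static void main(String[] args) {
        System.out.println(totalThreads(1, 1));    // the <<<1,1>>> launch: prints 1
        System.out.println(globalIndices(2, 3));   // prints [0, 1, 2, 3, 4, 5]
    }
}
```

With <<<1,1>>> there is exactly one thread with global index 0, which is why the kernel in our example can simply write to *c without looking at its index.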

Now that we have the basics of how to execute code on the GPU, let’s see how we can run GPGPU code from Java. Remember, the kernel code will still be written in C, but at least the main function is now Java code with the help of jcuda.

Download the jcuda binaries, unzip them and make sure that the directory containing the .so files (or .dll for Windows) is either given in the java.library.path parameter of the JVM or appended to the LD_LIBRARY_PATH environment variable (or your PATH variable in Windows). Similarly, the jcuda-xxxxxxx.jar file must be in your classpath during compilation and execution of your Java program.
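If the native libraries are not found at run time, a quick way to see where the JVM is actually looking is to print java.library.path. The sketch below uses only the standard library; the class name is made up for this article and it is meant as a rough diagnostic, not as part of jcuda.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical diagnostic, not part of jcuda: lists where the JVM looks for native libraries.
final class LibraryPathCheck {

    // Splits a path list such as the value of java.library.path into its non-empty entries.
    static List<String> entries(String pathList) {
        List<String> result = new ArrayList<>();
        if (pathList == null) {
            return result;
        }
        for (String entry : pathList.split(File.pathSeparator)) {
            if (!entry.isEmpty()) {
                result.add(entry);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Print every directory on the library path and flag the ones that do not exist.
        for (String dir : entries(System.getProperty("java.library.path"))) {
            System.out.println(dir + (new File(dir).isDirectory() ? "" : "  (missing)"));
        }
    }
}
```

Run it with the same JVM options and environment you use for your jcuda program; the directory holding the jcuda .so (or .dll) files should show up in the list.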

So now that we have jcuda set up, let’s have a look at our jcuda-compatible kernel:

    extern "C"
    __global__ void multiply(float *a, float *b, float *c)
    /*************** Kernel Code **************/
    {
        c[0] = a[0] * b[0];
    }

You will notice the following differences from the previous kernel method:

- We use the extern "C" qualifier to tell the compiler not to mangle the multiply method name, so we can look it up by its original name.

- We use arrays (pointers) instead of primitives for a, b and c. Java cannot hand out the address of a primitive, so in jcuda data are passed back and forth to the GPU as arrays of things such as floats, integers etc.
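The array-based signature can be mirrored in plain Java. The sketch below is a CPU-only stand-in for the kernel (the class name is made up for illustration); it shows why one-element arrays work as in/out parameters where Java primitives, which are always passed by value, would not.

```java
// CPU-only stand-in for the multiply kernel; hypothetical, for illustration only.
final class MultiplyReference {

    // Mirrors the kernel signature: inputs and output are one-element arrays.
    static void multiply(float[] a, float[] b, float[] c) {
        c[0] = a[0] * b[0];
    }

    public static void main(String[] args) {
        float[] a = {1.35f};
        float[] b = {2.5f};
        float[] c = new float[1];   // written by multiply, read by the caller
        multiply(a, b, c);
        System.out.println("Result = " + c[0]);   // prints "Result = 3.375"
    }
}
```

A reference version like this is also handy later on for checking that the GPU returns what you expect.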

Save this file as multiply2.cu. This time we don’t want to compile the file as an executable but rather as a CUDA library that will be called from within our Java program. We can compile our kernel either as a PTX file or a CUBIN file. PTX files are human-readable and contain assembly-like code that is compiled on the fly. CUBIN files are compiled CUda BINaries and can be called directly without on-the-fly compilation. Unless you need optimal start-up performance, PTX files are preferable because they are not tied to the specific Compute Capability of the GPU they were compiled for, while CUBIN files will not run on GPUs with lesser compute capability.

In order to compile our kernel type the following:

$ nvcc -ptx multiply2.cu -o multiply2.ptx
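If you would rather trigger this step from Java (for example as part of a build or at program start-up), the same nvcc command can be launched with ProcessBuilder. This is only a sketch: the class and method names are hypothetical, it assumes nvcc is on your PATH, and it uses just the -ptx and -o options shown above.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical build helper: assembles and runs the nvcc command shown above.
final class PtxCommand {

    // "multiply2.cu" -> [nvcc, -ptx, multiply2.cu, -o, multiply2.ptx]
    static List<String> forSource(String cuFile) {
        if (!cuFile.endsWith(".cu")) {
            throw new IllegalArgumentException("expected a .cu file: " + cuFile);
        }
        String ptxFile = cuFile.substring(0, cuFile.length() - 3) + ".ptx";
        return Arrays.asList("nvcc", "-ptx", cuFile, "-o", ptxFile);
    }

    public static void main(String[] args) throws Exception {
        List<String> command = forSource("multiply2.cu");
        System.out.println(String.join(" ", command));
        // Assumes nvcc is on the PATH; inheritIO forwards the compiler output to the console.
        int exitCode = new ProcessBuilder(command).inheritIO().start().waitFor();
        System.out.println("nvcc exit code: " + exitCode);
    }
}
```

An exit code other than 0 means the kernel did not compile, in which case there is no point in trying to load the PTX file.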

Having successfully created our PTX file, let’s have a look at the Java equivalent of the main method we used in our C example:

    import static jcuda.driver.JCudaDriver.*;
    import jcuda.Pointer;
    import jcuda.Sizeof;
    import jcuda.driver.*;

    public class MultiplyJ {

        public static void main(String[] args) {
            float[] a = new float[] {1.35f};
            float[] b = new float[] {2.5f};
            float[] c = new float[1];
            // Initialize the driver API and create a context on the first GPU
            cuInit(0);
            CUcontext pctx = new CUcontext();
            CUdevice dev = new CUdevice();
            cuDeviceGet(dev, 0);
            cuCtxCreate(pctx, 0, dev);
            // Load the PTX module and look up the kernel by name
            CUmodule module = new CUmodule();
            cuModuleLoad(module, "multiply2.ptx");
            CUfunction function = new CUfunction();
            cuModuleGetFunction(function, module, "multiply");
            // Allocate GPU memory for a and copy it to the device
            CUdeviceptr a_dev = new CUdeviceptr();
            cuMemAlloc(a_dev, Sizeof.FLOAT);
            cuMemcpyHtoD(a_dev, Pointer.to(a), Sizeof.FLOAT);
            // Same for b
            CUdeviceptr b_dev = new CUdeviceptr();
            cuMemAlloc(b_dev, Sizeof.FLOAT);
            cuMemcpyHtoD(b_dev, Pointer.to(b), Sizeof.FLOAT);
            // The result only needs GPU memory for now
            CUdeviceptr c_dev = new CUdeviceptr();
            cuMemAlloc(c_dev, Sizeof.FLOAT);
            // Pack the kernel arguments: one pointer per kernel parameter
            Pointer kernelParameters = Pointer.to(
                Pointer.to(a_dev),
                Pointer.to(b_dev),
                Pointer.to(c_dev)
            );
            // Launch a 1x1x1 grid of 1x1x1 blocks, then copy the result back
            cuLaunchKernel(function, 1, 1, 1, 1, 1, 1, 0, null, kernelParameters, null);
            cuMemcpyDtoH(Pointer.to(c), c_dev, Sizeof.FLOAT);
            // Memory allocated with cuMemAlloc is released with cuMemFree
            cuMemFree(a_dev);
            cuMemFree(b_dev);
            cuMemFree(c_dev);

            System.out.println("Result = " + c[0]);
        }
    }

OK, that looks like a lot of code for just multiplying two numbers, but remember there are limitations regarding Java and C pointers. So we start by converting our a, b and c parameters into arrays named a, b and c, each containing only one float number.

Next, with the calls from cuInit to cuCtxCreate, we tell jcuda that we will be using the first GPU in our system (it is possible to have more than one GPU in high-end systems).

With cuModuleLoad and cuModuleGetFunction we tell jcuda where our PTX file is and the name of the kernel method we would like to use (in our case multiply).

Things get interesting when we create a_dev, an instance of a special jcuda class, CUdeviceptr, which acts as a pointer placeholder. In the cuMemAlloc call that follows we use the CUdeviceptr we just created to allocate GPU memory. Note that if our array had more than one item we would need to multiply the Sizeof.FLOAT constant by the number of elements in our array. Finally, with cuMemcpyHtoD we copy the contents of our first array to the GPU. Similarly, we create our pointer and copy the contents to the GPU RAM for our second array (b). For our output array (c) we only need to allocate GPU memory for now.
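As a small illustration of that size calculation, here is a plain-Java sketch. The helper class is hypothetical; it relies on Float.BYTES from the standard library, which is 4, the same value as jcuda’s Sizeof.FLOAT.

```java
// Hypothetical helper: byte counts for float buffers of any length.
final class BufferSize {

    // Float.BYTES is 4, the same value jcuda exposes as Sizeof.FLOAT.
    // The result is a long so that large element counts cannot overflow.
    static long floatBytes(int elementCount) {
        return (long) elementCount * Float.BYTES;
    }

    public static void main(String[] args) {
        System.out.println(floatBytes(1));      // prints 4, as for our one-element arrays
        System.out.println(floatBytes(1024));   // prints 4096
    }
}
```

For an array named data (an assumed name), the value to pass to both the allocation and the copy calls would be floatBytes(data.length).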

We then create a Pointer object, kernelParameters, that will hold all the parameters we want to pass to our multiply method.

We execute our kernel code by calling the jcuda method cuLaunchKernel, passing the function and the parameter pointer as arguments. The first six parameters after the function parameter define the dimensions of the grid (a grid is a group of blocks), given as the number of blocks in x, y and z, and the dimensions of each block, given as the number of threads in x, y and z. In our example they are all 1, as we will execute the kernel only once. The next two parameters are 0 and null: the first is the number of bytes of dynamic shared memory (memory that can be shared among the threads of a block), in our case none, and the second is the stream to launch on, where null selects the default stream. The next parameter contains the Pointer object we created containing our a, b, c device pointers, and the last parameter is for additional options.
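Once an array has more than one element, the grid argument is usually derived from the element count rather than hard-coded. A common way to do it, sketched here in plain Java with a hypothetical helper class and an assumed block size, is a ceiling division so that the blocks cover every element.

```java
// Hypothetical helper: picks a one-dimensional grid size that covers n elements.
final class GridSize {

    // Ceiling division: the smallest number of blocks with blocks * blockSize >= n.
    static int blocksFor(int n, int blockSize) {
        if (n < 0 || blockSize <= 0) {
            throw new IllegalArgumentException("n must be >= 0 and blockSize > 0");
        }
        // Computed in long so that n + blockSize - 1 cannot overflow.
        return (int) (((long) n + blockSize - 1) / blockSize);
    }

    public static void main(String[] args) {
        System.out.println(blocksFor(1, 1));        // prints 1, our single-thread launch
        System.out.println(blocksFor(1000, 256));   // prints 4
        System.out.println(blocksFor(1025, 256));   // prints 5
    }
}
```

Because the last block may be only partly used (4 × 256 = 1024 threads for 1000 elements), a kernel launched this way has to check that its index is smaller than the element count before touching the array.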

After our kernel returns we simply copy the contents of c_dev to our c array, free all the memory we allocated on the GPU and print the result stored in c[0], which is of course the same as with our C example.

Here is how we compile and execute the MultiplyJ.java program (assuming the multiply2.ptx is in the same directory):

    $ javac -cp ~/GPGPU/jcuda/JCuda-All-0.4.0-beta1-bin-linux-x86_64/jcuda-0.4.0-beta1.jar MultiplyJ.java
    $ java -cp ~/GPGPU/jcuda/JCuda-All-0.4.0-beta1-bin-linux-x86_64/jcuda-0.4.0-beta1.jar:. MultiplyJ
    Result = 3.375
    $

Note that in this example the directory ~/GPGPU/jcuda/JCuda-All-0.4.0-beta1-bin-linux-x86_64 is already in my LD_LIBRARY_PATH so I don’t need to set the java.library.path parameter on the JVM.

Hopefully by now the mechanics of jcuda are clear, although we didn’t really touch on the GPU’s true power, which is massive parallelism. In a future article I will provide an example of how to run parallel threads in CUDA using Java, accompanied by an example of what NOT to run on the GPU. GPU processing makes sense for very specialized tasks, and most tasks are better left to our old and trusted CPU.

Reference: GPGPU Java Programming from our W4G partner Spyros Sakellariou.
