Making the Reactive Queue durable with Akka Persistence

Some time ago I wrote how to implement a reactive message queue with Akka Streams. The queue supports streaming send and receive operations with back-pressure, but has one downside: all messages are stored in-memory, and hence in case of a restart are lost.

But this can be easily solved with the experimental akka-persistence module, which just got an update in Akka 2.3.4.

Queue actor refresher

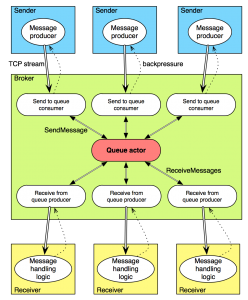

To make the queue durable, we only need to change the queue actor; the reactive/streaming parts remain intact. Just as a reminder, the reactive queue consists of:

- a single queue actor, which holds an internal priority queue of messages to be delivered. The queue actor accepts actor-messages to send, receive and delete queue-messages

- a Broker, which creates the queue actor, listens for connections from senders and receivers, and creates the reactive streams when a connection is established

- a Sender, which sends messages to the queue (for testing, one message each second). Multiple senders can be started. Messages are sent only if they can be accepted (back-pressure from the broker)

- a Receiver, which receives messages from queue, as they become available and as they can be processed (back-pressure from the receiver).

Going persistent (remaining reactive)

The changes needed are quite minimal.

First of all, the QueueActor needs to extend PersistentActor, and define two methods:

receiveCommand, which defines the “normal” behaviour when actor-messages (commands) arrivereceiveRecover, which is used during recovery only, and where replayed events are sent

But in order to recover, we first need to persist some events! This should of course be done when handling the message queue operations.

For example, when sending a message, a MessageAdded event is persisted using persistAsync:

def handleQueueMsg: Receive = {

case SendMessage(content) =>

val msg = sendMessage(content)

persistAsync(msg.toMessageAdded) { msgAdded =>

sender() ! SentMessage(msgAdded.id)

tryReply()

}

// ...

}persistAsync is one way of persisting events using akka-persistence. The other, persist (which is also the default one), buffers subsequent commands (actor-messages) until the event is persisted; this is a bit slower, but also easier to reason about and remain consistent. However in case of the message queue such behaviour isn’t necessary. The only guarantee that we need is that the message send is acknowledged only after the event is persisted; and that’s why the reply is sent in the after-persist event handler. You can read more about persistAsync in the docs.

Similarly, events are persisted for other commands (actor-messages, see QueueActorReceive). Both for deletes and receives we are using persistAsync, as the queue aims to provide an at-least-once delivery guarantee.

The final component is the recovery handler, which is defined in QueueActorRecover (and then used in QueueActor). Recovery is quite simple: the events correspond to adding a new message, updating the “next delivery” timestamp or deleting.

The internal representation uses both a priority queue and a by-id map for efficiency, so when the events are handled during recovert we only build the map, and use the RecoveryCompleted special event to build the queue as well. The special event is sent by akka-persistence automatically.

And that’s all! If you now run the broker, send some messages, stop the broker, start it again, you’ll see that the messages are recovered, and indeed, they get received if a receiver is run.

The code isn’t production-ready of course. The event log is going to constantly grow, so it would certainly make sense to make use of snapshots, plus delete old events/snapshots to make the storage size small and recovery fast.

Replication

Now that the queue is durable, we can also have a replicated persistent queue almost for free: we simply need to use a different journal plugin! The default one relies on LevelDB and writes data to the local disk. Other implementations are available: for Cassandra, HBase, and Mongo.

Making a simple switch of the persistence backend we can have our messages replicated across a cluster.

Summary

With the help of two experimental Akka modules, reactive streams and persistence, we have been able to implement a durable, reactive queue with a quite minimal amount of code. And that’s just the beginning, as the two technologies are only starting to mature!

- If you’d like to modify/fork the code, it is available on Github.

| Reference: | Making the Reactive Queue durable with Akka Persistence from our JCG partner Adam Warski at the Blog of Adam Warski blog. |