Guidance for Building a Control Plane for Envoy Part 3 – Domain Specific Configuration API

This is part 3 of a series that explores building a control plane for Envoy Proxy.

In this blog series, we’ll take a look at the following areas:

- Adopting a mechanism to dynamically update Envoy’s routing, service discovery, and other configuration

- Identifying what components make up your control plane, including backing stores, service discovery APIs, security components, etc.

- Establishing any domain-specific configuration objects and APIs that best fit your use cases and organization (this entry)

- Thinking of how best to make your control plane pluggable where you need it

- Options for deploying your various control-plane components

- Thinking through a testing harness for your control plane

In the previous entry we evaluated the components you may need for your control plane. In this section, we explore what a domain-specific API might look like for your control plane.

Establishing your control-plane interaction points and API surface

Once you’ve thought through what components might make up your control-plane architecture (see the previous entry), you’ll want to consider exactly how your users will interact with the control plane and, maybe even more importantly, who your users will be. To answer this, you’ll have to decide what roles your Envoy-based infrastructure will play and how traffic will traverse your architecture. It could be a combination of:

- API Management gateway (north/south)

- Simple Kubernetes edge load balancer / reverse proxy / ingress controller (north/south)

- Shared services proxy (east/west)

- Per-service sidecar (east/west)

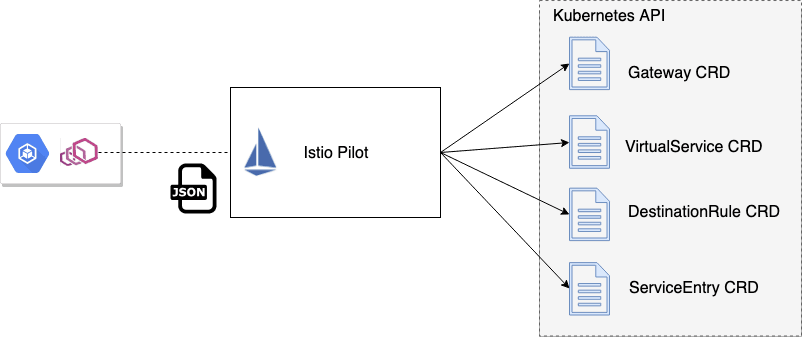

For example, the Istio project is intended to be a platform service mesh that platform operators can build tools upon to drive control of the network between your services and applications. Istio’s domain-specific configuration objects for configuring Envoy center around the following objects:

- Gateway – define a shared proxy component (capable of cluster ingress) that specifies protocol, TLS, port, and host/authority that can be used to load balance and route traffic

- VirtualService – rules for how to interact with a specific service; can specify things like route matching behavior, timeouts, retries, etc

- DestinationRule – rules for how to interact with a specific service in terms of circuit breaking, load balancing, mTLS policy, subset definitions of a service, etc.

- ServiceEntry – explicitly add a service to Istio’s service registry

Running in Kubernetes, all of those configuration objects are implemented as CustomResourceDefinitions.

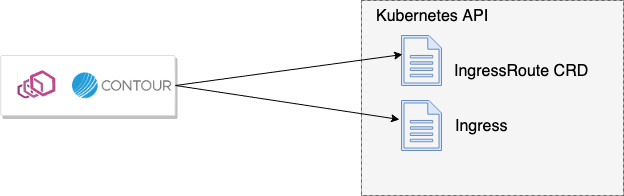

Heptio/VMware Contour is intended as a Kubernetes ingress gateway and has a simplified domain-specific configuration model with both a CustomResourceDefinition (CRD) flavor and support for the Kubernetes Ingress resource:

- IngressRoute – a Kubernetes CRD that provides a single location to specify configuration for the Contour proxy

- Ingress resource support – allows you to specify annotations on your Kubernetes Ingress resource if you’re into that kind of thing

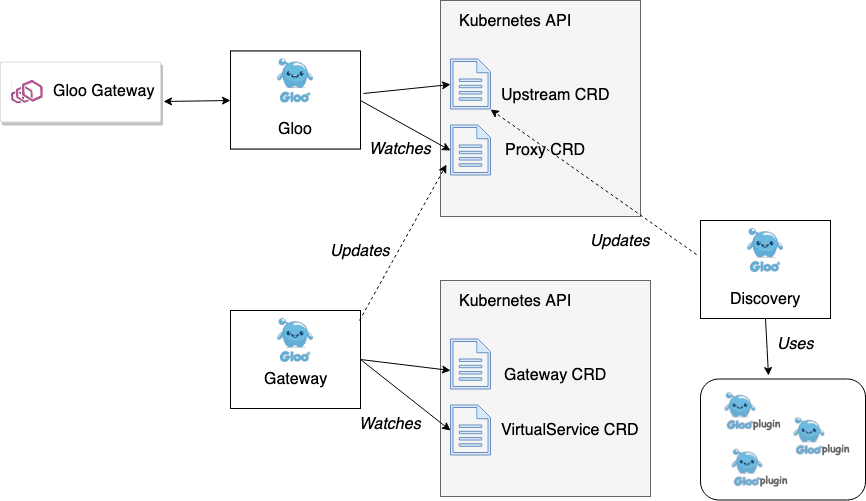

On the Gloo project we’ve made the decision to split the available configuration objects into two levels:

- The user-facing configurations, designed for the best ergonomics around user use cases while leaving options for extensibility (more on that in the next section)

- The lower-level configuration that abstracts Envoy but is not expressly intended for direct user manipulation. The higher-level objects get transformed to this lower-level representation which is ultimately what’s used to translate to Envoy xDS APIs. The reasons for this will be clear in the next section.

For users, Gloo focuses on teams owning their routing configurations since the semantics of the routing (and the available transformations/aggregation capabilities) are heavily influenced by the developers of APIs and microservices. For the user-facing API objects, we use:

- Gateway – specify the routes and API endpoints available at a specific listener port as well as what security accompanies each API

- VirtualService – groups API routes into a set of “virtual APIs” that can route to backend functions (gRPC, HTTP/1, HTTP/2, Lambda, etc.); gives the developer control over how a route proceeds with different transformations in an attempt to decouple the front-end API from what exists in the backend (and from any breaking changes a backend might introduce)

Note that these are different from the Istio objects of the same names.

The user-facing API objects in Gloo drive the lower-level objects which are then used to ultimately derive the Envoy xDS configurations. For example, Gloo’s lower-level, core API objects are:

- Upstream – captures the details about backend clusters and the functions that are exposed on them. You can loosely associate a Gloo Upstream with an Envoy cluster, with one big difference: an Upstream can understand the actual service functions available at a specific endpoint (in other words, it knows about /foo/bar and /bar/wine, including their expected parameters and parameter structure, rather than just hostname:port). More on that in a second.

- Proxy – the main object that abstracts all of the configuration we can apply to Envoy. This includes listeners, virtual hosts, routes, and upstreams. The higher-level objects (VirtualService, Gateway, etc.) are used to drive this lower-level Proxy object.

The split between the two levels of configuration for the Gloo control plane allows us to extend the Gloo control-plane capabilities while keeping a simple abstraction to configure Envoy. This is explained in more detail in part 4 of this series.
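To make the two-level idea concrete, here is a minimal sketch in Python. It is not Gloo's actual schema or translation code: the class names, fields, and the `to_proxy` / `to_envoy_route_configs` helpers are all hypothetical, chosen only to mirror the shape described above. The final output borrows field names from Envoy's RouteConfiguration (`virtual_hosts`, `domains`, `match.prefix`, `route.cluster`, `route.timeout`), rendered as plain dictionaries rather than real xDS protobufs.

```python
from dataclasses import dataclass, field
from typing import List


# --- User-facing level (hypothetical objects, not Gloo's real schema) ---
@dataclass
class Route:
    path_prefix: str
    upstream: str              # name of the backend this route targets
    timeout_seconds: int = 15


@dataclass
class VirtualService:
    name: str
    domains: List[str]
    routes: List[Route]


@dataclass
class Gateway:
    name: str
    port: int
    virtual_services: List[VirtualService]


# --- Lower level: one object holding everything a proxy needs ---
@dataclass
class Proxy:
    listeners: List[dict] = field(default_factory=list)


def to_proxy(gateways: List[Gateway]) -> Proxy:
    """Collapse the user-facing objects into the intermediate Proxy."""
    proxy = Proxy()
    for gw in gateways:
        virtual_hosts = []
        for vs in gw.virtual_services:
            routes = [
                {"prefix": r.path_prefix, "cluster": r.upstream,
                 "timeout": r.timeout_seconds}
                for r in vs.routes
            ]
            virtual_hosts.append(
                {"name": vs.name, "domains": vs.domains, "routes": routes}
            )
        proxy.listeners.append(
            {"name": gw.name, "port": gw.port, "virtual_hosts": virtual_hosts}
        )
    return proxy


def to_envoy_route_configs(proxy: Proxy) -> List[dict]:
    """Render one RouteConfiguration-shaped dict per listener."""
    configs = []
    for listener in proxy.listeners:
        virtual_hosts = []
        for vh in listener["virtual_hosts"]:
            routes = [
                {
                    "match": {"prefix": rt["prefix"]},
                    "route": {"cluster": rt["cluster"],
                              "timeout": f"{rt['timeout']}s"},
                }
                for rt in vh["routes"]
            ]
            virtual_hosts.append(
                {"name": vh["name"], "domains": vh["domains"], "routes": routes}
            )
        configs.append(
            {"name": f"{listener['name']}-routes", "virtual_hosts": virtual_hosts}
        )
    return configs
```

Because only `to_envoy_route_configs` knows about Envoy's field names, new user-facing objects can be added by teaching `to_proxy` about them, without touching the Envoy-facing step. That separation is the point of the intermediate object in this sketch.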

In the previous three examples (Istio, Contour, Gloo) each respective control plane exposes a set of domain-specific configuration objects that are user focused but are ultimately transformed into Envoy configuration and exposed over the xDS data plane API. This provides a decoupling between Envoy and a user’s predisposed way of working and their workflows. Although we’ve seen a few examples of creating a more user and workflow focused domain-specific configuration for abstracting Envoy, that’s not the only way to build up an Envoy control plane. Booking.com has a great presentation on how they stayed much closer to the Envoy configurations and used an engine to just merge all the different teams’ configuration fragments into the actual Envoy configuration.
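The fragment-merging alternative can be sketched in a few lines of Python. This is an illustration of the general idea only, not Booking.com's engine: the fragment layout (a `team` key plus optional `listeners` and `clusters` lists) and the `merge_fragments` function are assumptions made for this example. The output is shaped like the `static_resources` section of an Envoy bootstrap file.

```python
from typing import List


def merge_fragments(fragments: List[dict]) -> dict:
    """Merge per-team fragments into one bootstrap-shaped dict.

    Each fragment may contribute "listeners" and "clusters". Duplicate
    resource names are rejected so that one team cannot silently
    overwrite a resource owned by another team.
    """
    merged = {"static_resources": {"listeners": [], "clusters": []}}
    owners = {"listeners": {}, "clusters": {}}  # kind -> resource name -> team

    for fragment in fragments:
        team = fragment["team"]
        for kind in ("listeners", "clusters"):
            for resource in fragment.get(kind, []):
                name = resource["name"]
                if name in owners[kind]:
                    raise ValueError(
                        f"{kind[:-1]} '{name}' from team '{team}' is already "
                        f"defined by team '{owners[kind][name]}'"
                    )
                owners[kind][name] = team
                merged["static_resources"][kind].append(resource)
    return merged
```

Here the teams write something very close to raw Envoy configuration, and the control plane's job shrinks to validation and assembly. The trade-off is that every team needs to understand Envoy's own configuration model.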

Alongside considering a domain-specific configuration, you should consider the specific touch points of your API/object model. For example, Kubernetes is very YAML and resource-file focused. You could build a more domain-specific CLI tool (like OpenShift did with the oc CLI, Istio did with istioctl, and Gloo did with glooctl).
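As a rough illustration of a CLI touch point, the sketch below uses Python's standard `argparse` to turn a short command into a resource document. The tool name `cpctl`, the `add-route` subcommand, its flags, and the output layout are all hypothetical; they do not reproduce the behavior of oc, istioctl, or glooctl.

```python
import argparse
import json
from typing import List


def build_parser() -> argparse.ArgumentParser:
    """Define a tiny, hypothetical CLI with a single subcommand."""
    parser = argparse.ArgumentParser(prog="cpctl")
    sub = parser.add_subparsers(dest="command", required=True)

    route = sub.add_parser("add-route", help="describe a route on a virtual service")
    route.add_argument("--virtual-service", required=True)
    route.add_argument("--prefix", required=True)
    route.add_argument("--upstream", required=True)
    return parser


def render(argv: List[str]) -> str:
    """Parse the arguments and return the resource as a JSON document."""
    args = build_parser().parse_args(argv)
    resource = {
        "kind": "VirtualService",
        "name": args.virtual_service,
        "routes": [{"prefix": args.prefix, "upstream": args.upstream}],
    }
    return json.dumps(resource, indent=2, sort_keys=True)
```

Calling `render(["add-route", "--virtual-service", "shop", "--prefix", "/cart", "--upstream", "cart-service"])` returns the JSON text for a one-route resource. A real tool would then submit that document to the control plane's API or write it to a file for review.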

Takeaway

When you build an Envoy control plane, you’re doing so with a specific intent or set of architectures/users in mind. You should take this into account and build the right ergonomic, opinionated domain-specific API that suits your users and improves your workflow for operating Envoy. The Gloo team recommends exploring existing Envoy control plane implementations and only building your own if none of the others are suitable. Gloo’s control plane lays the foundation to be extended and customized. As we’ll see in the next entry, it’s possible to build a control plane that is fully extensible to fit many different users, workflows, and operational constraints.

Published on Java Code Geeks with permission by Christian Posta, partner at our JCG program. See the original article here: Guidance for Building a Control Plane for Envoy Part 3 – Domain Specific Configuration API. Opinions expressed by Java Code Geeks contributors are their own.