What’s Minimum: Thinking About Minimum Viable Experiments

When I talk about Minimum Viable Products or Minimum Viable Experiments, people often tell me that their minimum is several weeks (or months) long. They can’t possibly release anything without doing a ton of work.

I ask them questions, to see if they are talking about a Minimum Indispensable Feature Set or a Minimum Adoptable Feature Set instead of an MVE or an MVP. Often, they are.

Yes, it’s possible you need a number of stories to create an entire feature set before you release it to your entire customer base. And, that’s not an MVP or an MVE.

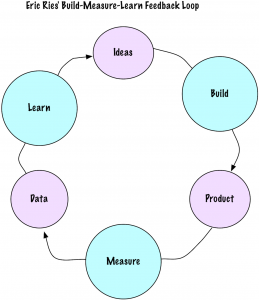

Do you know about Eric Ries’ Build-Measure-Learn loop? Or the Cynefin idea of small, safe-to-fail experiments? Here’s the thinking behind both of those ideas:

- You have ideas you could implement in your product. If you are like my clients, you have more ideas than you could implement ever. This is a good thing!

- You Build an idea for one product.

- You Measure the result with data.

- You Learn from that data to generate/reduce/change your ideas.

- Do it again until you’ve learned enough.

When I think about the Build-Measure-Learn loop and apply it to the idea of a Minimum Viable Experiment, I often discover these possibilities:

- We have an MVE now. We need to define how to measure it and use the data.

- We don’t have to do much to collect some data.

- We can ask this question: What do we want to know and why? What is the benefit of gathering that data?

Here’s an example of how this affected a recent client. They have an embedded system. They thought that if the embedded part booted faster, they could find more applications for the system. In embedded software, speed is often a factor.

They chose one client, who had systems now. The Product Manager visited the client and asked about other vertical applications within the organization. Did they have a need for something like this system?

Yes, they did. They were concerned about speed, not just boot speed, but application processing speed.

The Product Manager asked if they were willing to be part of an MVE. He explained that the team would watch how they implemented and used the embedded system. Yes, they would all sign non-disclosures. The client also had to know that the team might not actually implement for real what the experiment was.

The customer agreed. The team implemented four very small performance enhancements—only through the happy paths, no alternative/error paths—and visited the customer to see what would happen. It took the team three days to do this.

The team visited for one day to watch how the client’s engineers used the product.

They were astounded. Boot speed was irrelevant. One specific path through the processing was highly relevant. The other three were irrelevant for this specific customer.

This particular MVE was a little on the expensive side. It took a team-week to develop, measure, and learn. There was some paperwork that both sides had to manage. If you have a different kind of product, it might take you less time.

And, look how inexpensive that week was. That week taught the team what one vertical product line needed and didn’t need. They managed to avoid all those “necessary” features. This client didn’t need them. It turned out a different kind of vertical needed two of them, and no one appears to need the remaining one.

The Product Manager was able to prune many of the ideas in the backlog for this vertical market. The Product Owner knew and (knew why) which features were more important and how to write stories and rank them.

That’s one example of an MVE. Your experiments will probably look different. What’s key here is this question: What is the smallest thing you can measure that will provide you value so you can make a decision for the product?

| Reference: | What’s Minimum: Thinking About Minimum Viable Experiments from our JCG partner Johanna Rothman at the Managing Product Development blog. |