SELECT statements batch fetching with JDBC and Hibernate

Introduction

Now that I covered Hibernate batch support for INSERT, UPDATE and DELETE statements, it’s time to analyze SELECT statements result set batch fetching.

JDBC ResultSet fetching

The JDBC ResultSet offers a client-side Proxy cursor for fetching the current statement return data. When the statement gets executed, the result must be transferred from the database cursor to the client-side one. This operation can either be done at once or on demand.

There are three types of ResultSet cursors:

| Cursor Type | Description |

|---|---|

| TYPE_FORWARD_ONLY | This is the default ResultSet cursor type. The result set can only be moved forward and the resulted data can either be fetched at once or retrieved while the cursor is being iterated. The database can decide to fetch the data as it was available at the time the query started or as it is upon fetching. |

| TYPE_SCROLL_INSENSITIVE | The result set can be scrolled both forward and backward and the resulted data is insensitive to concurrent changes occurring while the cursor is still open |

| TYPE_SCROLL_SENSITIVE | The result set can be scrolled both forward and backward and the resulted data is sensitive to concurrent changes occurring while the cursor is still open. The data is therefore fetched on demand as opposed to being retrieved from a database cursor cache |

Not all database drivers implement all cursor types and the batch fetching behavior is controlled through the JDBC Statement fetchSize property, which according to the Javadoc:

Gives the JDBC driver a hint as to the number of rows that should be fetched from the database when more rows are needed for ResultSet objects generated by this Statement. If the value specified is zero, then the hint is ignored. The default value is zero.

The default fetching strategy is therefore database specific and from the application performance point of view, this aspect is very important when tuning the data access layer:

- OracleBy default, when Oracle JDBC runs a query, it retrieves a result set of 10 rows at a time from the database cursor.According to Oracle JDBC Driver documentation:What is “reasonable” depends on the details of the application. Oracle suggests the fetchSize be no more than 100, although in some cases larger size may be appropriate. A fetchSize of 100 may be inappropriately large for some queries even when many rows are returned.

- MySQLBy default, ResultSets are completely retrieved and stored in memory. In most cases this is the most efficient way to operate and, due to the design of the MySQL network protocol, is easier to implement.

- SQL ServerNormally, when the Microsoft JDBC Driver for SQL Server executes a query, the driver retrieves all of the results from the server into application memory. Although this approach minimizes resource consumption on the SQL Server, it can throw an OutOfMemoryError in the JDBC application for the queries that produce very large results.

- PostgreSQLBy default the driver collects all the results for the query at once. This can be inconvenient for large data sets so the JDBC driver provides a means of basing a ResultSet on a database cursor and only fetching a small number of rows.

- DB2By default the driver collects all the results for the query at once. This can be inconvenient for large data sets so the JDBC driver provides a means of basing a ResultSet on a database cursor and only fetching a small number of rows. The fetchSize property differs from the queryDataSize property. fetchSize affects the number of rows that are returned, and queryDataSize affects the number of bytes that are returned.

For example, if the result set size is 50 KB, and the value of queryDataSize is 32767 (32KB), two trips to the database server are required to retrieve the result set. However, if queryDataSize is set to 65535 (64 KB), only one trip to the data source is required to retrieve the result set.

Hibernate ResultSet traversing options

The Java Persistence Query interface offers only full-result retrievals, through the Query.getResultList() method call.

Hibernate also supports scrollable ResultSet cursors through its specific Query.scroll() API.

The only apparent advantage of scrollable ResultSets is that we can avoid memory issues on the client-side, since data is being fetched on demand. This might sound like a natural choice, but in reality, you shouldn’t fetch large result sets for the following reasons:

- Large result sets impose significant database server resources and because a database is a highly concurrent environment, it might hinder availability and scalability

- Tables tend to grow in size and a moderate result set might easily turn into a very large one. This kind of situation happens in production systems, long after the application code was shipped. Because users can only browse a relatively small portion of the whole result set, pagination is a more scalable data fetching alternative

- The overly common offset paging is not suitable for large result sets (because the response time increases linearly with the page number) and you should consider keyset pagination when traversing large result sets. The keyset pagination offers a constant response time insensitive to the relative position of the page being fetched

- Even for batch processing jobs, it’s always safer to restrict processing items to a moderate batch size. Large batches can lead to memory issues or cause long-running transactions, which increase the undo/redo transaction log size

Testing time

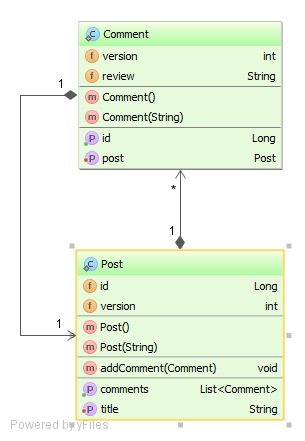

Our domain entity model looks like this:

The following test will be use for validating various result set fetching behaviors:

@Test

public void testFetchSize() {

doInTransaction(session -> {

int batchSize = batchSize();

for(int i = 0; i < itemsCount(); i++) {

Post post = new Post(String.format(

"Post no. %d", i));

int j = 0;

post.addComment(new Comment(

String.format(

"Post comment %d:%d", i, j++)));

post.addComment(new Comment(

String.format(

"Post comment %d:%d", i, j++)));

session.persist(post);

if(i % batchSize == 0 && i > 0) {

session.flush();

session.clear();

}

}

});

long startNanos = System.nanoTime();

LOGGER.info("Test fetch size");

doInTransaction(session -> {

List posts = session.createQuery(

"select p " +

"from Post p " +

"join fetch p.comments ")

.list();

LOGGER.info("{}.fetched {} entities",

getClass().getSimpleName(),

posts.size());

});

LOGGER.info("{}.testFetch took {} millis",

getClass().getSimpleName(),

TimeUnit.NANOSECONDS.toMillis(

System.nanoTime() - startNanos

));

}To configure Hibernate to use an explicit Statement fetchSize, we need to set the following Hibernate property:

properties.put("hibernate.jdbc.fetch_size", fetchSize());Every test will insert 5000 Post entities, each one having 2 Comments.

One commercial database

The first tests are run against a commercial database with the following results:

| Fetch Size | Duration [millis] |

|---|---|

| 1 | 1190 |

| 10 | 640 |

| 100 | 481 |

| 1000 | 459 |

| 10000 | 449 |

| Default (10) | 545 |

The larger the fetch size, the less round trips are required for fetching the whole result set. If the returned rows contain many columns, a larger fetch size would require proportionally larger database buffers.

PostgreSQL

The second test round is run against PostgreSQL 9.4 with the following results:

| Fetch Size | Duration [millis] |

|---|---|

| 1 | 1181 |

| 10 | 572 |

| 100 | 485 |

| 1000 | 458 |

| 10000 | 437 |

| Default (all) | 396 |

The default fetch size yields the best result, even when the fetchSize is equal to the total number of rows being returned. Since there is no upper-bound buffer limit, the default fetch size can cause OutOfMemoryError issues when retrieving large result sets.

Conclusion

While most database serves don’t impose a default upper limit for the result set fetch size, it’s a good practice to limit the whole result set (if requirements allow it). A limited size result set should address the unbounded fetch size shortcoming, while ensuring predictable response times even when the queried data grows gradually. The shorter the queries, the quicker the row-level locks are released and the more scalable the data access layer becomes.

- Code available on GitHub.

| Reference: | SELECT statements batch fetching with JDBC and Hibernate from our JCG partner Vlad Mihalcea at the Vlad Mihalcea’s Blog blog. |