Resizing the HashMap: dangers ahead

I recently stumbled upon a bug caused by improper usage of java.util.HashMap from multiple threads. The bug was an excellent example of the leaking abstractions. Only the knowledge of the implementation-level details of the data structures helped me solve the issue at hand. So I hope that sharing the problem I faced will encourage some of our readers to familiarize themselves with the ways basic data structures are implemented.

The symptoms I faced raised their ugly head on a day where certain analysis processes which normally take just minutes to complete had been running for hours. Being a true believer in our craft I was timely notified by our own monitoring software and started to investigate the cause.

I also had several thread dumps available from the processing threads. They indicated that the code was just processing entries at the hashmap found inside the heap dump, seemingly in an unterminated loop. So it appeared that the data being analyzed was somehow corrupted, containing a circular reference.

To my surprise, this was indeed the case. The HashMap entries inside the analyzed heap content were referencing one another. When designing the heap analysis algorithms we never expected this to be possible. Apparently we were wrong.

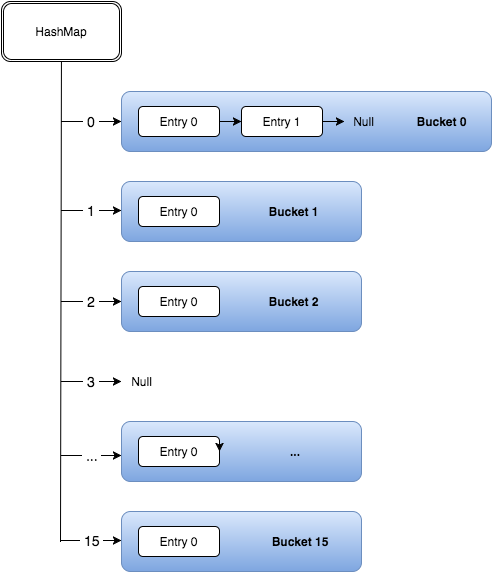

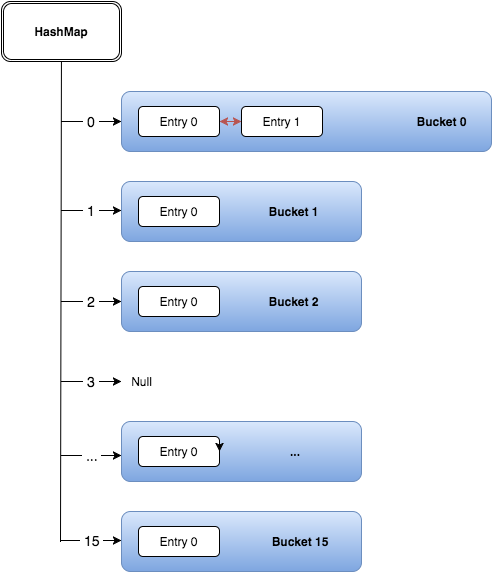

As the HashMap implementation is known not to be threadsafe, I was now suspecting it is somehow related to concurrency issues with HashMap usage. And indeed, there was a problem hidden in the design of the java.util.HashMap. As I am sure you are aware, a HashMap consists of array of buckets with each bucket referring to a linked list of entries. The entries in turn refer to the next entry in list until the last entry refers to null:

What our analyser got stuck with was the situation where two entries referred to one another forming a closed cycle.

With the help of Google I discovered how one can end up creating such circular references an issue in a multithreaded environment. As you again are likely aware, the HashMaps are resized dynamically during runtime, based on the number of entries in the map. By default, the HashMaps uses a load factor of 75%. This means that whenever the number of entries in the map exceeds 75% of the available capacity, the map size is increased to avoid too many collisions on map element entries.

So here I had it. Apparently multiple threads had attempted to resize the map at the same time, creating a loop in some of the buckets. The culprit was eventually hidden in the following lines in the Java HashMap source code:

void transfer(Entry[] newTable, boolean rehash) {

... skipped for brevity ...

Entry next = e.next;

if (rehash) {

e.hash = null == e.key ? 0 : hash(e.key);

}

... skipped for brevity ...

}The solution from our analytics endpoint was now easy. We just had to keep a ledger about the processed entries and not process any of the entries twice was all we needed.

I do believe this serves as a great example about failing abstractions. The HashMaps in Java are well built and tend serve you well, even if you do not understand the implementation details. Until they don’t. In such cases, the in-depth knowledge about the data structure implementation details will make all the difference for you.

| Reference: | Resizing the HashMap: dangers ahead from our JCG partner Nikita Salnikov Tarnovski at the Plumbr Blog blog. |

Can you please clarify where is this leaky abstraction?

You clearly violated documentation as I can understand. Java documentation clearly states: Note that this implementation is not synchronized. If multiple threads access a hash map concurrently, and at least one of the threads modifies the map structurally, it must be synchronized externally

What exactly did you expect?

Java provides java.util.concurrentConcurrentHashMap for thread safety

As others have suggested the HashMap is not thread safe. It means that sharing an instance among multiple threads is undeterministic. I don’t understand how have you fixed the concyrrency problem without synchronization.