Run 2,000 Docker Containers In A Single Weave Cluster Of 30 Rackspace Cloud Servers With 2GB Of Memory Each

This is the second post in a two-part series about the scalability of the DCHQ platform using Weave as the underlying network layer. The first post covered the deployment of 10,000 containers on 10 Weave clusters, each made up of 3 Rackspace Cloud Servers with 4GB of memory and 2 CPUs.

In this blog, we will cover the deployment of 2,000 containers — but instead of using 10 clusters with 3 cloud servers each, we will be using a single Weave cluster with 30 cloud servers. DCHQ not only automates the application deployment and management, but it also automates the infrastructure provisioning & auto-scaling on 13 different clouds & virtualization platforms. Read more about this scalability test in this blog.

Background

While application portability (i.e. being able to run the same application on any Linux host) is still the leading driver for the adoption of Linux Containers, another key advantage is being able to optimize server utilization so that you can use every bit of compute. Of course, for upstream environments, like PROD, you may still want to dedicate more than enough CPU & Memory for your workload – but in DEV/TEST environments, which typically represent the majority of compute resource consumption in an organization, optimizing server utilization can lead to significant cost savings.

This all sounds good on paper — but DevOps engineers and infrastructure operators still struggle with the following questions:

- How can I group servers across different clouds into clusters that map to business groups, development teams, or application projects?

- How do I monitor these clusters and get insight into the resource consumption by different groups or users?

- How do I set up networking across servers in a cluster so that containers across multiple hosts can communicate with each other?

- How do I define my own capacity-based placement policy so that I can use every bit of compute in a cluster?

- How can I automatically scale out the cluster to meet the demands of the developers for new container-based application deployments?

DCHQ, available in hosted and on-premise versions, addresses all of these challenges and provides the most advanced infrastructure provisioning, auto-scaling, clustering and placement policies for infrastructure operators or DevOps engineers.

- A user can register any Linux host running anywhere by running an auto-generated script to install the DCHQ agent, along with Docker and the software-defined networking layer (optional). This task can be automated programmatically using our REST APIs for creating “Docker Servers” (https://dchq.readme.io/docs/dockerservers).

- Alternatively, DCHQ integrates with 13 cloud providers, allowing users to automatically spin up virtual infrastructure on vSphere, OpenStack, CloudStack, Amazon Elastic Compute Cloud (EC2), Google Compute Engine, Rackspace, DigitalOcean, SoftLayer, Microsoft Azure, and many others.

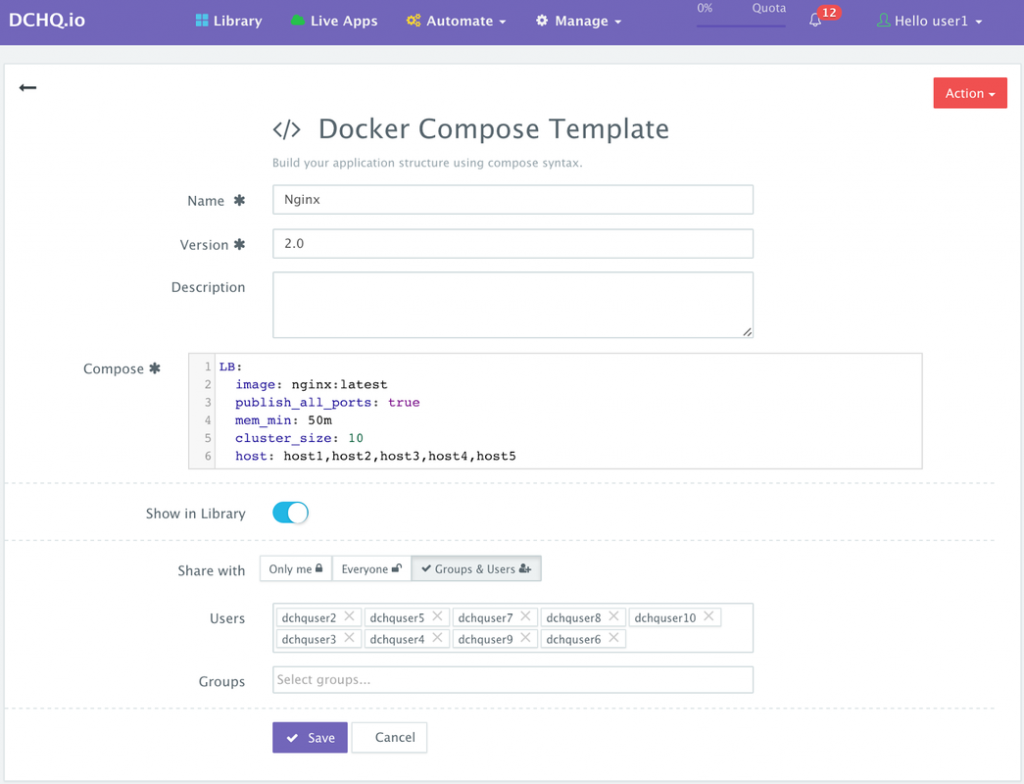

Building The Application Template For The Nginx Cluster

Once logged in to DCHQ (either the hosted DCHQ.io or on-premise version), a user can navigate to Manage > App/Machines and then click on the + button to create a new Docker Compose template.

We have created a simple Nginx Cluster for the sake of this scalability test. You will notice that the cluster_size parameter allows you to specify the number of containers to launch (with the same application dependencies).

The mem_min parameter allows you to specify the minimum amount of memory you would like to allocate to the container.

The host parameter allows you to specify the host you would like to use for container deployments. That way you can ensure high-availability for your application server clusters across different hosts (or regions) and you can comply with affinity rules to ensure that the database runs on a separate host for example. Here are the values supported for the host parameter:

- host1, host2, host3, etc. – selects a host randomly within a data-center (or cluster) for container deployments

- <IP Address 1, IP Address 2, etc.> — allows a user to specify the actual IP addresses to use for container deployments

- <Hostname 1, Hostname 2, etc.> — allows a user to specify the actual hostnames to use for container deployments

- Wildcards (e.g. “db-*”, or “app-srv-*”) – to specify the wildcards to use within a hostname
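A template that uses the three parameters described above might look roughly like the following. This is a sketch rather than the template used in the test: the top-level `Nginx` key, the `nginx:latest` image, and the `50m` value for mem_min are assumptions chosen for illustration. Only the parameter names and the cluster size of 10 come from this post. The shell function simply prints the YAML so it can be pasted into the template editor.

```shell
# Print a hypothetical Nginx cluster template.
# Arguments (all optional): cluster size, minimum memory, host selector.
# The service name, image tag and the "50m" default are illustrative
# assumptions, not values confirmed by this post.
nginx_template() {
    cluster_size="${1:-10}"
    mem_min="${2:-50m}"
    host="${3:-host1}"
    cat <<EOF
Nginx:
  image: nginx:latest
  host: ${host}
  mem_min: ${mem_min}
  cluster_size: ${cluster_size}
EOF
}

# Default values: 10 containers, placed via the "host1" selector.
nginx_template
```

Calling `nginx_template 10 50m "app-srv-*"` would print the same template with a wildcard host selector instead.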

Provisioning The Underlying Infrastructure On Any Cloud

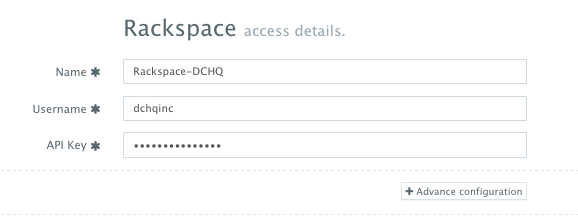

Once an application is saved, a user can register a Cloud Provider to automate the provisioning and auto-scaling of clusters on 13 different cloud end-points including vSphere, OpenStack, CloudStack, Amazon Web Services, Rackspace, Microsoft Azure, DigitalOcean, HP Public Cloud, IBM SoftLayer, Google Compute Engine, and many others.

First, a user can register a Cloud Provider for Rackspace (for example) by navigating to Manage > Repo & Cloud Provider and then clicking on the + button to select Rackspace. The Rackspace API Key needs to be provided – which can be retrieved from the Account Settings section.

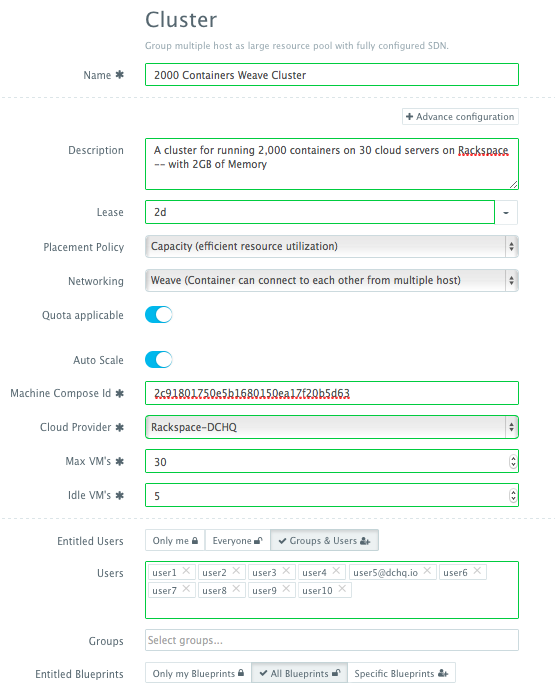

A user can then create a cluster with an auto-scale policy to automatically spin up new Cloud Servers. This can be done by navigating to the Manage > Clusters page and then clicking on the + button. You can select a capacity-based placement policy and then Weave as the networking layer in order to facilitate secure, password-protected cross-container communication across multiple hosts within a cluster. For this test, we also defined an auto-scale policy that automatically spins up cloud servers in this cluster up to a defined maximum (here, 30 cloud servers). Granular entitlements are available to make sure that this cluster can only be used by the 10 users taking part in this test (user1@dchq.io, user2@dchq.io, …, user10@dchq.io). Lastly, entitlements can be defined at the blueprint (or application template) level to ensure that only the entitled applications can be deployed to this shared cluster. In this case, the cluster was open to “All Blueprints”.

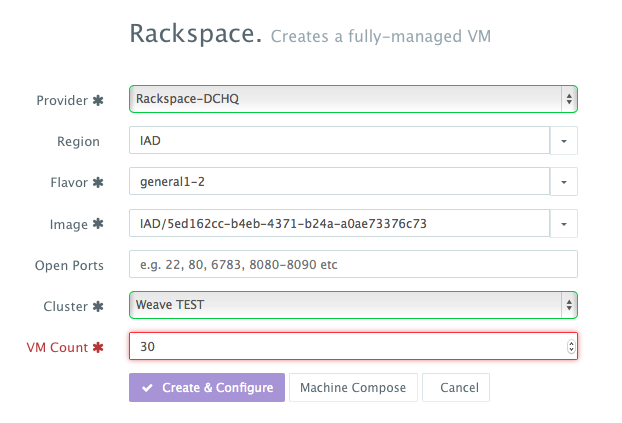

A user can now provision a number of Cloud Servers on the newly created cluster either through the UI-based workflow or by defining a simple YAML-based Machine Compose template that can be requested from the Self-Service Library.

UI-based Workflow – A user can request Rackspace Cloud Servers by navigating to Manage > Hosts and then clicking on the + button to select Rackspace. Once the Cloud Provider is selected, a user can select the region, size and image needed. Ports are opened by default on Rackspace Cloud Servers to accommodate some of the port requirements (e.g. 32000-59000 for Docker, 6783 for Weave, and 5672 for RabbitMQ). A Cluster is then selected and the number of Cloud Servers can be specified.

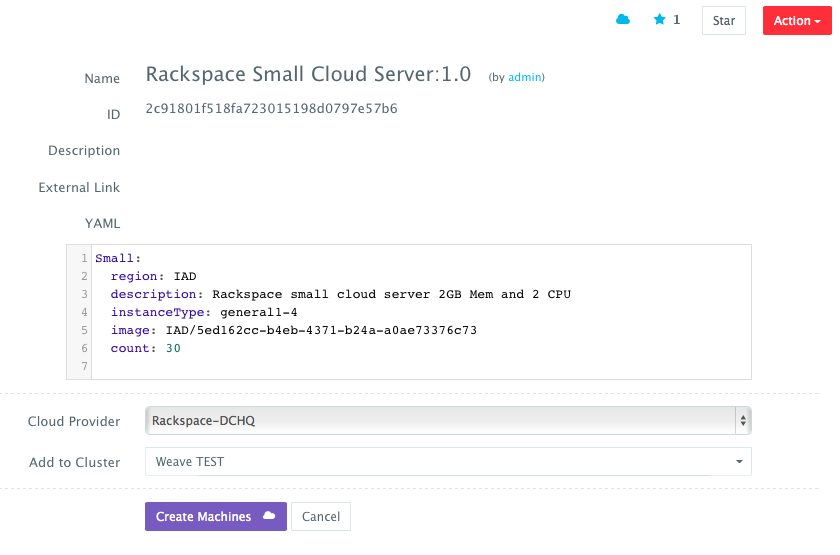

YAML-based Machine Compose Template – A user can first create a Machine Compose template for Rackspace by navigating to Manage > Templates and then selecting Machine Compose.

The supported parameters for the Machine Compose template are summarized below:

- description: Description of the blueprint/template

- instanceType: Cloud provider specific value (e.g. general1-4)

- region: Cloud provider specific value (e.g. IAD)

- image: Mandatory – fully qualified image ID/name (e.g. IAD/5ed162cc-b4eb-4371-b24a-a0ae73376c73 or vSphere VM Template name)

- username: Optional – only for vSphere VM Template username

- password: Optional – only for vSphere VM Template encrypted password. You can encrypt the password using the endpoint https://www.dchq.io/#/encrypt

- network: Optional – Cloud provider specific value (e.g. default)

- securityGroup: Cloud provider specific value (e.g. dchq-security-group)

- keyPair: Cloud provider specific value (e.g. private key)

- openPorts: Optional – comma separated port values

- count: Total number of VMs; defaults to 1.
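Putting a few of these parameters together, a Machine Compose template for Rackspace could be sketched as follows. The values for region, instanceType and image are the examples given in the list above, not necessarily the ones used in this test, and the top-level key `Rackspace-Server` and the description text are hypothetical names introduced for illustration. The function prints the YAML with the server count as an argument.

```shell
# Print a hypothetical Machine Compose template for Rackspace.
# Argument (optional): number of Cloud Servers to request (defaults to 1).
# "Rackspace-Server" and the description are made-up labels; region,
# instanceType and image reuse the example values from the parameter list.
machine_template() {
    count="${1:-1}"
    cat <<EOF
Rackspace-Server:
  description: Rackspace Cloud Server for the Weave cluster
  region: IAD
  instanceType: general1-4
  image: IAD/5ed162cc-b4eb-4371-b24a-a0ae73376c73
  count: ${count}
EOF
}

# Request 30 servers, matching the cluster size in this test.
machine_template 30
```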

Once the Machine Compose template is saved, a user can request this machine from the Self-Service Library. A user can click Customize and then select the Cloud Provider and Cluster to use for provisioning these Rackspace Cloud Servers.

Deploying The Nginx Cluster Programmatically Using DCHQ’s REST APIs

Once the Cloud Servers are provisioned, a user can deploy the Nginx cluster programmatically using DCHQ’s REST APIs. To simplify the use of the APIs, a user will need to select the cluster created earlier as the default cluster. This can be done by navigating to User’s Name > My Profile, and then selecting the default cluster needed.

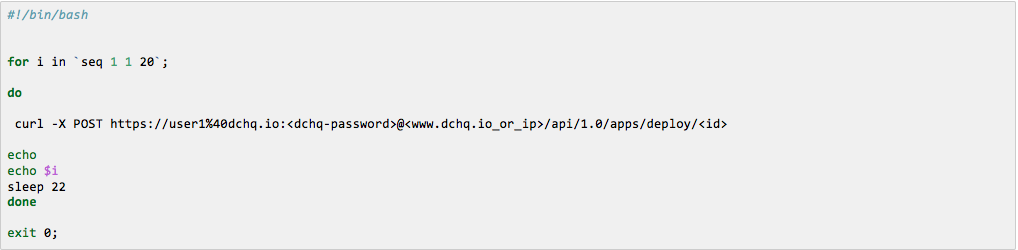

Once the default cluster is selected, a user can run a simple curl script that invokes the “deploy” API (https://dchq.readme.io/docs/deployid).

In this simple curl script, we have the following:

- A for loop, from 1 to 20

- With each iteration we’re deploying the clustered Nginx application using the default cluster assigned to user1@dchq.io

- user1%40dchq.io is used for user1@dchq.io: the @ symbol in the username is percent-encoded as %40

- The @ between the password and the host is left unencoded, since it separates the credentials from the host in the URL

- <id> refers to the Nginx cluster application ID. This can be retrieved by navigating to the Library > Customize for the Nginx cluster. The ID should be in the URL

- sleep 22 is used between each iteration

- After running this script, 200 Nginx containers would have been deployed by user1@dchq.io — 20 iterations deploying an Nginx cluster of 10 containers each.
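The loop described above can be sketched as follows. Treat it as an approximation under stated assumptions: the host `www.dchq.io`, the `/api/1.0/apps/deploy` path and the use of POST are assumptions that should be checked against the deploy API documentation, and `password` and `<id>` are placeholders. The script defaults to a dry run that only prints the curl commands it would issue.

```shell
# Sketch of the deploy loop. DCHQ_HOST, DEPLOY_PATH and the POST method
# are assumptions; verify them against the deploy API documentation.
DCHQ_HOST="${DCHQ_HOST:-www.dchq.io}"
DEPLOY_PATH="${DEPLOY_PATH:-/api/1.0/apps/deploy}"
APP_ID="${APP_ID:-<id>}"      # placeholder for the Nginx cluster application ID
DRY_RUN="${DRY_RUN:-1}"       # 1 = print commands only, 0 = send requests

# Percent-encode the "@" in the username so it can appear before the host.
encode_user() {
    printf '%s' "$1" | sed 's/@/%40/g'
}

# Build https://user:password@host/path/id; the "@" before the host stays literal.
deploy_url() {
    printf 'https://%s:%s@%s%s/%s' \
        "$(encode_user "$1")" "$2" "$DCHQ_HOST" "$DEPLOY_PATH" "$APP_ID"
}

# deploy_loop USER PASSWORD [ITERATIONS] [PAUSE_SECONDS]
deploy_loop() {
    user="$1"; password="$2"; iterations="${3:-20}"; pause="${4:-22}"
    i=1
    while [ "$i" -le "$iterations" ]; do
        url="$(deploy_url "$user" "$password")"
        if [ "$DRY_RUN" = "1" ]; then
            echo "curl -s -X POST $url"
        else
            curl -s -X POST "$url"
            sleep "$pause"
        fi
        i=$((i + 1))
    done
}

# 20 iterations for user1@dchq.io, 22 seconds apart when not in dry-run mode.
deploy_loop "user1@dchq.io" "password"
```

Running with `DRY_RUN=0` would send the requests. A password containing characters that are special in URLs would need percent-encoding as well, which this sketch does not handle.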

We then repeated the same process for the other users (user2@dchq.io, user3@dchq.io, …, user10@dchq.io) — all deploying to the same shared cluster.

You can try out this curl script yourself. You can either install DCHQ On-Premise (http://dchq.co/dchq-on-premise.html) or sign up on DCHQ.io Hosted PaaS (http://dchq.io).

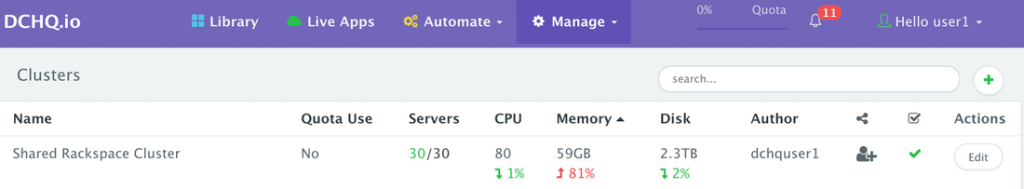

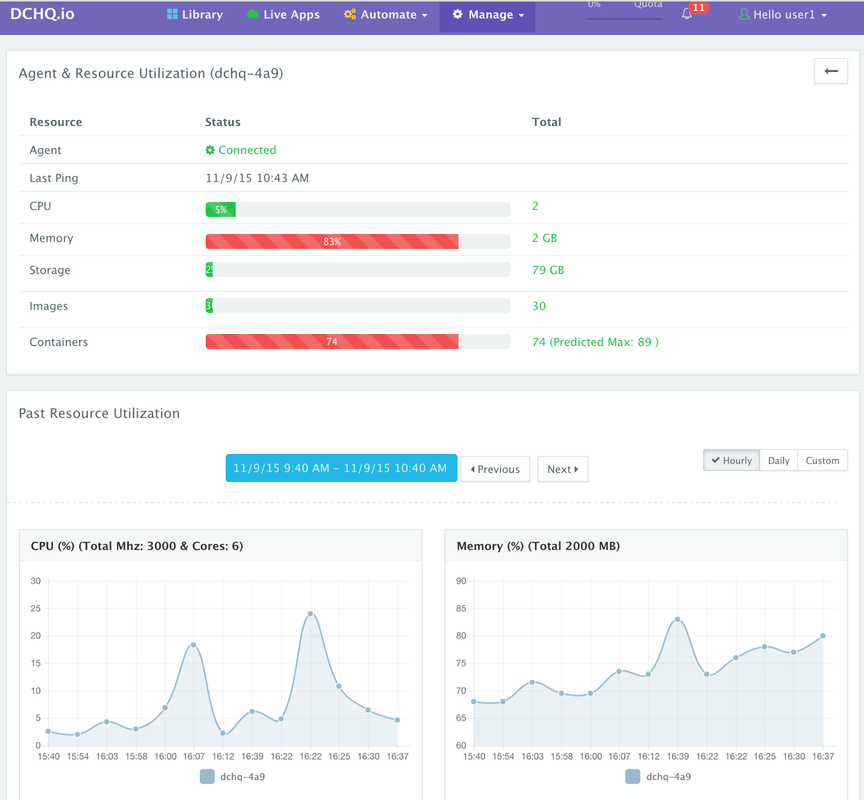

Monitoring The CPU, Memory & I/O Utilization Of The Cluster, Servers & Running Containers

DCHQ allows users to monitor the CPU, Memory, Disk and I/O of the clusters, hosts and containers.

- To monitor clusters, you can just navigate to Manage > Clusters

- To monitor hosts, you can just navigate to Manage > Hosts > Monitoring Icon

- To monitor containers, you can just navigate to Live Apps > Monitoring Icon

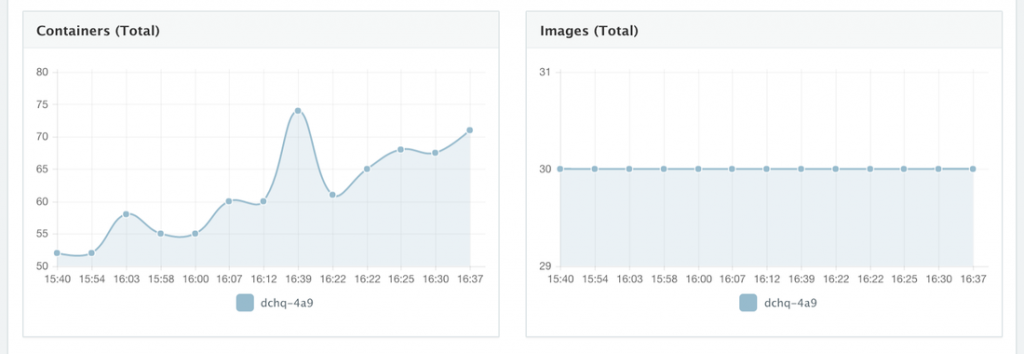

We tracked the performance of the hosts and cluster before and after we launched the 2,000 containers.

After spinning up 2,000 containers, we captured screenshots of the performance charts for the cluster. You can see that the aggregated Memory utilization across the 30 cloud servers in the cluster was at 81%.

You can see that the highest Memory utilization across the 30 cloud servers in the cluster was at 84%.

We then drilled down into one of the 30 hosts in the cluster to see more details, such as the number of containers running on that particular host, the number of images pulled and, of course, the CPU/Memory/Disk utilization. In this case, the memory utilization of that particular host was 83%.
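As a rough sanity check on these figures, the numbers reported in this post can be combined with a little integer arithmetic. The sketch below assumes that "2GB" means 2048 MB per server and that the 81% figure covers everything running on the hosts (operating system, Docker, Weave and the DCHQ agent as well as the containers), so the per-container value is only an upper bound, not a measurement.

```shell
# Figures taken from this post.
servers=30
containers=2000
cluster_util_pct=81
users=10
iterations=20
cluster_size=10

# Assumption: "2GB" per server is 2048 MB.
mem_per_server_mb=2048

total_containers=$((users * iterations * cluster_size))   # deployments add up to 2000
total_mb=$((servers * mem_per_server_mb))                  # memory in the whole cluster
used_mb=$((total_mb * cluster_util_pct / 100))             # memory in use at 81%
per_container_mb=$((used_mb / containers))                 # upper bound per container
per_host=$((containers / servers))                         # containers per host (rounded down)
remainder=$((containers % servers))                        # hosts carrying one extra container

echo "containers deployed:   ${total_containers}"
echo "cluster memory:        ${total_mb} MB"
echo "memory in use:         ${used_mb} MB"
echo "per container (max):   ${per_container_mb} MB"
echo "containers per host:   ${per_host} (+1 on ${remainder} hosts if spread evenly)"
```

With these inputs the cluster holds 61,440 MB in total, about 49,766 MB of which is in use, which works out to at most roughly 24 MB per container and 66 to 67 containers per host.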

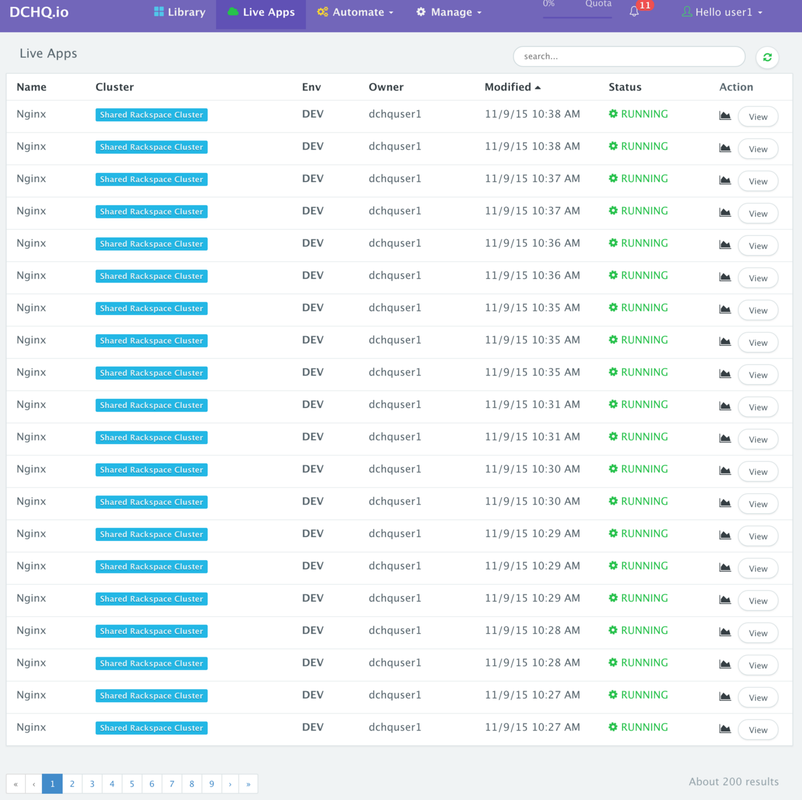

Here’s a view of all 200 running Nginx clusters (where each cluster had 10 containers).

Conclusion

Orchestrating Docker-based application deployments is still a challenge for many DevOps engineers and infrastructure operators as they often struggle to manage pools of servers across multiple development teams where access controls, monitoring, networking, capacity-based placement, auto-scale out policies and quota are key aspects that need to be configured.

DCHQ, available in hosted and on-premise versions, addresses all of these challenges and provides the most advanced infrastructure provisioning, auto-scaling, clustering and placement policies for infrastructure operators or DevOps engineers.

In addition to the advanced infrastructure provisioning & clustering capabilities, DCHQ simplifies the containerization of enterprise applications through an advanced application composition framework that extends Docker Compose with cross-image environment variable bindings, extensible BASH script plug-ins that can be invoked at request time or post-provision, and application clustering for high availability across multiple hosts or regions with support for auto scaling.

- Sign Up for FREE on http://DCHQ.io or download DCHQ On-Premise to get access to out-of-box multi-tier Java application templates along with application lifecycle management functionality like monitoring, container updates, scale in/out and continuous delivery.

| Reference: | Run 2,000 Docker Containers In A Single Weave Cluster Of 30 Rackspace Cloud Servers With 2GB Of Memory Each from our JCG partner Amjad Afanah at the DCHQ.io blog. |