Emotion-Based Computing: What It Is and How You Can Benefit

Emotions should not be discarded as a distraction. Understanding a pattern in a user’s emotion is important in order for an intelligent device or system to respond appropriately. A system can exhibit “artificial emotion” to engage with the user. Interactive intelligent systems may be more acceptable in human society if emotions are involved in their relationship with humans.

Emotions in this context can be defined as a state of mind resulting from circumstances or the mood of an individual. Emotions are natural and instinctive. In this blog post, I will discuss the process of both capturing emotions and understanding their pattern. This emotional data can be analyzed in order to discover customer insights, offer recommendations, or respond to a customer.

Identify Emotions

Emotion is fundamental to human experience. Consider these four basic emotions:

- Happiness

- Anger

- Sadness

- Surprise

There is an emerging field called affective computing, which aims to bridge the gap between human emotions and computational technology. It is the study and development of systems and devices that interpret, process, and simulate human affect. For example, facial expression, posture, gesture, speech, or the temperature change of a user’s hand on a mouse can signify changes in a user’s emotional state. To understand the importance of emotions, let’s look at three case studies:

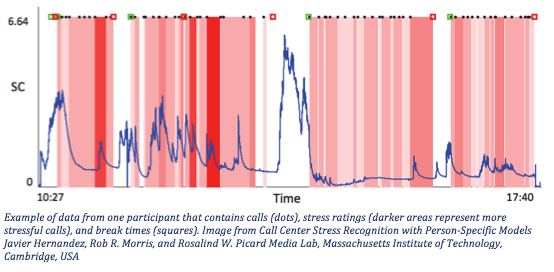

Case Study 1: MIT’s Automatic Stress Recognition in Call Center Employees

In this study, researchers used sensors to measure stress levels in call center employees during good and bad calls. By employing machine learning techniques, they were able to automatically identify stress levels in calls. This data can be used to help prevent chronic psychological stress.

- Scenario: 9 call center employees, 1500 calls

- Results: Achieved a 78.03% accuracy rate across participants when trained and tested on different days from the same person

Case Study 2: Shopmobia: An Emotion-Based Shop Rating System

In this study, researchers wanted to demonstrate the possibility of using affective computing to analyze consumer behavior towards shopping mall stores. They measured customer satisfaction in a shopping environment with a wearable biosensor that recorded the electrodermal activity (EDA) of the shopper. By triggering positive emotions through enhanced services and ad campaigns, stores can give consumers a more positive shopping experience. This study is from the ACII ’13 Proceedings of the 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction, pages 745-750. Authors of the study were Nouf Alajmi, Eiman Kanjo, Nour El Mawass, and Alan Chamberlain.

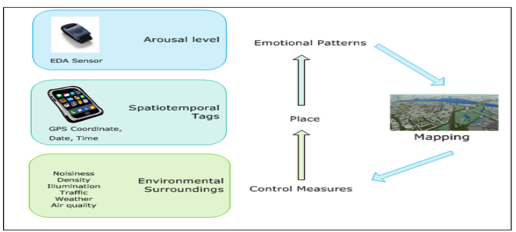

Case Study 3: Sense of Space: Mapping Physiological Emotion Response in Urban Space

In this study, researchers measured people’s emotional reactions to different locations by monitoring physiological signals related to emotion. By integrating wearable biosensors with mobile phones, they obtained geo-annotated data linking emotional states to spatial surroundings. They visualized the emotional response data by creating an emotional layer over a geographical map. This helped the researchers understand how people emotionally perceive urban spaces and illustrate the interdependency between emotions and environmental surroundings.

Image from UbiComp 2013 Adjunct Proceedings of the 2013 ACM Conference on Pervasive and Ubiquitous Computing adjunct publication, pages 1321-1324. Luluah Al-Husain, King Saud University, Riyadh, Saudi Arabia; Eiman Kanjo, King Saud University, Riyadh, Saudi Arabia; Alan Chamberlain, University of Nottingham, Nottingham, United Kingdom.

What can we infer from these case studies?

- Factors such as stress can have a huge influence on emotion

- Data from emotions can help improve customer experience

- There is an interdependency between emotion and environment

Can a computing system exhibit emotion?

Yes.

Artificial Emotions and Interactive Intelligent Agents

Emotions can be used to engage a human and a system/robot in a meaningful dialogue. Some of the research in the past revolved around finding answers to the following questions:

- Is a human happy interacting with a robot?

- How much time was a robot in a happy state, and what made the robot change to a sad state?

- How long did a particular interaction go well, and after how much elapsed time did the interaction stop being synchronous?

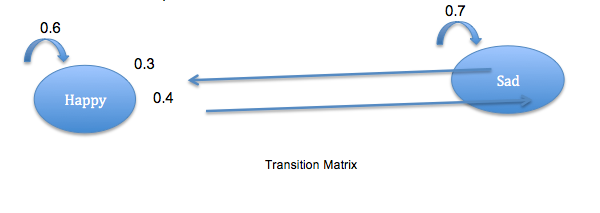

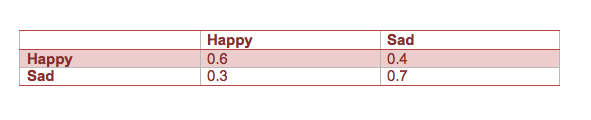

How to Model Emotions

- Conceptualize social interactions as a sequence of states

- In 1957, T. Leary came up with a system for coding interpersonal behavior based on two bipolar dimensions: friendliness versus hostility, and dominance versus submission. He used a Markov chain to accomplish this. Markov chains have many applications as statistical models of real-world processes.

We Can Model Emotions Using the Markov Chain:

- Sequences can be modeled using Markov chains

- A Markov chain is a random process that transitions from one state to another

- The next state only depends on the current state, not on the sequence of events that preceded it
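
As a rough sketch of the points above, the following Python snippet estimates transition probabilities from an observed sequence of emotional states and then simulates a new sequence in which each step depends only on the current state. The state names reuse the four basic emotions listed earlier. The observed sequence and the function names are made up for illustration and do not come from any of the studies cited.

```python
import random
from collections import Counter, defaultdict

STATES = ["happy", "angry", "sad", "surprised"]


def estimate_transitions(sequence):
    """Estimate P(next state | current state) by counting consecutive pairs."""
    counts = defaultdict(Counter)
    for current, nxt in zip(sequence, sequence[1:]):
        counts[current][nxt] += 1
    matrix = {}
    for current, next_counts in counts.items():
        total = sum(next_counts.values())
        matrix[current] = {s: next_counts[s] / total for s in STATES}
    return matrix


def most_likely_next(matrix, current):
    """Return the state with the highest transition probability from `current`."""
    row = matrix[current]
    return max(row, key=row.get)


def simulate(matrix, start, steps, seed=0):
    """Walk the chain: each step is drawn using only the current state's row.

    Assumes every state that can be reached has a row in `matrix`.
    """
    rng = random.Random(seed)
    state = start
    path = [state]
    for _ in range(steps):
        row = matrix[state]
        state = rng.choices(list(row), weights=list(row.values()))[0]
        path.append(state)
    return path


# Hypothetical observations, e.g. one label per minute of an interaction.
observed = ["happy", "happy", "surprised", "happy", "sad",
            "sad", "angry", "sad", "happy", "happy"]

transitions = estimate_transitions(observed)
print(transitions["happy"])
print(most_likely_next(transitions, "angry"))
print(simulate(transitions, "happy", steps=5))
```

With a matrix like this, questions such as "what usually follows an angry state?" become simple lookups, which is the appeal of the Markov assumption. A real system would need far more data and some way of labeling states reliably.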

Chocolate Theory

What can you infer from these statements?

Statement 1: I like chocolate; I buy chocolate, but not when I am too happy

Statement 2: I like chocolate; I buy chocolate only when I am happy

By reading these two statements, we can infer that there are things we like, but whether we want them depends on the circumstances. For example, I like to watch comedy shows. Sometimes my app will recommend that I watch a comedy show, but it doesn’t consider that, depending on my mood at the time, I may not want to watch one.
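
One way to make this concrete is to keep a separate preference score for each mood instead of a single "likes comedy" score. Below is a minimal sketch, assuming a hypothetical log of (mood, genre, watched) records. The data, the threshold, and the function names are invented for illustration and do not describe how any particular app works.

```python
from collections import defaultdict


def acceptance_by_mood(log):
    """Return {(mood, genre): fraction of recommendations that were watched}."""
    shown = defaultdict(int)
    accepted = defaultdict(int)
    for mood, genre, watched in log:
        shown[(mood, genre)] += 1
        if watched:
            accepted[(mood, genre)] += 1
    return {key: accepted[key] / shown[key] for key in shown}


def recommend(rates, mood, genres, threshold=0.5):
    """Keep only genres whose acceptance rate in this mood meets the threshold."""
    return [g for g in genres if rates.get((mood, g), 0.0) >= threshold]


# Hypothetical viewing history: the same user reacts differently by mood.
log = [
    ("happy", "comedy", True),
    ("happy", "comedy", True),
    ("happy", "drama", False),
    ("sad", "comedy", False),
    ("sad", "comedy", False),
    ("sad", "comedy", True),
    ("sad", "drama", True),
]

rates = acceptance_by_mood(log)
print(recommend(rates, "happy", ["comedy", "drama"]))
print(recommend(rates, "sad", ["comedy", "drama"]))
```

The same user "likes comedy" overall, yet the recommendation changes once mood is part of the key.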

Key Takeaways: Emotions in Big Data Science

We are now able to capture a large amount of emotional data, and this data will continue to grow exponentially. By collecting millions of emotion data points, researchers can improve a machine’s ability to read human emotions, resulting in new insights, improved customer satisfaction, and more precise predictive analytics.

Reference: Emotion-Based Computing: What It Is and How You Can Benefit, from our JCG partner Anwar Adil at the MapR blog.