Don’t Like Throttling?

You don’t have a choice: the underlying system (the JVM here) will do it for you.

I still recall the summer of 2013, when I was running a project and a single URL in my whole application brought the servers down. The problem was simple: a bot decided to index our site at a very high rate, and it was generating millions of URL combinations that bypassed my entire caching layer and hit my application servers directly. We normally had a very high cache hit rate (95% or so), and the application server layer was not designed for high load (it was Adobe AEM 5.6, and the logic to run searches and build pages was computationally heavy). Earlier that year we had wanted to handle the Dog-Pile effect and had talked about putting some sort of throttling in place. At the start of that conversation everyone frowned on the idea of throttling (except 2 people).

In the fall of 2012, Ravi Pal had suggested putting error handling in place so that a system would not just fall on its head but degrade gracefully. I only realized the gravity of his suggestion when we hit this problem in 2013.

Now I am working on yet another platform, and the minute I bring up the idea of throttling, it is frowned upon again. One guy actually laughed at me in a meeting. Another person suggested that we handle the scenario with “Auto-scale” instead of throttling. Our infrastructure is on the AWS Cloud, and I am not an expert, but the experts tell me a server can be replicated as-is in around 10 minutes (we will be benchmarking this very soon).

I was an ambitious architect who thought I controlled the traffic coming to my site. I no longer live under that illusion.

This may become a series of posts, but today I start by showing that you do not have a choice: whether you like it or not, the system will throttle your traffic for you.

The Benchmark Overview

- A simple Web application built using Spring Boot

- A Spring MVC REST controller that accepts HTTP requests and sends back an OK response after an induced delay

- JMeter to simulate load

- A custom plugin (a big shoutout to these guys for the plugin) to generate stepped load and capture custom enhanced graphs

- Tomcat 8.x to host the web site – launched in memory using Spring Boot. No customizations done
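The post does not include the controller source, so here is a minimal sketch of what such an endpoint does. It is a plain-JDK stand-in rather than the Spring MVC controller used in the benchmark: the class name `DelayedEndpoint`, the `/ping` path, port 8080 and the 1 second delay are all assumptions made for illustration. The pool of 200 workers mirrors Tomcat's documented default for `maxThreads`; the post only says that no customizations were done.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.Executors;

// Hypothetical stand-in for the benchmark endpoint (not the author's code).
final class DelayedEndpoint {

    // Handler logic: hold the worker thread for the induced delay, then answer "OK".
    static String respondAfterDelay(long delayMillis) throws InterruptedException {
        Thread.sleep(delayMillis);
        return "OK";
    }

    // Starts an HTTP server on the given port with a fixed pool of worker threads.
    static HttpServer start(int port, int workerThreads, long delayMillis) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("localhost", port), 0);
        server.createContext("/ping", exchange -> {
            try {
                byte[] body = respondAfterDelay(delayMillis).getBytes(StandardCharsets.UTF_8);
                exchange.sendResponseHeaders(200, body.length);
                exchange.getResponseBody().write(body);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                exchange.sendResponseHeaders(503, -1); // no response body
            } finally {
                exchange.close();
            }
        });
        // Every request occupies one of these threads for the whole delay.
        server.setExecutor(Executors.newFixedThreadPool(workerThreads));
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        // Assumed values: port 8080, 200 workers, 1 second induced delay.
        HttpServer server = start(8080, 200, 1000);
        System.out.println("Listening on port " + server.getAddress().getPort());
    }
}
```

The important detail is that each in-flight request holds a worker thread for the full delay, which is what makes the pool size the limiting factor in the tests that follow.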

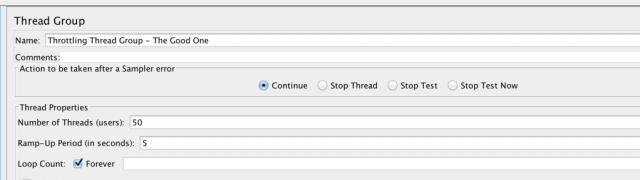

First Group – The Good One

Test Plan

This Thread Group simulates a consistent stream of requests to our application server. This is a typical scenario that happens very often.

Server Performance

As Expected? Yes.

As you see below, the chart shows that the application server is behaving normally. All the requests over a 15 minute period are consistent with a “single user model”, aka a 1 second request response time.

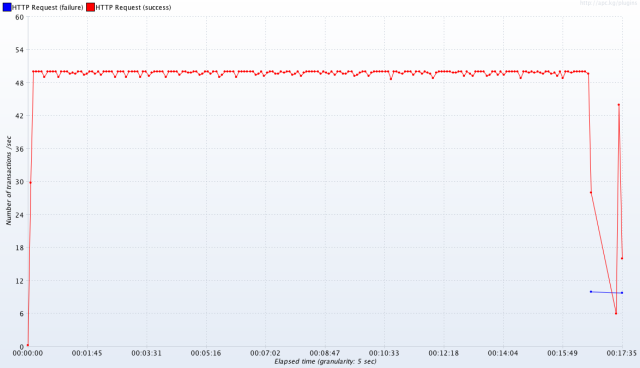

Second Group – The Sudden High Traffic

Test Plan

This test plan takes a stepped approach: it simulates a scenario where a campaign starts hitting a certain page (or set of pages) for a short duration. This is a use case we see often in the industry, where our websites are open for the whole world to hit.

This thread group is not OOTB; I downloaded a plugin for it.

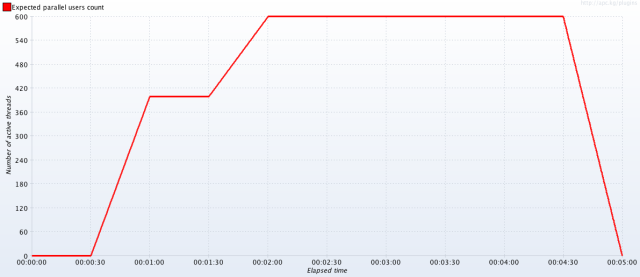

Server Performance

So what do we expect to happen? Depending on how much juice my server has (threads, CPU cycles, etc.), it may or may not be able to handle the requests. Given that I am running everything on my local laptop, it will be interesting to see whether my local box can handle 600 threads.

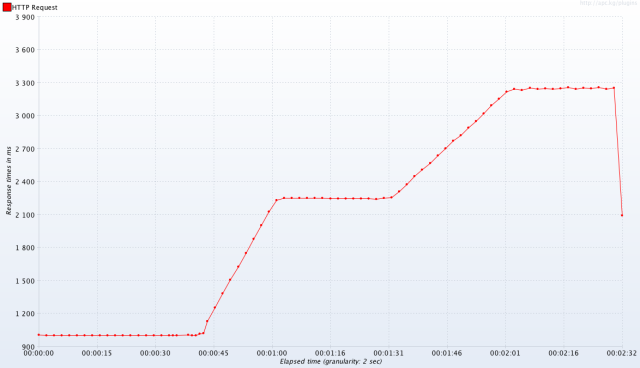

And we see that my laptop can’t really handle 600 threads. So what does Tomcat do?

It Throttles

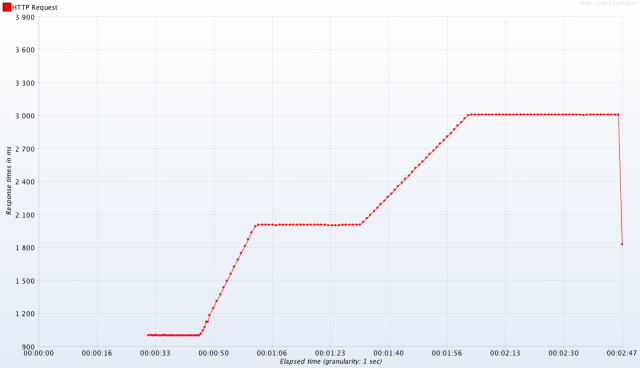
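The effect in the chart can be reproduced in miniature without a web server. The sketch below is not Tomcat's connector code; it only assumes what Tomcat also does, namely that requests are served by a bounded pool of worker threads and that anything beyond that has to wait in a queue. The class name `PoolSaturation` and the numbers are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Illustrative only: a bounded worker pool standing in for the server's request threads.
final class PoolSaturation {

    // Submits `requests` tasks of `workMillis` each to `workers` threads and
    // returns the worst latency seen by any request (queue wait + work), in ms.
    static long worstLatencyMillis(int workers, int requests, long workMillis) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<Future<Long>> results = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                long submitted = System.nanoTime();
                results.add(pool.submit(() -> {
                    Thread.sleep(workMillis); // the "induced delay"
                    return (System.nanoTime() - submitted) / 1_000_000;
                }));
            }
            long worst = 0;
            for (Future<Long> result : results) {
                worst = Math.max(worst, result.get());
            }
            return worst;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        // As many requests as workers: nobody waits in the queue.
        System.out.println("4 requests, 4 workers:  " + worstLatencyMillis(4, 4, 100) + " ms");
        // Four times more requests than workers: the last ones wait several rounds.
        System.out.println("16 requests, 4 workers: " + worstLatencyMillis(4, 16, 100) + " ms");
    }
}
```

Nothing in this code says "throttle", yet the requests at the back of the queue wait several times longer than the work itself takes. That is the throttling you get for free.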

How the Good One’s Behavior Changes

Test Plan

I run the 1st test plan and follow it up with the high traffic plan (introducing a 30 second delay).

Impact

The following image shows how the Good One has been impacted. While the traffic for The Good One has not changed a bit, it has still been impacted because something else introduced a spike.

Please go and tell the JVM that you do not like throttling

So What’s Next

You really have 3 choices (we will look into the details of each in separate posts):

- Auto-scale the application servers and hope that the new servers are ready in time to handle the load; or

- Do something about throttling and control your destiny: what if the high traffic is not a revenue-generating source and the Good One is?

- Continue to frown upon Throttling
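To make the second choice a little more concrete, here is one possible shape of deliberate throttling: cap the number of requests a given kind of traffic may have in flight and reject the rest immediately, so it cannot eat all the worker threads. This is only a sketch under my own assumptions, not the approach the follow-up posts will take; the class name `ConcurrencyThrottle` and the choice of a 429 response are hypothetical.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

// Hypothetical concurrency limiter: at most `maxConcurrent` requests run at once.
final class ConcurrencyThrottle {

    static final String REJECTED = "429 Too Many Requests";

    private final Semaphore permits;

    ConcurrencyThrottle(int maxConcurrent) {
        this.permits = new Semaphore(maxConcurrent);
    }

    // Runs the request if a permit is free; otherwise rejects it without waiting.
    String handle(Supplier<String> request) {
        if (!permits.tryAcquire()) {
            return REJECTED;
        }
        try {
            return request.get();
        } finally {
            permits.release();
        }
    }

    public static void main(String[] args) {
        ConcurrencyThrottle throttle = new ConcurrencyThrottle(1);
        // The outer request holds the only permit, so the nested one is rejected.
        System.out.println(throttle.handle(() -> throttle.handle(() -> "200 OK")));
        // The permit has been released, so this request is served.
        System.out.println(throttle.handle(() -> "200 OK"));
    }
}
```

Rejecting fast instead of queueing is the design choice here: the spike gets a cheap error response, and the threads it would otherwise have occupied stay available for the Good One.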

| Reference: | Don’t Like Throttling? from our JCG partner Kapil Viren Ahuja at the Scratch Pad blog. |