Creating a Cross-platform Docker Development Environment

How many times have you read this statement:

“The great thing about Docker is that your developers run the exact same container as what runs in production.”

Docker is all the hype these days, and with statements like that, many are wondering how they can get on board and take advantage of whatever it is that makes Docker so popular.

That was us just six months ago when we started playing with Docker and trying to fit it into our processes. After just a few months we knew we liked it and wanted to run apps this way, but we were struggling with some of the Docker development workflow.

As the manager of a development team, I like using the same processes and technologies through the whole lifecycle of our applications. When we were running apps on AWS OpsWorks using Chef to provision servers and deploy applications, we used Vagrant with Chef to run the same recipes locally to build our development environment.

Challenges with a Docker development environment

It didn’t take us long, once we were developing with Docker, to realize that this common claim isn’t as easy to achieve as it sounds.

This article highlights the top six challenges we faced when trying to create a consistent Docker development environment across Windows, Mac, and Linux:

- Running Docker on three different platforms

- Docker Compose issues on Windows

- Running a minimal OS in Vagrant (boot2docker doesn’t support guest additions)

- Write access to volumes on Mac and Linux

- Running multiple containers on the same host port

- Downloading multiple copies of Docker images

Running Docker on multiple operating systems

Docker requires Linux. If everyone runs Linux this really isn’t an issue, but when a team uses multiple OSes, it creates a significant difference in process and technology. The developers on our team happen to use Windows, Mac, and Linux, so we needed a solution that would work consistently across these three platforms.


Docker provides a solution for running on Windows and Mac called boot2docker. boot2docker is a minimal Linux virtual machine with just enough installed to run Docker. It also provides shell initialization scripts that let you use the Docker command line tools from the host OS (Windows or Mac) by pointing them at the Docker host process running inside the boot2docker VM. Combined with VirtualBox, this provides an easy way to get Docker up and running on Windows or Mac.

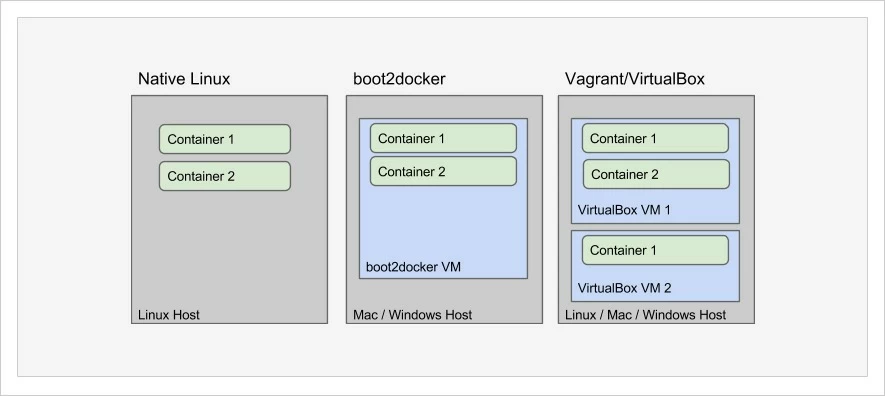

While boot2docker works well for simple use cases, it makes certain conditions difficult to work with. I’ll get into those in the following challenges. This topic can be hard to understand at first, so here’s a simple illustration of the three main options for running Docker locally:

Using Docker Compose on Windows

Docker Compose is a fantastic tool for orchestrating multiple container environments and in many ways actually makes Docker usable for development. If one had to run all the normal Docker CLI commands and flags to spin up their environments and link them properly, it would be more work than many of us are willing to do.

Compose is still relatively new though, like Docker itself, and as a result it does not yet work well on Windows. There are so many issues on Windows, in fact, that there is an epic on the project just to deal with them: https://github.com/docker/compose/issues/1085. The good news is that Docker Toolbox claims Compose support for Windows is coming soon.

(re)Enter Vagrant

I mentioned earlier that boot2docker works well for creating a Linux VM to run Docker in, but that it does not work well under all conditions.

Vagrant has been a fantastic tool for development teams for the past few years, and when I started working with Docker I was even a little sad to be moving away from it. After a couple months of struggling to get everything working with boot2docker though, we brought Vagrant back into the equation.

We liked how small boot2docker was, since we didn’t need a full-featured Docker host, but unfortunately it doesn’t support the VirtualBox guest additions required for synced folders. Thankfully, we found the Vagrant box AlbanMontaigu/boot2docker, a version of boot2docker with guest additions installed that weighs in at a light 28M. Compare that with a minimal Ubuntu 14.04 box at 363M.

Write access on volumes

Docker can mount the host filesystem into containers as volumes. This is great when the container only needs to read the files, but if the container needs to write changes to the files there can be a problem.

With VirtualBox on Windows, synced folders are world-writable with Linux permissions of 777, so on Windows write access is not an issue. On Linux and Mac, however, there are file ownership and permissions to work with. For example, when I’m writing code on my Mac, my username is shipley and my uid/gid is 1000. In my container, however, Apache runs as www-data with a uid/gid of 33.

So when I want Apache to generate files that I can access on my host machine to continue development with, Apache is not allowed to write to them because it runs as a different user. My options would be either to change the ownership/permissions of the files on the Mac filesystem, or to change the user and uid/gid Apache runs as in the container to shipley and 1000. However, either approach is pretty sloppy and does not work for team development.

With VirtualBox, you can change the user/group and permissions that synced folders are mounted as, but it’s not really easy or convenient by default. Vagrant provides a very convenient way to do this though. This was one of the biggest motivators for us to go back to Vagrant. With Vagrant, all we need to add to our Vagrantfile is:

config.vm.synced_folder "./application", "/data", mount_options: ["uid=33","gid=33"]

With the extra mount_options, Vagrant mounts the /data folder inside the VM owned by uid/gid 33, which inside an Apache container based on Ubuntu maps to the user/group www-data.

There is one funny thing, though. As I mentioned earlier, the default filesystem permissions on Windows are 777, so write access there isn’t an issue. However, when we used Docker volumes to mount a custom my.cnf file into a MariaDB container, we found that MariaDB doesn’t like it when the configuration file is world-writable. So again, Vagrant helps us out by making it simple to also set file permissions in the mount:

config.vm.synced_folder "./application", "/data", mount_options: ["uid=33","gid=33","dmode=755","fmode=644"]
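To show where those lines sit in a full Vagrantfile, here is a minimal sketch. The helper name synced_folder_mount_options and the variable www_data_id are hypothetical names introduced for illustration, and the defined?(Vagrant) guard is only there so the helper can also be exercised in plain Ruby; our actual Vagrantfile may differ.

```ruby
# Hypothetical helper (not part of Vagrant): builds the mount_options array
# for a VirtualBox synced folder from a uid/gid and optional dir/file modes.
def synced_folder_mount_options(uid:, gid:, dmode: nil, fmode: nil)
  options = ["uid=#{uid}", "gid=#{gid}"]
  options << "dmode=#{dmode}" if dmode # permissions applied to directories
  options << "fmode=#{fmode}" if fmode # permissions applied to files
  options
end

# uid/gid of www-data in an Ubuntu-based Apache container, as described above.
www_data_id = 33

# Only runs when the file is loaded by Vagrant itself.
if defined?(Vagrant)
  Vagrant.configure("2") do |config|
    config.vm.box = "AlbanMontaigu/boot2docker"
    config.vm.synced_folder "./application", "/data",
      mount_options: synced_folder_mount_options(
        uid: www_data_id, gid: www_data_id, dmode: "755", fmode: "644"
      )
  end
end
```

Leaving out dmode and fmode reproduces the first, ownership-only form of the mount.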

Running multiple containers that expose same port

My team primarily develops web applications, so for us each of our projects/applications expose port 80 for HTTP access during development.

While boot2docker for Windows/Mac and native Docker on Linux make getting started quick and easy, you can only have one container bound to a given port on the host. So when we’re developing multiple applications, or multiple components of an application, that expose the same port, it doesn’t work. This isn’t a show stopper, but it is an inconvenience because it requires running apps on non-standard ports, which are awkward to work with and hard to remember.

Running each app in its own VM via Vagrant, however, solves this problem. Of course, it introduces a couple more issues: you now have to access the app via an IP address or map a hostname to it in your hosts file. This really isn’t that bad, though, since you should only have to do it once per app.
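As a rough sketch of the one-VM-per-app idea, the Ruby below gives each app a fixed private IP and prints matching hosts-file lines. The hostnames, IP addresses, and the names app_addresses and hosts_file_lines are all hypothetical, chosen for illustration; the private_network setting is standard Vagrant, but how we wire it up in our own Vagrantfile may differ.

```ruby
# Hypothetical hostname-to-IP assignments, one VM per app.
def app_addresses
  {
    "app1.local" => "192.168.70.11",
    "app2.local" => "192.168.70.12",
  }
end

# Formats the lines a developer would add to their hosts file.
# Raises if two apps were accidentally given the same IP.
def hosts_file_lines(addresses)
  duplicates = addresses.values.group_by { |ip| ip }
                        .select { |_, ips| ips.size > 1 }.keys
  unless duplicates.empty?
    raise ArgumentError, "duplicate IPs: #{duplicates.join(', ')}"
  end
  addresses.map { |hostname, ip| "#{ip}\t#{hostname}" }
end

# In the Vagrantfile of the first app (assumed to be app1.local here),
# pin the VM to its assigned address. Only runs when loaded by Vagrant.
if defined?(Vagrant)
  Vagrant.configure("2") do |config|
    config.vm.network "private_network", ip: app_addresses.fetch("app1.local")
  end
end
```

Calling puts hosts_file_lines(app_addresses) prints one tab-separated "IP hostname" line per app, ready to paste into the hosts file. Each app then answers on port 80 at its own address.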

Another problem this solution introduces is running multiple VMs requires a lot more memory. It also seems a bit counterproductive since Docker is supposed to remove the burden of running full VMs. Anyway, it’s a small price to pay to have multiple apps running at the same time and accessible on the same ports.

Downloading multiple copies of Docker images

The most annoying problem created by this solution, though, is that now that Docker is running in multiple VMs, each one needs to download its own copy of any Docker images it depends on. This just takes more time and bandwidth, and if we developers hate one thing, it’s waiting.

We were able to get creative, though. On bootup of the VM, we check the host machine folder defined by the environment variable DOCKER_IMAGEDIR_PATH for any Docker images, and any that are found are loaded with docker load. Then, after docker-compose up -d has completed, any new images that have been downloaded are copied into the DOCKER_IMAGEDIR_PATH folder.

Bingo: we only need to download each image once now.
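The caching logic can be sketched in Ruby roughly as follows. This is an illustration under assumptions, not our actual provisioning script: the function names, the one-tar-archive-per-image layout, and the file naming scheme are hypothetical. Only docker load -i and docker save -o are real Docker commands, and the sketch just builds the command strings rather than running them.

```ruby
require "shellwords"

# Lists image archives in the cache folder (assumed layout: one .tar per image).
def cached_image_archives(dir)
  return [] if dir.nil? || !Dir.exist?(dir)
  Dir.glob(File.join(dir, "*.tar")).sort
end

# Commands to run at VM bootup: load every cached archive into Docker.
def load_commands(dir)
  cached_image_archives(dir).map do |path|
    "docker load -i #{Shellwords.escape(path)}"
  end
end

# Hypothetical naming scheme: "mariadb:10" becomes "mariadb_10.tar".
def archive_name(image)
  image.gsub(/[^A-Za-z0-9_.-]/, "_") + ".tar"
end

# Commands to run after docker-compose up -d: save images not yet cached.
def save_commands(images, dir)
  cached = cached_image_archives(dir).map { |path| File.basename(path) }
  images.reject { |image| cached.include?(archive_name(image)) }.map do |image|
    target = File.join(dir, archive_name(image))
    "docker save -o #{Shellwords.escape(target)} #{Shellwords.escape(image)}"
  end
end
```

In a provisioning step, the directory would come from ENV["DOCKER_IMAGEDIR_PATH"], and the list of images could be read from the output of docker images once the containers are up.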

Conclusion

After running into all these challenges and finding solutions to them, we now have a simple Vagrantfile we can copy into each of our projects in order to provide a consistent development experience regardless of what operating system the developer is using. We’re using it in multiple projects today and have even gotten to the stage of continuous integration and blue/green deployment to Amazon Elastic Container Service in production (but those are topics for another article).

I expect we’ll face more challenges as time goes by and our projects change, and as we do we’ll continue to evolve our solutions to account for them. Our Vagrantfile is open source, and we welcome suggestions and contributions.

Feel free to post your questions and comments below to make this article more meaningful, and hopefully we can address issues you’re facing too.

Resources

- Docker

- Docker Compose

- Docker Toolbox

- VirtualBox

- Vagrant

- boot2docker

- Custom boot2docker vagrant box with Guest Additions installed

- Our vagrant-boot2docker project


| Reference: | Creating a Cross-platform Docker Development Environment from our JCG partner Phillip Shipley at the Codeship Blog. |