Five Reasons Why High Performance Computing (HPC) startups will explode in 2015

1. The size of the social networks grew beyond any rational expectations

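Facebook (FB) official stats state that FB has 1.32 billion monthly active users and 1.07 billion mobile monthly active users. Approximately 81.7% of them are outside the US and Canada. Adding the two figures gives a combined 2.4 billion users, although mobile users are also counted among the monthly actives. FB manages all of this with only 7,185 employees.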

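The United Nations estimated the world population at 7.243 billion as of 1 July 2014. Measured against the combined 2.4 billion figure, 33% of the world population is on FB (about 18% if only the 1.32 billion monthly active users are counted). This denominator includes every person alive, infants included, regardless of whether they are literate.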
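The percentages above are simple ratios. This quick Python check uses only the figures quoted in this section and computes the share twice: once for the combined 2.4 billion and once for the 1.32 billion monthly actives alone, since the two counts overlap.

```python
# Figures quoted in the text (Facebook stats, UN estimate for 1 July 2014)
monthly_active = 1.32e9         # all monthly active users
mobile_monthly_active = 1.07e9  # mobile monthly active users (overlaps the above)
world_population = 7.243e9

def share(users: float, population: float) -> float:
    """Return users as a percentage of population."""
    return 100 * users / population

combined = monthly_active + mobile_monthly_active  # the "2.4 billion" figure
combined_pct = share(combined, world_population)
mau_pct = share(monthly_active, world_population)

print(f"combined: {combined_pct:.1f}%")  # about 33%
print(f"MAU only: {mau_pct:.1f}%")       # about 18%
```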

Google reports 540 million users per month and 1.5 billion photos uploaded per week. Add Twitter, Quora, Yahoo and a few others, and we reach more than 3 billion people who write emails, chat, tweet, ask and answer questions, read books, watch movies and TV, and so on.

Now we have a de facto measurable collective unconscious of this world, ready to be analyzed. It contains information about something inside us that we are not aware we have. This rather extravagant idea comes from Carl Jung, about 70 years ago. We should take him seriously: his teachings led to the development of the Myers-Briggs indicator and a myriad of other personality and vocational tests that many people find remarkably accurate.

Social media profits depend on meaningful information. FB reported revenues of $2.91 billion for Q2 2014, of which only $0.23 billion came from user payments or fees. The remaining $2.68 billion, about 92% of all revenue, is processed information monetized through advertising and other related services.
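The advertising share follows directly from the two quoted revenue figures. A minimal check in Python, using only the numbers above (in billions of USD):

```python
revenue_q2_2014 = 2.91      # total Q2 2014 revenue, billions of USD (as quoted)
payments_and_fees = 0.23    # revenue from user payments or fees, billions of USD

# Everything that is not a user payment or fee: advertising and related services
advertising_and_other = revenue_q2_2014 - payments_and_fees
ad_share_pct = 100 * advertising_and_other / revenue_q2_2014

print(f"{advertising_and_other:.2f} billion USD, {ad_share_pct:.0f}% of revenue")
```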

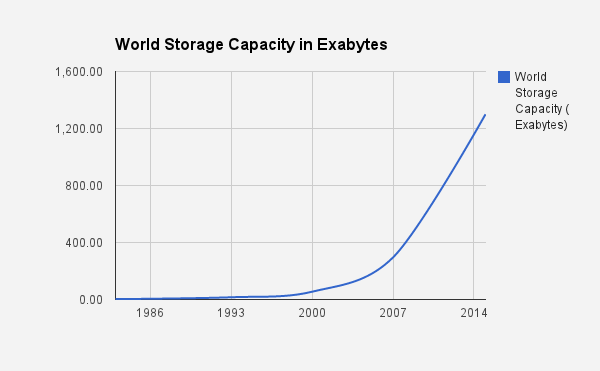

The tools of traditional Big Data (the only data there is, is big data) are no longer sufficient. A few years ago we were talking about the 100-million-user range; now data sets reach exabyte and zettabyte dimensions.

1 EB = 1000^6 bytes = 10^18 bytes = 1 000 000 000 000 000 000 B = 1 000 petabytes = 1 million terabytes = 1 billion gigabytes

1 ZB = 1,000 EB
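The definitions above use decimal SI prefixes, where each step is a factor of 1000. A short Python check confirms the conversions and, for contrast, compares them with the binary exbibyte (EiB, 2^60 bytes), which is about 15% larger.

```python
# Decimal (SI) prefixes: each step up is a factor of 1000.
KB, MB, GB, TB, PB, EB, ZB = (1000**n for n in range(1, 8))
EIB = 2**60  # binary exbibyte, shown only for comparison

print(EB == 10**18, EB // PB, EB // TB, EB // GB, ZB // EB)
print(f"1 EiB is {EIB / EB:.3f} EB")
```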

I compiled this chart from published information. It shows the growth of the world’s storage capacity over the years, assuming optimal compression. The 2015 value is extrapolated from Cisco figures and crosses one zettabyte.

2. The breakthrough in high throughput and high performance computing

The successful search for the Higgs boson exceeded anything before it in the volume of data analyzed:

The amount of data collected by the ATLAS detector at the Large Hadron Collider (LHC) at CERN, Geneva, is described like this:

If all the data from ATLAS would be recorded, this would fill 100,000 CDs per second. This would create a stack of CDs 450 feet high every second, which would reach to the moon and back twice each year. The data rate is also equivalent to 50 billion telephone calls at the same time. ATLAS actually only records a fraction of the data (those that may show signs of new physics) and that rate is equivalent to 27 CDs per minute.

It took 20 years and 6,000 scientists. They built a computing grid with 200 PB of disk capacity and 300,000 cores, with most of its 150 computing centers connected by 10 Gbps links.
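The ATLAS and grid figures are easier to compare once converted to bytes per second. The sketch below assumes 700 MB per CD (my assumption; the quote does not say) and also estimates how long the grid’s 200 PB would take to cross a single 10 Gbps link at full line rate, which is one way to see why the work is spread over many centers.

```python
# Assumption: one CD holds 700 MB (a common 80-minute CD-R).
CD_BYTES = 700 * 10**6
PB = 10**15
SECONDS_PER_YEAR = 365 * 24 * 3600

def cd_rate(cds: int, seconds: int) -> float:
    """Bytes per second for `cds` CDs filled every `seconds` seconds."""
    return cds * CD_BYTES / seconds

full_rate = cd_rate(100_000, 1)   # if ATLAS recorded everything
recorded_rate = cd_rate(27, 60)   # what ATLAS actually keeps

# Time to push 200 PB through one 10 Gbps link,
# assuming full line rate and no protocol overhead.
transfer_seconds = 200 * PB * 8 / (10 * 10**9)
transfer_years = transfer_seconds / SECONDS_PER_YEAR

print(f"full: {full_rate / 10**12:.0f} TB/s, recorded: {recorded_rate / 10**6:.0f} MB/s")
print(f"kept fraction: {recorded_rate / full_rate:.1e}")
print(f"200 PB over 10 Gbps: about {transfer_years:.1f} years")
```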

A newer idea, the Dynamic Data Center Concept, has not yet become mainstream, but it would be great if it did.

I describe this concept in a separate blog entry. Imagine every computer and laptop in the world plugged into a worldwide cloud when not in use, and withdrawn as easily as a USB storage stick. It is mind-boggling, but one day it will be reality.
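To make the plug-in/withdraw idea concrete, here is a toy model in Python. It is not the Dynamic Data Center Concept itself (that design is described elsewhere); the class `DynamicPool` and its `join`, `withdraw` and `finish` methods are hypothetical names. Machines join when idle, and if one is unplugged mid-task, its task goes back to the front of the queue for the next available machine.

```python
from collections import deque

class DynamicPool:
    """Hypothetical toy model: machines join when idle and may withdraw at any time."""

    def __init__(self) -> None:
        self.pending: deque[str] = deque()   # tasks waiting for a machine
        self.running: dict[str, str] = {}    # machine -> task it is running
        self.idle: set[str] = set()          # joined machines with no task
        self.done: list[str] = []            # completed tasks, in order

    def submit(self, task: str) -> None:
        self.pending.append(task)
        self._dispatch()

    def join(self, machine: str) -> None:
        self.idle.add(machine)
        self._dispatch()

    def withdraw(self, machine: str) -> None:
        """Machine unplugged: its unfinished task returns to the front of the queue."""
        self.idle.discard(machine)
        task = self.running.pop(machine, None)
        if task is not None:
            self.pending.appendleft(task)
        self._dispatch()

    def finish(self, machine: str) -> None:
        self.done.append(self.running.pop(machine))
        self.idle.add(machine)
        self._dispatch()

    def _dispatch(self) -> None:
        # Hand pending tasks to idle machines; min() keeps the example deterministic.
        while self.pending and self.idle:
            machine = min(self.idle)
            self.idle.remove(machine)
            self.running[machine] = self.pending.popleft()

pool = DynamicPool()
for t in ["t1", "t2", "t3"]:
    pool.submit(t)
pool.join("laptop-a")      # laptop-a starts t1
pool.join("laptop-b")      # laptop-b starts t2
pool.withdraw("laptop-a")  # laptop-a is unplugged; t1 goes back to the queue
pool.finish("laptop-b")    # t2 is done; laptop-b picks up t1
print(pool.running, pool.done, list(pool.pending))
```

The key design choice is that a withdrawal never loses work: the task is simply re-queued, so machines can come and go as freely as the paragraph above imagines.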

3. The explosion of HPC startups in San Francisco, California

There is a new generation of high performance computing physicists who sense the affinity between social networks and supercomputing. All are around 30 years old, and you can meet some of them at this meetup. Many come from Stanford and Berkeley and worked on the Open Science Grid (OSG) or at Fermilab before settling on the West Coast. Others are talented Russians, with the same talent as Sergey Brin of Google, who are now happily American. Some extraordinary faces are from China and India.

San Francisco is a place where being crazy is normal. Actually, for me everyone is “normal” in San Francisco. HPC needs a city like this to rejuvenate HPC thinkers and break away from the mentality of spending big bucks on gargantuan infrastructure, like the palace of Ceausescu in Romania. The dictator had some 19 churches, six synagogues and 30,000 homes demolished. No one knew what to do with the palace; the dilemma was whether to make it a shopping center or the Romanian Parliament. Traditional HPC has similar stories, like Waxahachie, Texas, where the Superconducting Super Collider was abandoned.

Watch what I say about user experience in this video. 95% of scientists do not have access to supercomputing marvels. I say we must make high performance computing accessible to every scientist. In its ultimate incarnation, any scientist could run Higgs-like event searches on smaller data sets and succeed most of the time.

Look at PiCloud, for example. It is clear how it works: everything is written in Python. It is clear how much it costs. They still offer serious solutions for academia and HPC.
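Part of PiCloud’s appeal was that you handed it an ordinary Python function. I won’t reproduce PiCloud’s own API here; as a local stand-in, the standard library’s `concurrent.futures` shows the same submit-a-function, collect-a-result pattern. The function `count_mentions` and the sample posts are hypothetical, and local threads play the role of remote cloud workers.

```python
from concurrent.futures import ThreadPoolExecutor

def count_mentions(posts: list[str], word: str) -> int:
    """An ordinary Python function: count posts that mention a word."""
    return sum(word in post.lower() for post in posts)

# Hypothetical batches of social media posts
batches = [
    ["Higgs found!", "lunch"],
    ["higgs again", "HIGGS", "cats"],
]

# Local threads stand in for remote cloud workers in this sketch.
with ThreadPoolExecutor(max_workers=4) as pool:
    futures = [pool.submit(count_mentions, batch, "higgs") for batch in batches]
    counts = [f.result() for f in futures]

print(counts, sum(counts))
```

The point is the user experience: the scientist writes a plain function and submits it, with no job scripts or scheduler configuration in sight.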

For comparison, look at the HTCondor documentation: go through the installation, or try to learn something called DAGMan. Features were simply added; no one paid attention to making them easy to learn and use.
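At its core, DAGMan runs jobs in dependency order, which a DAGMan input file expresses with `JOB` and `PARENT ... CHILD` lines. The idea itself is small, as this Python sketch using the standard library’s `graphlib` shows; it is not DAGMan, and the job names are hypothetical (fetch data, two independent analyses, then a merge).

```python
from graphlib import TopologicalSorter

# Each job maps to the set of jobs that must finish before it can start.
workflow = {
    "analyze_a": {"fetch"},
    "analyze_b": {"fetch"},
    "merge": {"analyze_a", "analyze_b"},
}

# static_order() yields every job after all of its prerequisites.
order = list(TopologicalSorter(workflow).static_order())
print(order)
```

The two analyses may come out in either order, since neither depends on the other; a real scheduler would run them in parallel.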

I have worked with HTCondor engineers, and let me say it: they are among the finest I have ever met. All they need is consistent exposure to San Francisco.

4. Can social network giants acquire HPC/HTC competency through HR?

No, they can’t. Individual HPC employees recruited through HR will not create a new culture. They will mimic the dominant thinking inside their groups and lose their original identity and creativity. As Dropbox wisely discovered, the secret is to acquihire: build an internal core competency by acquiring a startup that delivers something the company does not have yet.

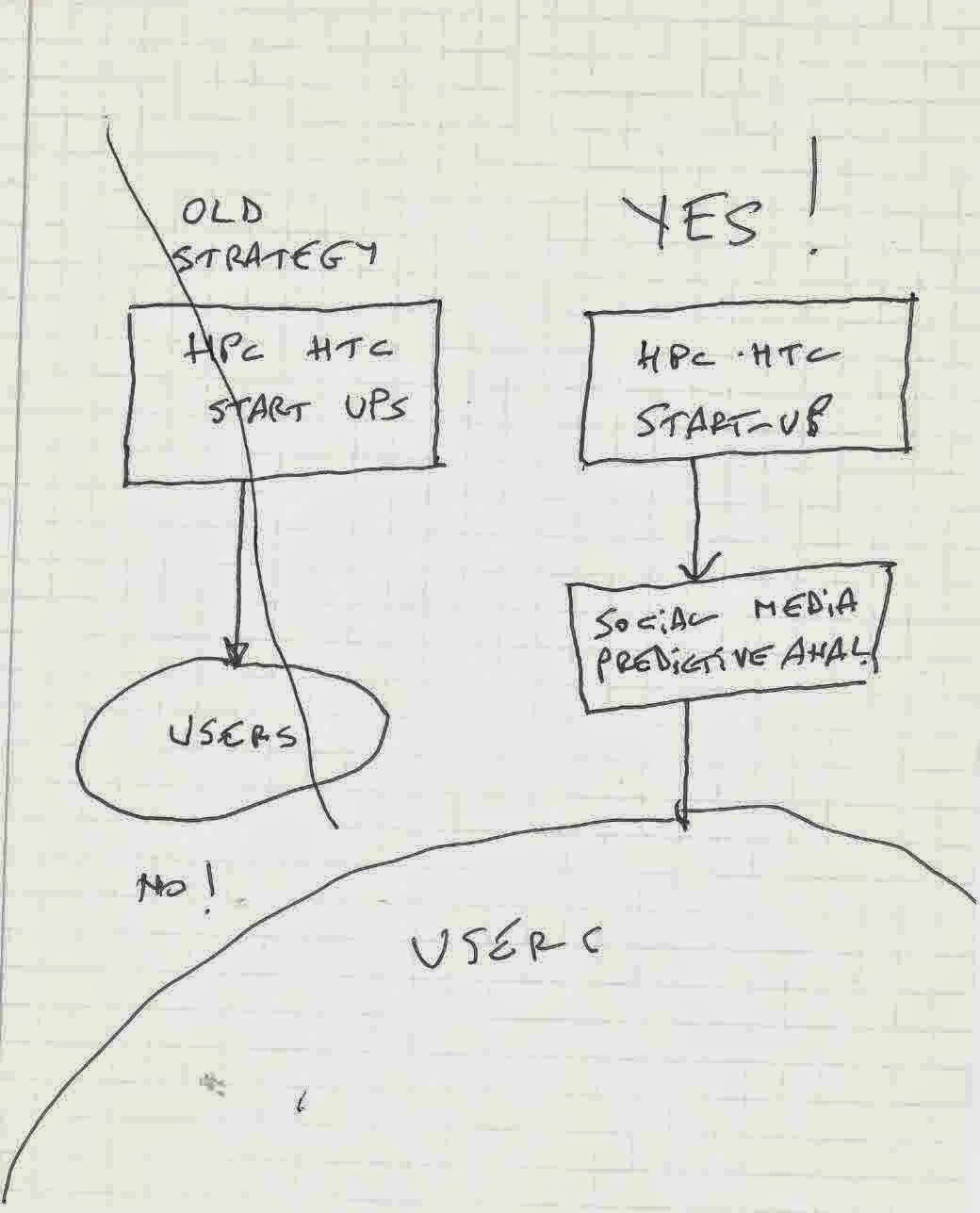

5. The strategy to make HPC/HTC startups successful

Yes, it is hard for a startup like PiCloud to reach 1 million users. Actually, it is impossible.

But PiCloud technology can deliver hundreds of millions of dollars through golden discoveries made with HPC/HTC inside social companies that already have 100 million users or more.

The lesson is this: HPC/HTC cannot parrot the social media business model of accumulating millions, never mind billions, of users.

Success is not made up of features. Success is about making someone happy. You have to know that someone. Social networks are experts at making everything they offer easy to use.

And HPC/HTC should make the social media companies happy. Only through this symbiosis, with HPC/HTC on one side and social media plus predictive analytics everywhere on the other, will high performance computing be financially successful as a minimum viable product (MVP).

Reference: Five Reasons Why High Performance Computing (HPC) startups will explode in 2015 from our JCG partner Miha Ahronovitz at The memories of a Product Manager blog.

Yes, I’m already developing my Facebook and Google as we speak, and I’m planning to build the Large Hadron Collider next week. I’m expecting the HPC explosion any time now.

Seriously guys, does anybody think that any of this Big Data stuff is actually relevant for more than 0.5% of developers reading this article?

Interesting? Yes. Practical? No.

@Martin Venek: Watch

Video: Notes About User Experience in HPC | The memories of a Product Manager

http://bit.ly/1DsOnFp

You probably heard the expression: “only in America”

:-)

Miha