Building a nirvana

What started three years ago as a single Ant file has grown into a hundred build tasks that take more than eight hours to complete when run sequentially. In this post we describe some of the tools and techniques we have applied in our build infrastructure.

We had four goals in mind when creating our build:

- Automated functional tests. We employ no dedicated QA personnel and intend to keep it that way.

- Automated compatibility tests. Plumbr is used on more than 100 different platforms, so we need those environments to be created and tested automatically.

- Release automation. We do not want to stay up late in the evening manually going through the steps of a production update, so we need to automate everything along the way.

- Feature isolation. We want to isolate new features from the stable codebase, so we can test the added features in isolation and merge the code to stable only when the change has matured.

I can say we have achieved all four. But it has definitely not been a walk in the park – the sheer number of tools and technologies used is not for the faint-hearted, as the following story shows.

Version Control

First and foremost – our source code is kept in a Mercurial repository. New features are isolated into feature branches; bug fixes are applied directly to the default branch. When we release a version, we just tag the corresponding changeset in the default branch. We have two different Mercurial repositories – one for our Java projects and one for the native code.

To keep things simple, the rest of the post focuses on how we build the Java projects and treats the native build as an external dependency.

Build artifacts

Our application consists of two separate build artifacts:

- plumbr.war – user interface of the product, packaged as a standard Java EE web application

- plumbr.zip, consisting of two different parts:

  - plumbr.jar – the Java agent attached to the Java process, responsible for monitoring performance.

  - platform-specific native libraries (dll, so, jnilib) – native agents attached to the Java process.

The artifacts are published to an artifact repository. We use Artifactory for this.

Build tools

Build orchestration

Here we use Jenkins to monitor the changes in our VCS repository. Whenever a new changeset is detected, Jenkins is responsible for pulling the relevant change and running the required builds for it.

The build starts by building the source code. We use a multi-project Gradle script to build the Java artifacts (JAR and WAR) and run unit tests. Unit tests are run as TestNG tests.

Next in line is acquiring the native dependencies. The pre-built natives are downloaded from the Artifactory repository and placed next to the built JAR right before composing plumbr.zip.

Now we have the Plumbr client (packaged in a downloadable ZIP) and server (packaged as a WAR) ready to be published to Artifactory.
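The packaging step described above can be sketched roughly as follows. This is a minimal illustration in Python, not our actual Gradle script: the function name `compose_client_zip` and the flat archive layout (natives stored at the archive root, next to the JAR) are assumptions made for the example.

```python
import zipfile
from pathlib import Path
from typing import Iterable


def compose_client_zip(jar: Path, natives: Iterable[Path], out_zip: Path) -> Path:
    """Bundle the agent JAR and pre-built native libraries into a single ZIP.

    Assumption for this sketch: every file is stored at the archive root,
    so the natives end up "next to" the JAR.
    """
    with zipfile.ZipFile(out_zip, "w", zipfile.ZIP_DEFLATED) as archive:
        # The freshly built JAR goes in first.
        archive.write(jar, arcname=jar.name)
        # The natives were downloaded earlier; here they are only copied in.
        for native in natives:
            archive.write(native, arcname=native.name)
    return out_zip
```

Keeping the natives as pre-built inputs means the Java build never has to compile native code itself, which matches treating the native build as an external dependency.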

Acceptance build

We now have our deliverables in Artifactory (as a WAR and a ZIP) and we know that the unit tests have passed for them. But we are nowhere near a production launch yet, as we have not run the cross-platform acceptance tests on the deliverables.

For this, we have scheduled acceptance jobs in Jenkins running each night. These jobs start by checking whether a new artifact has been published since the last acceptance test run. If there is at least one, the newest of those artifacts is used for the acceptance test run.
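The selection logic of the nightly job can be sketched as below. This is only an illustration of the rule described above; the `Artifact` type, its fields and the function name are hypothetical, and the real job would query Artifactory rather than an in-memory list.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass(frozen=True)
class Artifact:
    # Hypothetical record; a real job would read this from the repository.
    name: str
    published_at: datetime


def pick_artifact_to_test(
    artifacts: Iterable[Artifact], last_run: datetime
) -> Optional[Artifact]:
    """Return the newest artifact published after the last acceptance run.

    Returns None when nothing new was published, in which case the
    nightly job has nothing to do.
    """
    candidates = [a for a in artifacts if a.published_at > last_run]
    if not candidates:
        return None
    # Only the newest build is tested; older unverified builds are skipped.
    return max(candidates, key=lambda a: a.published_at)
```

Testing only the newest artifact keeps the nightly run bounded, no matter how many builds were published during the day.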

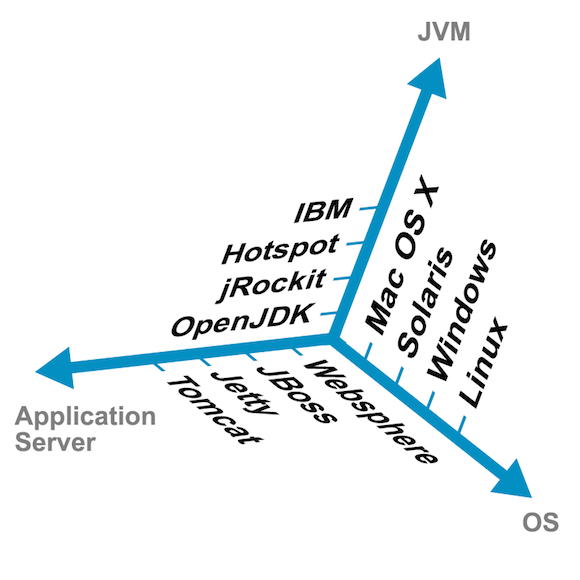

For this, we need the infrastructure. We need to support different operating systems – Mac OS X, Linux, Windows and Solaris – so we need a machine for each of them. But the platform complexities do not stop at the OS level. Each of the following can be thought of as a separate dimension in our build matrix:

- bitness – 32-bit and 64-bit environments

- different Java versions (6, 7, 8)

- different vendors (Oracle HotSpot, Oracle JRockit, IBM, OpenJDK)

- different Java EE containers (Tomcat, Jetty, JBoss, et al.)

Based on platform popularity, we have cut some corners here and there. But even with the trimmed matrix, we have 120 different platforms on which our acceptance tests must pass.

We create the virtual machines needed for the tests with Vagrant scripts. Each script results in a virtual machine with an OS installed. Next in line are the Ansible playbooks, which install the required JVM and application server on the Vagrant-created box.
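To give a feel for how quickly such a matrix grows, here is a small sketch that enumerates the dimensions listed above and then trims the result. The dimension values come from this post (with only the three named containers), but the trimming rule in `is_supported` is purely hypothetical and is not the rule set we actually use, so the resulting count does not match our 120 platforms.

```python
from itertools import product

OPERATING_SYSTEMS = ["Mac OS X", "Linux", "Windows", "Solaris"]
BITNESS = [32, 64]
JAVA_VERSIONS = [6, 7, 8]
VENDORS = ["Oracle HotSpot", "Oracle JRockit", "IBM", "OpenJDK"]
CONTAINERS = ["Tomcat", "Jetty", "JBoss"]  # the real list is longer ("et al.")


def is_supported(os_name, bits, java, vendor, container):
    """Hypothetical trimming rule, for illustration only."""
    # Assumed example rule: test JRockit only with Java 6.
    if vendor == "Oracle JRockit" and java != 6:
        return False
    return True


def full_matrix():
    """Every combination of the five dimensions."""
    return list(
        product(OPERATING_SYSTEMS, BITNESS, JAVA_VERSIONS, VENDORS, CONTAINERS)
    )


def trimmed_matrix():
    """The combinations left after applying the trimming rule."""
    return [combo for combo in full_matrix() if is_supported(*combo)]
```

With these values the untrimmed matrix already has 4 × 2 × 3 × 4 × 3 = 288 combinations, which is why cutting corners based on platform popularity is unavoidable.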

Now we have our infrastructure ready for the acceptance tests to run. Acceptance tests, in short, are a set of applications that are either deployed to an application server or run in standalone mode. Plumbr is then attached to the application and users are emulated to verify that we are indeed able to find all known performance problems in those applications.

Release

When the acceptance tests have passed, we are ready to release, and we immediately trigger a production update with the help of LiveRebel:

- Redeploying a new UI as a WAR file. This way we have a new version of the user interface released on a nightly basis.

- Updating the nightly version of the Plumbr client (packaged as plumbr.zip) in production.

So whenever the acceptance tests have passed during the night, there is a new version of Plumbr available for our users.

Conclusion

We can assure you we have achieved our goals.

- Production is updated at 7 AM each morning without any human intervention. When we arrive at the office we can just enjoy the fresh version in production. Or, as I have to admit has happened once during the last 60 days, fix what was broken.

- We test on 120 different environments during the acceptance phase. All this with no human intervention. As the infrastructure is built orthogonally to the tests themselves, supporting more platforms is relatively easy.

- Testing new features is as easy as pressing a single button in the build orchestration software, after which a new environment is created from scratch with all the required infrastructure and the proper application versions. When the product owner has validated the deliverable, we just merge it back to the default branch and let the automation take it from there.

- Regression tests run without any human intervention as a set of unit/acceptance tests.

If it all looks like a nirvana, then – indeed, it is a nice thing to have. We still have some issues to iron out, but all in all we are rather happy with the maturity we have achieved. But before you decide that “yes, this is what I want”, I must warn you – there is a price for this pleasure.

First and foremost, your team must feel comfortable with the technologies. Vagrant, Ansible, LiveRebel, TestNG, Gradle, Jenkins, Artifactory – this is actually just a subset of the tools keeping our build together. Once you feel your team is mature enough to tackle the challenge, the next obstacle is the sheer amount of time you need to spend.

The build infrastructure itself can be built in just a couple of man-months. But the cost really kicks in at the moment when you require the tests themselves to be reliable enough to support automated release cycles. And this is something that needs large investments. As of now we spend 20% of our engineering time on creating and maintaining the tests. And I can assure you that, especially in the beginning, it does not look like time well spent. On the other hand, if you check what we have achieved, maybe it is something you can consider …

Reference: Building a nirvana from our JCG partner Nikita Salnikov Tarnovski at the Plumbr Blog.