Finding long tail suggestions using Lucene’s new FreeTextSuggester

Lucene’s suggest module offers a number of fun auto-suggest implementations to give a user live search suggestions as they type each character into a search box.

For example, WFSTCompletionLookup compiles all suggestions and their weights into a compact Finite State Transducer, enabling fast prefix lookup for basic suggestions.

AnalyzingSuggester improves on this by using an Analyzer to normalize both the suggestions and the user’s query so that trivial differences in whitespace, casing, stop-words, synonyms, as determined by the analyzer, do not prevent a suggestion from matching.

Finally, AnalyzingInfixSuggester goes further by allowing infix matches so that words inside each suggestion (not just the prefix) can trigger a match. You can see this one action at the Lucene/Solr Jira search application (e.g., try “python”) that I recently created to eat our own dog food. It is also the only suggester implementation so far that supports highlighting (this has proven challenging for the other suggesters).

Yet, a common limitation to all of these suggesters is that they can only suggest from a finite set of previously built suggestions. This may not be a problem if your suggestions are past user queries and you have tons and tons of them (e.g., you are Google). Alternatively, if your universe of suggestions is inherently closed, such as the movie and show titles that Netflix’s search will suggest, or all product names on an e-commerce site, then a closed set of suggestions is appropriate.

N-Gram language models

For everyone else, where a high percentage of the incoming queries fall into the never-seen-before long tail, Lucene’s newest suggester, FreeTextSuggester, can help! It uses the approach described in this Google blog post.

Rather than precisely matching a previous suggestion, it builds up a simple statistical n-gram language model from all suggestions and looks at the last tokens (plus the prefix of whatever final token the user is typing, if present) to predict the most likely next token.

For example, perhaps the user’s query so far is: “flashforge 3d p”, and because flashforge is an uncommon brand of 3D printer, this particular suggestion prefix was never added to the suggester. Yet, “3d printer” was a frequently seen phrase in other contexts (different brands). In this case, FreeTextSuggester will see “3d” and the “p” prefix for the next token and predict printer, even though “flashforge 3d printer” was never explicitly added as a suggestion.

You specify the order (N) of the model when you create the suggester: larger values of N require more data to train properly but can make more accurate predictions. All lower order models are also built, so if you specify N=3, you will get trigrams, bigrams and unigrams, all compiled into a single weighted FST for maximum sharing of the text tokens. Of course, larger N will create much larger FSTs. In practice N=3 is the highest you should go, unless you have tons of both suggestions to train and RAM to hold the resulting FST.

To handle sparse data, where a given context (the N-1 prior words) was not seen frequently enough to make accurate predictions, the suggester uses the stupid backoff language model (yes, this is really its name, and yes, it performs well!).

I expect the best way to use this new FreeTextSuggester will be as a fallback: you would first use one of the existing exact match suggesters, but when those suggesters fail to find any suggestions for a given query, because it’s “unusual” and has crossed over into the long tail, you then fall back to FreeTextSuggester.

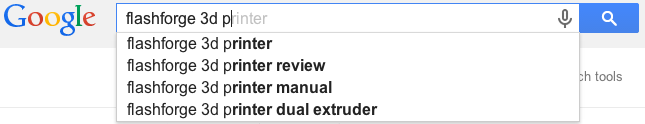

Google seems to use such a modal approach to suggestions as well: if you type “flashforge 3d p” you should see something like this, where each suggestion covers your entire query so far (indeed, Google has heard of the flashforge brand of 3d printer!):

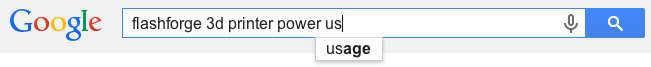

But then if you keep typing and enter “flashforge 3d printer power u”, the suggestions change: instead of suggesting an entire query, matching everything I have typed, Google instead suggests the last word or two:

As usual, this feature is very new and likely to contain exciting bugs! See the Jira issue, LUCENE-5214, for details. If you play with this new suggester please start a discussion on the Lucene’s user list!