Micro jitter, busy waiting and binding CPUs

Performance profiling a new machine

When I work on a new machine, I like to get an understanding of its limitations. In this post I look at the jitter on a new PC I built this weekend, and at the impact of busy waiting on it. The specs of the machine are interesting but not the purpose of the post. Nevertheless, they are:

- i7-3970X six-core CPU running at 4.5 GHz (with Hyper-Threading turned on)

- 32 GB of DDR3-1600 memory

- An OCZ RevoDrive 3 PCIe SSD (actual write bandwidth of 600 MB/s)

- Ubuntu 13.04

Note: the OCZ RevoDrive is not officially supported on Linux, but it is much cheaper than the OCZ models that are.

Tests for jitter

My micro jitter sampler looks at interruptions to a running thread. It is similar to jHiccup, but instead of measuring how late a thread is in waking up, it measures how much a thread is delayed once it has started running. Surprisingly, how you run a thread affects the kind of delays it sees once it wakes up.
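The idea of measuring delays to a thread that is already running can be illustrated with a busy-spin loop: read the clock as fast as possible, and any unusually large gap between two consecutive readings is time the thread did not get. This is a minimal sketch under my own assumptions, not the author's sampler; the class name `JitterSampler`, the 2 µs threshold and the bucket boundaries are all hypothetical choices for illustration.

```java
import java.util.concurrent.TimeUnit;

// Hypothetical jitter sampler (illustrative sketch, not the author's code):
// busy-spin on System.nanoTime() and treat any gap between consecutive
// readings above a threshold as a delay imposed on the running thread.
class JitterSampler {
    // Assumed bucket upper bounds in nanoseconds; one extra bucket catches the rest.
    static final long[] BOUNDS_NS = {5_000, 10_000, 20_000, 50_000, 100_000, 1_000_000};

    final long[] counts = new long[BOUNDS_NS.length + 1];

    // Spins for durationNs, recording every gap of at least thresholdNs.
    // Returns the time actually spent sampling.
    long sample(long durationNs, long thresholdNs) {
        long start = System.nanoTime();
        long prev = start;
        long now;
        // Compare by subtraction, the recommended way to compare nanoTime() values.
        while ((now = System.nanoTime()) - start < durationNs) {
            long gap = now - prev;
            if (gap >= thresholdNs) {
                record(gap);
            }
            prev = now;
        }
        return now - start;
    }

    // Adds a gap to the first bucket whose upper bound it does not exceed.
    void record(long gapNs) {
        int i = 0;
        while (i < BOUNDS_NS.length && gapNs > BOUNDS_NS[i]) {
            i++;
        }
        counts[i]++;
    }

    public static void main(String[] args) {
        JitterSampler sampler = new JitterSampler();
        long sampledNs = sampler.sample(TimeUnit.SECONDS.toNanos(10), 2_000);
        System.out.printf("sampled for %.3f s%n", sampledNs / 1e9);
        for (int i = 0; i < sampler.counts.length; i++) {
            String label = i < BOUNDS_NS.length
                    ? "<= " + BOUNDS_NS[i] / 1_000 + " us"
                    : ">  " + BOUNDS_NS[BOUNDS_NS.length - 1] / 1_000 + " us";
            System.out.println(label + ": " + sampler.counts[i]);
        }
    }
}
```

Because the loop never blocks, any gap it sees comes from the OS or hardware taking the CPU away, not from the thread choosing to wait.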

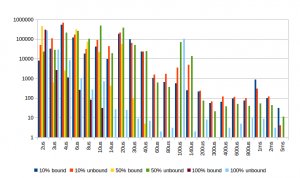

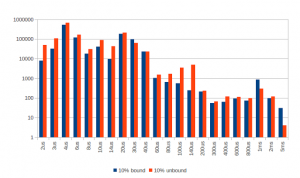

This chart is a bit dense. It shows the average number of interruptions in each range of durations per CPU hour (each test ran for more than two clock hours). The raw data is available HERE
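Tests that are busy for different fractions of the time accumulate different amounts of sampling time, so raw counts have to be scaled before they can be compared. Assuming "CPU hour" means an hour of time the sampler was actually running (my reading; the post does not spell out the calculation), the scaling is a simple ratio. `PerCpuHour` is a hypothetical helper name.

```java
// Hypothetical helper: scale a raw count to "per CPU hour", assuming a CPU hour
// is an hour of time the sampler was actually running.
class PerCpuHour {
    static final double NANOS_PER_HOUR = 3_600e9;

    static double perCpuHour(long count, long sampledNanos) {
        return count * NANOS_PER_HOUR / sampledNanos;
    }

    public static void main(String[] args) {
        // Made-up example: 500 delays seen during 30 minutes of sampling time.
        System.out.println(perCpuHour(500, 1_800_000_000_000L)); // prints 1000.0
    }
}
```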

The interesting differences are in how the OS handles threads that are bound to an isolated CPU and/or busy waiting.

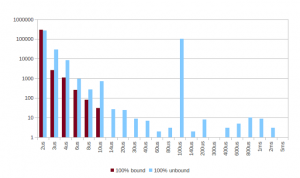

Busy waiting

In the case of busy waiting, binding to an isolated core really helps reduce the number of longer delays.

These tests were run at the same time. The only difference is that the “bound” thread was bound to a CPU listed in the “isolcpus” kernel parameter, and the other hyper-thread on the same core was isolated as well, i.e. the whole core was isolated.

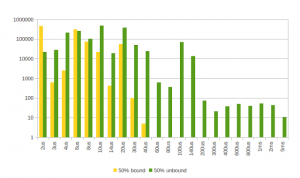

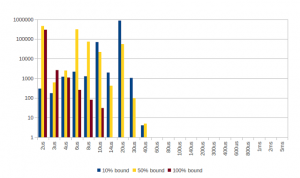

Fairly busy – 50%

In this case, the thread alternated between sampling for 1 millisecond and sleeping for 1 millisecond.
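The alternating pattern can be sketched as a loop that spins for a fixed time and then gives up the CPU for a fixed time. This is an illustrative sketch, not the author's harness: the class name `DutyCycleSampler` is hypothetical, it only tracks the largest gap rather than a full histogram, and I use `LockSupport.parkNanos` as the "sleep", which is an assumption about how the thread gives up the CPU.

```java
import java.util.concurrent.locks.LockSupport;

// Hypothetical duty-cycle driver: spin-sample for busyNs, then give up the CPU for idleNs.
class DutyCycleSampler {
    long maxGapNs;    // largest gap between clock readings seen while spinning
    long busyNsTotal; // total time actually spent sampling

    void run(int cycles, long busyNs, long idleNs) {
        for (int c = 0; c < cycles; c++) {
            long start = System.nanoTime();
            long prev = start;
            long now;
            // Any delay right after waking up shows up here as a large gap.
            while ((now = System.nanoTime()) - start < busyNs) {
                maxGapNs = Math.max(maxGapNs, now - prev);
                prev = now;
            }
            busyNsTotal += now - start;
            // Give up the CPU; parkNanos may return early (spuriously) or late.
            LockSupport.parkNanos(idleNs);
        }
    }

    public static void main(String[] args) {
        DutyCycleSampler s = new DutyCycleSampler();
        // Roughly 50% busy: sample 1 ms, sleep 1 ms, for about 2 seconds.
        s.run(1_000, 1_000_000, 1_000_000);
        System.out.printf("busy %.3f s, max gap %d us%n", s.busyNsTotal / 1e9, s.maxGapNs / 1_000);
    }
}
```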

The unbound 50% busy thread had far fewer delays of around 2 microseconds, but significantly more of the longer delays.

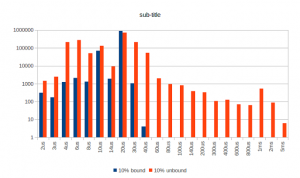

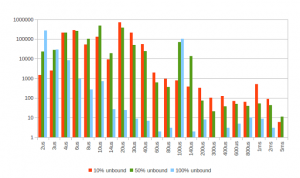

Slightly busy – 10%

In this test, the sampler runs for 0.111 milliseconds and sleeps for 1 millisecond. Even in this case, binding to an isolated CPU makes a difference.
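The 0.111 ms figure gives a busy fraction of about 10%, in the same way that 1 ms on and 1 ms off gives 50%. This simple ratio ignores any oversleep on wake-up, so the real fraction may be slightly lower:

$$
\text{busy fraction} = \frac{t_\text{busy}}{t_\text{busy} + t_\text{sleep}} = \frac{0.111\ \text{ms}}{0.111\ \text{ms} + 1\ \text{ms}} \approx 0.0999 \approx 10\%,
\qquad
\frac{1\ \text{ms}}{1\ \text{ms} + 1\ \text{ms}} = 50\%
$$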

Binding but not isolating – 10%

In this case, the bound thread was not isolated. It was bound to a CPU that was not isolated, on a core whose other CPU was not free either. It appears that, in this test, binding alone makes little difference compared with not binding at all.

Comparing bound and isolated threads

Something I have seen before, but find a little strange, is that if you give up the CPU, your thread performs badly when it wakes up. Previously I had put this down to the caches not being warm, but the code does very little memory access and is very short, so this is still possible but unlikely. The peak at 20 microseconds, at one million per hour, could be due to a delay which happens on every fourth wake-up. At 4.5 GHz that is about 90,000 clock cycles, which seems like a lot for a cache miss.
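The cycle count follows directly from the clock speed. For comparison, assuming a main-memory cache miss costs on the order of 100 ns (a typical ballpark, not something measured here), a single miss is only a few hundred cycles:

$$
20\ \mu\text{s} \times 4.5\ \text{GHz} = (20 \times 10^{-6}\ \text{s}) \times (4.5 \times 10^{9}\ \text{cycles/s}) = 90{,}000\ \text{cycles}
$$

$$
100\ \text{ns} \times 4.5\ \text{GHz} = 450\ \text{cycles}, \qquad \frac{90{,}000}{450} = 200
$$

So the delay would correspond to roughly 200 such misses, which is a lot for code that barely touches memory.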

Comparing unbound threads

This chart suggests it really helps to be greedy with the CPU even if you are not bound. Busy threads get interrupted less. It is hard to say that 50% busy is better than 10% busy. It might be, but a longer test would be needed (I would say the difference is within the margin of error).
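One rough way to judge whether a difference in counts is within the margin of error, assuming interruptions arrive independently so each count is roughly Poisson distributed (an assumption, not something the post tested), is a z-score on the two rates. `RateComparison` is a hypothetical name and the counts below are made up, not the post's data.

```java
// Hypothetical check: is the difference between two event rates bigger than noise?
// Assumes each count is Poisson, so its variance equals its mean.
class RateComparison {
    // z-score for rateA - rateB, where each rate is count / hours of sampling.
    // Returns NaN if both counts are zero.
    static double zScore(long countA, double hoursA, long countB, double hoursB) {
        double rateA = countA / hoursA;
        double rateB = countB / hoursB;
        // Var(count / hours) = count / hours^2 under the Poisson assumption.
        double variance = countA / (hoursA * hoursA) + countB / (hoursB * hoursB);
        return (rateA - rateB) / Math.sqrt(variance);
    }

    public static void main(String[] args) {
        // Made-up counts: 120 vs 100 long delays, each over 2 hours of sampling.
        double z = zScore(120, 2.0, 100, 2.0);
        // About 1.35; by the common |z| < 2 rule of thumb this is within noise.
        System.out.printf("z = %.2f%n", z);
    }
}
```

Since the noise on a count grows only with its square root, running for longer is what shrinks the relative error, which is why a longer test would be needed to separate the 50% and 10% cases.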

Conclusion

Using thread affinity without isolating the CPU doesn’t appear to help much on this system. I suspect this is true of other versions of Linux and even Windows. Where affinity and isolation do help, it may still make sense to busy wait as well, as it appears the scheduler interrupts the thread less often if you do.
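For reference, busy waiting in Java means polling a shared flag in a loop instead of blocking, so the thread never hands its CPU back to the scheduler. This is a minimal sketch, not code from the tests above: `BusyWait` and `spinUntil` are hypothetical names, and `Thread.onSpinWait()` requires Java 9 or later.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical example: wait for a flag by spinning instead of blocking.
class BusyWait {
    // Spins until the flag is set and returns how many times it polled.
    // The thread never sleeps or parks, so it keeps the CPU the whole time.
    static long spinUntil(AtomicBoolean flag) {
        long polls = 0;
        while (!flag.get()) {
            polls++;
            // Java 9+ hint that this is a spin loop; it does not yield the CPU.
            Thread.onSpinWait();
        }
        return polls;
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicBoolean ready = new AtomicBoolean();
        Thread producer = new Thread(() -> {
            try {
                Thread.sleep(10);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            ready.set(true);
        });
        producer.start();
        long polls = spinUntil(ready);
        producer.join();
        System.out.println("flag seen after " + polls + " polls");
    }
}
```

The trade-off is that a spinning thread burns a whole CPU even when there is nothing to do, which is why it pairs naturally with binding to an isolated core that nothing else needs.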