How expensive is a method call in Java

We have all been there. Looking at the poorly designed code while listening to the author’s explanations about how one should never sacrifice performance over design. And you just cannot convince the author to get rid of his 500-line methods because chaining method calls would destroy the performance.

Well, it might have been true in 1996 or so. But since then JVM has evolved to be an amazing piece of software. One way to find out about it is to start looking more deeply into optimizations carried out by the virtual machine. The arsenal of techniques applied by the JVM is quite extensive, but lets look into one of them in more details. Namely method inlining. It is easiest to explain via the following sample:

int sum(int a, int b, int c, int d) {

return sum(sum(a, b),sum(c, d));

}

int sum(int a, int b) {

return a + b;

}When this code is run, the JVM will figure out that it can replace it with a more effective, so called “inlined” code:

int sum(int a, int b, int c, int d) {

return a + b + c + d;

}You have to pay attention that this optimization is done by the virtual machine and not by the compiler. It is not transparent at the first place why this decision was made. After all – if you look at the sample code above – why postpone optimization when compilation can produce more efficient bytecode? But considering also other not-so obvious cases, JVM is the best place to carry out the optimization:

- JVM is equipped with runtime data besides static analysis. During runtime JVM can make better decisions based on what methods are executed most often, what loads are redundant, when is it safe to use copy propagation, etc.

- JVM has got information about the underlying architecture – number of cores, heap size and configuration and can thus make the best selection based on this information.

But let us see those assumptions in practice. I have created a small test application which uses several different ways to add together 1024 integers.

- A relatively reasonable one, where the implementation just iterates over the array containing 1024 integers and sums the result together. This implementation is available in InlineSummarizer.java.

- Recursion based divide-and-conquer approach. I take the original 1024 – element array and recursively divide it into halves – the first recursion depth thus gives me two 512-element arrays, the second depth has four 256-element arrays and so forth. In order to sum together all the 1024 elements I introduce 1023 additional method invocations. This implementation is attached as RecursiveSummarizer.java.

- Naive divide-and-conquer approach. This one also divides the original 1024-element array, but via calling additional instance methods on the separated halves – namely I nest sum512(), sum256(), sum128(), …, sum2() calls until I have summarized all the elements. As with recursion, I introduce 1023 additional method invocations in the source code.

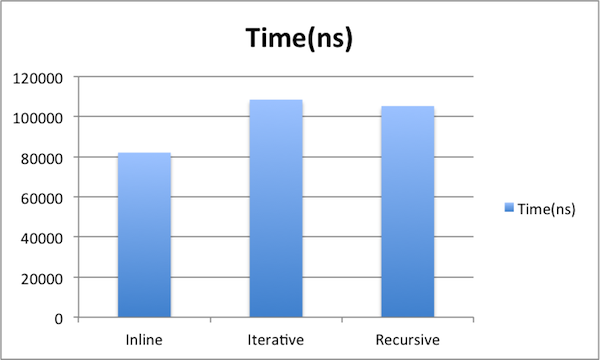

And I have a test class to run all those samples. The first results are from unoptimized code:

As seen from the above, the inlined code is the fastest. And the ones where we have introduced 1023 additional method invocations are slower by ~25,000ns. But this image has to be interpreted with a caveat – it is a snapshot from the runs where JIT has not yet fully optimized the code. In my mid-2010 MB Pro it took between 200 and 3000 runs depending on the implementation.

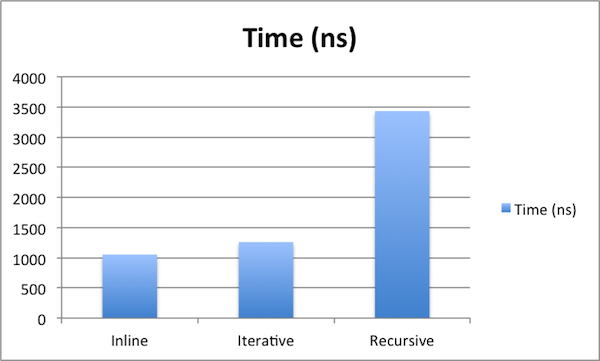

The more realistic results are below. I have ran all the summarizer implementations for more than 1,000,000 times and discarded the runs where JIT has not yet managed to perform it’s magic.

We can see that even though inlined code still performed best, the iterative approach also flew at a decent speed. But recursion is notably different – when iterative approach close in with just 20% overhead, RecursiveSummarizer takes 340% of the time the inlined code needs to complete. Apparently this is something one should be aware of – when you use recursion, the JVM is helpless and cannot inline method calls. So be aware of this limitation when using recursion.

Recursion aside – method overheads are close to being non-existent. Just 205 ns difference between having 1023 additional method invocations in your source code. Remember, those were nanoseconds (10^-9 s) over there that we used for measurement. So thanks to JIT we can safely neglect most of the overhead introduced by method invocations. The next time when your coworker is hiding his lousy design decisions behind the statement that popping through a call stack is not efficient, let him go through a small JIT crash course first. And if you wish to be well-equipped to block his future absurd statements, subscribe to either our RSS or Twitter feed and we are glad to provide you future case studies.

Full disclosure: the inspiration for the test case used in this article was triggered by Tomasz Nurkiewicz blog post.

Reference: How expensive is a method call in Java from our JCG partner Nikita Salnikov Tarnovski at the Plumbr Blog blog.

“when you use recursion, the JVM is helpless”

You can note here that the scala compiler is capable of tuning a recursion into a loop if the recursion can be compiled with tail call optimization.